In 2019, cognitive neuroscientist Frida Polli wrote in the Harvard Business Review:

“AI holds the greatest promise for eliminating bias in hiring for two primary reasons… 1. AI can eliminate unconscious human bias [and] 2. AI can assess the entire pipeline of candidates rather than forcing time-constrained humans to implement biased processes to shrink the pipeline from the start.” [1]

Five years later, we want to know: Has AI lived up to this promise?

Looking at the data, we believe that while AI presents exciting opportunities for hiring teams, they must approach it cautiously to ensure it doesn’t make hiring bias worse.

How are employers using AI in hiring?

In 2024, IBM surveyed thousands of IT professionals across 20 countries. Forty-two percent of organizations with more than 1,000 employees reported actively using AI, and 19% of those use it for HR and talent acquisition.

Many employers use AI to automate repetitive HR tasks, such as generating candidate update emails, scheduling interviews, tracking applicants, and writing job descriptions.

By streamlining the more repetitive parts of the hiring process, employers can reduce time to hire and free up HR managers to focus on the more strategic aspects of their work.

However, when AI is used to make decisions, there are concerns about its ethics, particularly its effect on bias in the hiring process. (IBM’s survey shows that ethical concerns are among the top three reasons some organizations haven’t explored AI yet.)

One form of bias that’s common in hiring occurs when someone makes a decision about a candidate based solely on a first impression. This kind of bias can lead to discrimination, where candidates are treated differently – typically less favorably – because of characteristics like race, age, gender, or disability.

These concerns are especially relevant when employers use ChatGPT to summarize resumes – including AI-generated resumes – and vet candidates, or implement AI-powered interview tools to analyze candidates’ reactions and interview performance.

Does AI reduce or reinforce bias?

There are arguments on both sides of the question of whether AI helps reduce bias in the hiring process.

How AI reduces hiring bias

Supporters argue that human-run HR processes always involve a risk of bias. When humans make decisions, explicit or unconscious biases can cloud the process.

By contrast, AI can be designed to disregard candidate characteristics that humans may – intentionally or not – consider when making hiring decisions, such as age, race, and gender. There’s also AI-powered software that can remove potentially biased wording from job ads and role descriptions, as well as applicant tracking systems that can strip candidates’ identifying information from their applications.
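As a rough illustration of what stripping identifying information can look like, the sketch below removes a fixed set of fields from a candidate record before anyone (human or AI) reviews it. The field names and the `redact_candidate` helper are hypothetical; real applicant tracking systems use their own schemas, and details buried in free text such as a resume body would need separate handling.

```python
from typing import Any

# Hypothetical field names that could reveal protected characteristics.
# Real ATS schemas will differ.
IDENTIFYING_FIELDS = frozenset(
    {"name", "email", "photo_url", "date_of_birth", "gender", "address"}
)


def redact_candidate(record: dict[str, Any]) -> dict[str, Any]:
    """Return a copy of the record without identifying top-level fields.

    The original record is not modified. Identifying details inside
    free-text fields (e.g. a resume body) are left untouched.
    """
    return {key: value for key, value in record.items() if key not in IDENTIFYING_FIELDS}


candidate = {
    "name": "Jordan Smith",
    "date_of_birth": "1970-04-12",
    "skills": ["SQL", "financial modeling"],
    "years_experience": 12,
}
print(redact_candidate(candidate))
```

Removing fields doesn’t remove proxies, though: details such as a graduation year or club membership can still hint at a candidate’s age, gender, or race.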

A few studies suggest that AI may reduce hiring bias, for example at the resume screening stage or when assessing candidates’ suitability for a role. However, these studies highlight the need for further research, as their results largely depended on how the AI model was trained, and in some instances, this information wasn’t available to the researchers.

Another argument for AI’s ability to reduce hiring bias is that it can review large numbers of candidates. Whereas HR teams can review tens or perhaps a few hundred applications, AI can quickly assess hundreds or even thousands. The more candidates you can consider in your hiring process, the more diverse your talent pool is likely to be.

But there are two sides to this coin. As the Equal Employment Opportunity Commission (EEOC) notes, “While AI systems may offer new opportunities for employers, they also have the potential to discriminate.”

How AI makes hiring bias worse

A working paper by researchers at Maastricht University reviewed existing literature on the issue and found three main sources of AI bias.

The first, and most prominent, is unrepresentative data and training samples. AI models are trained on datasets, and if those datasets contain biases, the AI is likely to replicate them.

A well-known example of this problem is Amazon’s resume screening tool. Amazon was an early adopter of AI-powered solutions and developed its own tool to review resumes. However, the company stopped using it after discovering the tool was biased against women. Because it was trained on historical hiring data that mostly represented men, the tool often ruled out qualified female candidates for technical positions.

As Dimitri Krabbenbourg, Senior Account Executive at TestGorilla, explains:

“AI is trained on/with certain groups of people. If you don’t belong to that group of people, you will most likely not fit in as well as people who do, increasing bias in the hiring process.”

Another source of bias is mislabeled outcomes in the training data. For example, one AI resume screening tool identified being named Jared and having played high school lacrosse as the factors most indicative of job performance.

Finally, bias in AI can stem from the programmers themselves. AI can inherit the biases of the people who create and train it. This problem is often linked to tech’s lack of diversity. If AI creators don’t reflect their users’ diversity, they may not recognize biases they haven’t personally experienced.

Examples of potential AI hiring bias

Several studies have highlighted the potential of AI to reinforce or exacerbate hiring bias.

1. Disability bias

Research by the University of Washington found that ChatGPT ranked resumes with disability-related awards and credentials lower than identical resumes with these references removed.

The team created six versions of one of the researchers’ resumes, adding different awards and memberships suggestive of various disabilities to each one. Then, the team asked ChatGPT to rank the resumes.

The authors found that “subtle and overt bias towards disability emerged, including stereotypes, over-emphasizing disability and DEI experience, and conflating this with narrow experience or even negative job-related traits.”

Researchers significantly reduced the level of bias by instructing ChatGPT to be less ableist.

2. Bias toward non-native English speakers

A Stanford University study found that seven AI text detectors reached incorrect conclusions about the writing of non-native English speakers. The detectors’ overall accuracy on essays by US-born writers was “near-perfect.” In stark contrast, 61.22% of the essays written by non-native English speakers were misclassified as AI-generated.

The study’s senior author, James Zou, explained why this happens:

“[These tools] typically score based on a metric known as ‘perplexity,’ which correlates with the sophistication of the writing – something in which non-native speakers are naturally going to trail their US-born counterparts.”

These findings suggest that using AI text detectors in a hiring context could significantly disadvantage candidates whose first language isn’t English.
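To make Zou’s point more concrete: perplexity measures how predictable a text is to a language model. It is the exponential of the average negative log-probability the model assigns to each successive token, so plainer, more predictable wording scores lower. The sketch below computes it from a list of per-token probabilities and applies a simple cut-off. The probabilities, the `looks_ai_generated` helper, and its `threshold` are invented for illustration; they are not the settings of any detector in the Stanford study.

```python
import math


def perplexity(token_probs: list[float]) -> float:
    """Perplexity = exp(-(1/N) * sum(log p_i)) over N per-token probabilities."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_neg_log_prob = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log_prob)


def looks_ai_generated(token_probs: list[float], threshold: float = 10.0) -> bool:
    """Toy detector: flag text whose perplexity falls below an arbitrary cut-off."""
    return perplexity(token_probs) < threshold


# Hypothetical per-token probabilities from some language model.
plain_wording = [0.5, 0.4, 0.6, 0.5]      # common, predictable word choices
varied_wording = [0.05, 0.1, 0.02, 0.08]  # rarer, less predictable word choices

for label, probs in [("plain", plain_wording), ("varied", varied_wording)]:
    print(label, round(perplexity(probs), 2), looks_ai_generated(probs))
```

Under a rule like this, the plainly worded text is flagged and the more varied text is not, regardless of who actually wrote either one. That is the mechanism Zou describes.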

3. Racial bias

A Bloomberg experiment revealed racial bias in OpenAI’s GPT-3.5, the model behind ChatGPT.

Bloomberg tasked ChatGPT with ranking a series of eight resumes for a financial analyst role 1,000 times. These resumes were for equally qualified applicants, and the fictitious names on the resumes were chosen to reflect specific races or ethnicities (based on voter and census data).

The result? Resumes with fictitious names common to Black Americans were the least likely to be picked as the top candidate.

In another study, researchers at the University of Maryland used two facial recognition services, Face++ and Microsoft AI, to analyze the emotional content of NBA players’ photos. They found that both tools recognized “Black players as having more negative emotions than white players.” These findings are directly relevant to hiring, as some employers use facial recognition technology in interviews.


Why should employers care about the impact of AI on bias?

Reducing the risk of bias in the hiring process ensures candidates have equal access to opportunities and a fair chance of getting hired.

Aside from these important fairness considerations, employers should care about reducing bias in hiring because doing so helps them build a more diverse workforce.

There’s little argument against the business case for diversity:

Analyzing data from 366 public companies worldwide, McKinsey found that those with the highest racial and ethnic diversity are 35% more likely to have financial returns above the average company in their industry.

A diverse workforce helps employers attract top talent. In a Glassdoor survey with 2,745 participants, 76% of job-seekers said a diverse workforce is a key factor when choosing between employers and job offers.

According to Great Place to Work, employees are 5.4 times more likely to stay with their employer long-term when they know they'll be treated fairly.

Using AI in the hiring process also creates legal risk for employers. Hiring bias can lead to allegations of discrimination based on personal characteristics protected by US civil rights laws, such as Title VII of the Civil Rights Act, the Americans with Disabilities Act (ADA), and the Age Discrimination in Employment Act (ADEA).

We’re already seeing legal action, including lawsuits and regulatory complaints, against employers who use AI in their hiring practices and the organizations that provide this technology. Here are some examples:

HireVue, a hiring platform used by various large corporations, was the subject of a complaint filed with the Federal Trade Commission (FTC) by the Electronic Privacy Information Center (EPIC) over its use of facial recognition technology. The complaint alleged that HireVue’s use of this technology “violates widely adopted ethical standards for the use of [AI] and is unfair” and that it produced results that were “biased, unprovable, and not replicable.” HireVue later withdrew this technology from its platform.

The American Civil Liberties Union (ACLU) recently filed an FTC complaint against Aon, which provides hiring tools, including personality tests and video interview tools. The ACLU alleges that Aon’s hiring tools discriminate against individuals based on disability or race, which Aon disputes.

A pending lawsuit against Workday alleges that the company’s AI screening software discriminates against candidates based on race, disability, and age. Workday denies these claims.

iTutorGroup, an online tutoring agency, reached a $365,000 settlement in a first-of-its-kind EEOC lawsuit that alleged the agency’s hiring software screened out female applicants over 55 and male applicants over 60.

These risks should encourage employers to take a considered approach to AI in hiring, weighing the technology’s advantages against the need to treat candidates fairly.

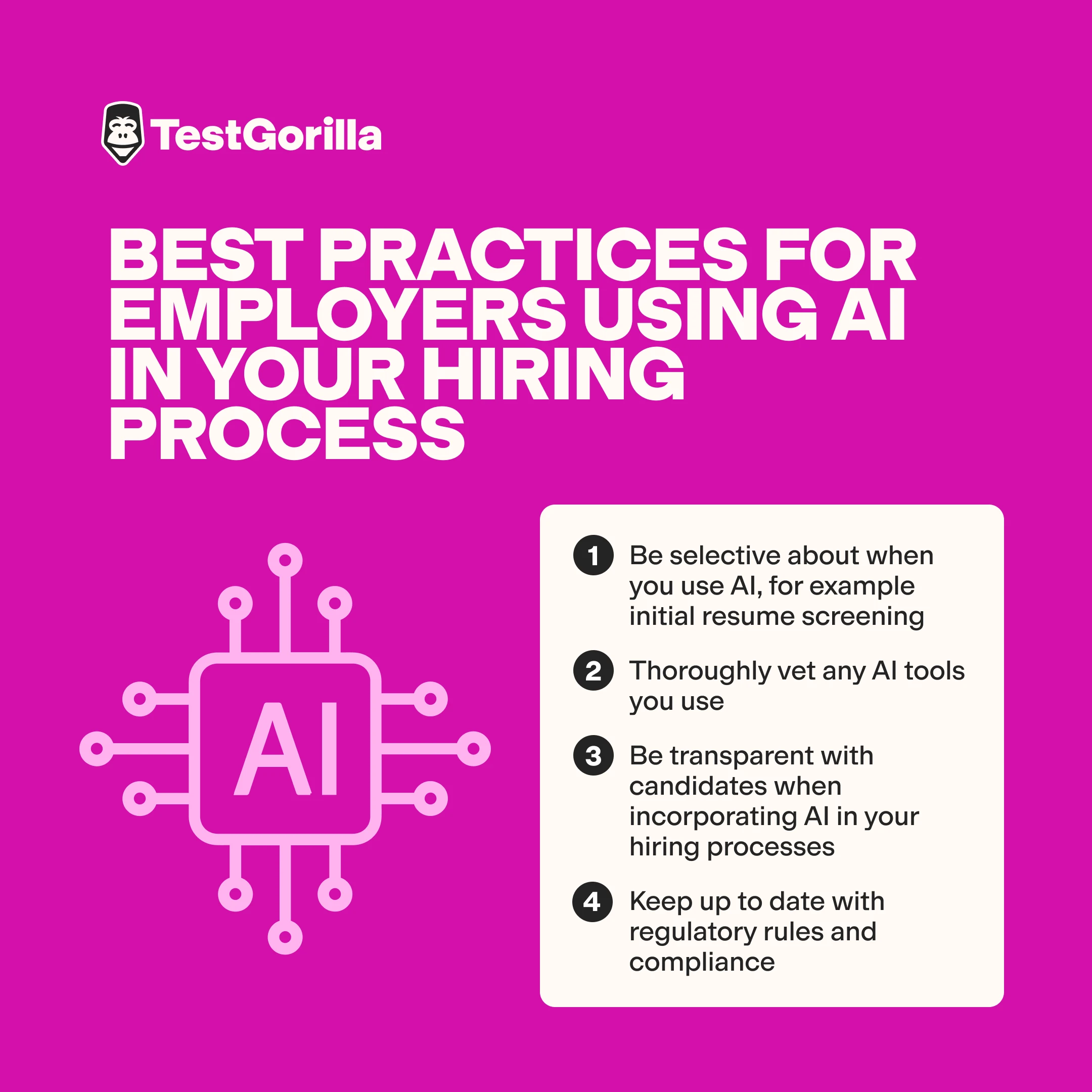

Best practices for employers

Chances are you’re already using or considering using AI in your hiring processes.

While we accept that AI in recruitment is here to stay, we believe there are various steps you can take to reduce the risk of it creating bias.

1. Be selective about when you use AI

Deciding when to use AI in your hiring process can significantly reduce the risk of bias.

For example, given the risk of bias, it’s best not to use AI for initial resume screening. Instead, consider alternatives such as talent assessments. These tests are designed to measure candidates’ hard and soft skills according to psychometric principles, helping you identify which candidates to interview or shortlist.

Many employees agree that a skills-based approach supports fairer hiring. Of the 1,100 employees worldwide we surveyed for our 2024 State of Skills-Based Hiring report, 84% said that skills-based hiring helps reduce conscious and unconscious bias in the hiring process.

2. Thoroughly vet any AI tools you use

Before choosing an AI tool for your hiring process, it’s essential to review it for potential bias issues. The Society for Industrial and Organizational Psychology (SIOP) recently published a paper on this topic, and one of its recommendations is to “actively pursue ethical and responsible use of AI.”

Knowing where to start may feel challenging, especially if, like most users, you have a limited technical understanding of AI and its algorithms. However, there’s extensive guidance available.

For starters, ask potential providers what bias audits have been done on their software. Also, ask them about their tools’ debiasing processes and built-in bias detection features.

Vendors should be able to provide explanations and documentation showing how their tools comply with recognized ethical AI standards, including the Organisation for Economic Co-operation and Development’s (OECD) AI Principles.

You can also conduct your own audit. The Data and Trust Alliance – a group of CEOs committed to the responsible use of AI – has developed Algorithmic Bias Safeguards that set out criteria employers can use to evaluate the software they’re using. In addition, there’s a cottage industry of psychometricians who will conduct these audits as consultants.
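One common starting point for such an audit is to compare selection rates across groups. Under the federal Uniform Guidelines on Employee Selection Procedures (the “four-fifths rule”), a group whose selection rate is less than 80% of the rate for the most-selected group is generally regarded as evidence of adverse impact. New York City’s bias-audit rules report a similar “impact ratio.” The sketch below computes both from applicant and selection counts. The group labels, counts, and helper names are invented for illustration. A real audit would also need to consider sample sizes, statistical significance, and intersectional categories.

```python
def selection_rates(applicants: dict[str, int], selected: dict[str, int]) -> dict[str, float]:
    """Share of each group's applicants who were selected at this stage."""
    return {
        group: selected.get(group, 0) / count
        for group, count in applicants.items()
        if count > 0  # skip empty groups to avoid dividing by zero
    }


def impact_ratios(rates: dict[str, float]) -> dict[str, float]:
    """Each group's selection rate divided by the highest group's rate."""
    top = max(rates.values())
    if top == 0:
        raise ValueError("no group had any selections; impact ratios are undefined")
    return {group: rate / top for group, rate in rates.items()}


def below_four_fifths(ratios: dict[str, float], threshold: float = 0.8) -> list[str]:
    """Groups whose impact ratio falls under the four-fifths (80%) rule of thumb."""
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Hypothetical applicant and selection counts for one screening stage.
applicants = {"group_a": 200, "group_b": 150, "group_c": 100}
selected = {"group_a": 60, "group_b": 30, "group_c": 28}

rates = selection_rates(applicants, selected)
ratios = impact_ratios(rates)
for group in sorted(ratios):
    print(f"{group}: selection rate {rates[group]:.2f}, impact ratio {ratios[group]:.2f}")
print("Below four-fifths:", below_four_fifths(ratios))
```

With these made-up numbers, group_b’s selection rate (20%) is about two-thirds of group_a’s (30%), so it is flagged. A ratio above 0.8 doesn’t prove a tool is fair. It only means this particular check didn’t flag it.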

Vetting your AI tools not only helps ensure fairness for candidates but also helps you meet your compliance obligations. In the event of an EEOC complaint, you must be able to show the information you relied on to make a hiring decision. To do this, you’ll need to understand the processes behind the software you use during recruitment and confirm that they don’t introduce bias.

3. Be transparent with candidates

A key element of incorporating AI in your hiring processes is telling candidates you’re doing so. This allows candidates to make an informed decision about participating in your recruitment.

If successful candidates take part in a hiring process without knowing it uses AI and later find out, this may undermine trust in the employer-employee relationship and affect employee retention.

For an example of what not to do, read this employee’s account of being interviewed by an AI bot – and only realizing it after the interview started.

4. Keep up to date with regulatory rules and compliance

Regulations and guidelines are already in place for the responsible and fair use of AI in the workplace. By staying informed about these, you can ensure your use of AI is compliant and reduce the risk of bias in your hiring process.

For general guidelines on the ethical use of AI, see the Biden administration’s Blueprint for an AI Bill of Rights. This document includes five principles to consider when designing and using AI, including in the context of employment decisions. The OECD’s AI Principles also contain a provision addressing “human-centered values and fairness.”

Additionally, the EEOC has a technical assistance guide on how AI tools may disadvantage those with disabilities and how employers can reduce this risk.

Also, keep an eye on emerging state and local laws regarding employers’ use of AI. For instance, since July 2023, New York City’s Local Law 144 has required employers to have any automated employment decision tools they use audited for bias and to disclose their use to candidates.

In Illinois, employers must obtain candidates’ informed consent before using AI video interviews. If an employer relies solely on AI analysis of video interviews to decide whether to interview the candidate in person, it must collect and report candidates’ demographic data to the state government, which will analyze it for potential racial bias. Various other states are considering related legislation.

Leveraging AI and human judgment for fairer outcomes

AI offers exciting possibilities for the future of hiring, but we must approach its use cautiously. Ensuring AI doesn't reinforce or worsen hiring bias requires thoughtful implementation, continued oversight, an emphasis on skills-based hiring, and compliance with emerging laws and regulations.

By blending technological innovation with human judgment, we can create a fairer, more equitable hiring process that leverages the strengths of both.

[1] Frida Polli, “Using AI to Eliminate Bias from Hiring,” Harvard Business Review, October 2019, https://hbr.org/2019/10/using-ai-to-eliminate-bias-from-hiring.
