Employee rights and AI: How to use new tech ethically in the workplace

A recent McKinsey survey of 1,363 respondents worldwide found that 72% of organizations have adopted AI in at least one business function. [1]

This is hardly surprising given the competitive advantages the technology offers. However, with regulations tightening and scrutiny increasing, how can you take advantage of AI without infringing on your employees’ rights?

In this article, we explore this question and share strategies to help your organization take a considerate, ethical approach to using AI.

What does AI in the workplace look like?

We’re in the midst of an AI revolution at work, with the technology increasingly used to hire and manage staff. According to a recent survey of 2,366 HR professionals by the Society for Human Resource Management (SHRM), one in four employers use AI in HR-related activities.

Hiring is one of AI’s most popular use cases, with 64% of these employers using it for talent acquisition. Generative AI tools like ChatGPT, AI-powered applicant tracking systems, and HR analytics platforms are all gaining ground. Employers use AI to screen candidates by scanning resumes, to write and send updates to candidates, and to analyze interviewees’ reactions and responses.

AI’s popularity is growing rapidly. A Gartner survey of 179 HR leaders found that 38% are trialing, planning to use, or already using generative AI, double the share recorded six months earlier.

It’s easy to understand why. According to the authors of a 2024 study on the impact of AI on the workplace and employees’ digital wellbeing, AI can potentially:

Boost efficiency by streamlining activities and processes

Reduce the risk of human errors

Enhance decision-making

Support creativity and innovation by automating routine tasks

From an HR perspective, AI can help reduce time-to-hire and cost-of-hire and aid better hiring decisions.

AI and employee rights - key concerns

Despite AI’s benefits, its use raises several concerns about employees’ legal rights. Here are four of the main ones.

1. Discrimination

One of the main ways AI can infringe on employees’ rights is discrimination due to bias.

Several sources of AI bias exist. For example, generative AI is trained on large datasets. If those datasets contain biases, the biases can appear in the AI’s output.

A well-known example came to light at Amazon in 2018. The company discovered that the AI-powered resume review software it had built displayed a gender bias, favoring male candidates over female ones. The bias stemmed from the tool’s training data: resumes submitted over the previous ten years, mostly by men, reflecting male dominance in the tech industry.

Biases can also stem from the algorithms themselves due to developer bias.

And AI bias can lead to legal issues, especially if it violates anti-discrimination laws.

In the US, key federal anti-discrimination laws include:

Title VII of the Civil Rights Act.

Age Discrimination in Employment Act (ADEA).

Americans with Disabilities Act (ADA).

Equal Pay Act (EPA).

Anti-discrimination laws also exist at the state or local level, and many other countries have similar regulations. Employers should be aware of their obligations under these when considering AI’s impact on employees.

2. Labor rights

Workplace AI can also potentially conflict with labor laws.

For example, employers relying on AI to make decisions about performance management or termination could face unfair dismissal or unlawful termination claims. Employees could say the AI is biased, made errors, or didn’t fully consider their situation.

Uber recently faced a situation like this in the Netherlands when two drivers claimed an algorithm fired them. Although the court found the drivers weren’t subject to automated decision-making, Uber was ordered to provide further documents to the drivers to ensure transparency. This case – though based on data protection law rather than labor protections – highlights the growing concerns about labor rights issues like unfair dismissal and AI.

In the US, the National Labor Relations Board (NLRB), the federal agency that protects employees’ rights to organize and bargain collectively, has raised other concerns about AI’s impact on labor rights. For example, if employers use AI to monitor productivity by recording employees’ conversations or taking webcam shots, it could interfere with employees’ right to discuss pay and working conditions with one another.

3. Employee privacy and data security

Using AI to track employees’ productivity is becoming increasingly common. According to SHRM’s survey, 25% of employers use AI for performance management (the third most popular use case for AI in HR).

Some argue this approach helps refocus employees’ attention, spotlights underperforming employees who need support, and prevents time theft. Others say it makes employees anxious, hurts their morale, and risks breaching their privacy, because AI may collect sensitive personal data that goes beyond work-related activities.

When using AI like this, employers must comply with relevant data protection laws. Amazon France recently learned this the hard way when France’s data protection authority (CNIL) fined the company €32 million for monitoring its employees in an excessively intrusive way that breached the General Data Protection Regulation (GDPR).

Data protection laws you should consider include the:

Some data protection laws address rights related to AI use directly. For instance, Article 22 of the GDPR gives individuals the right not to be subject to decisions based solely on automated processing, including AI decision-making without human input, when those decisions have legal or similarly significant effects on them. Limited exceptions apply.

4. Job security

A big employee concern is the risk of AI taking their jobs. In recent research, Ernst & Young spoke to 1,000 US workers and found that 65% are anxious about AI replacing them.

We can see why: Many workers are concerned about AI’s impact on fair wages, reasonable working hours, and job stability. Plus, a job often goes beyond earning a paycheck – it also gives individuals a sense of worth and purpose.

The best insights on HR and recruitment, delivered to your inbox.

Biweekly updates. No spam. Unsubscribe any time.

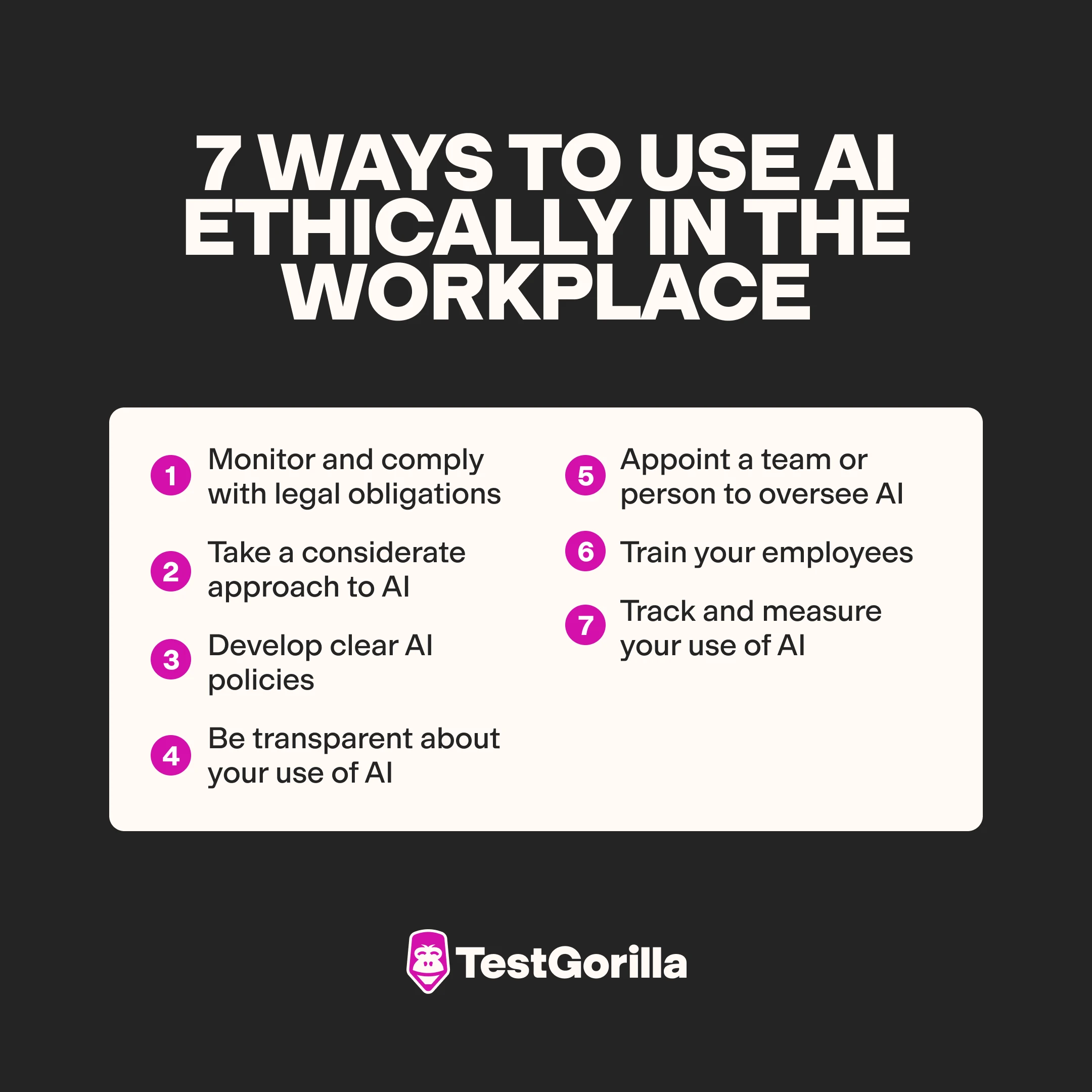

7 ways to use AI ethically in the workplace

You can take advantage of the benefits AI offers while protecting your employees’ rights in various ways.

1. Monitor and comply with legal obligations

As an employer, you must comply with laws regulating your AI use. Failing to do so might mean you use AI unethically, infringe on your employees’ rights, and potentially face penalties.

In addition to the general anti-discrimination, labor, and privacy laws discussed above, some jurisdictions have introduced specific AI laws. For example, Illinois has two AI laws:

The Artificial Intelligence Video Interview Act (AIVIA) requires employers to notify candidates, explain how the AI works, and obtain their consent before using AI to analyze video interviews. Employers that rely solely on AI analysis of video interviews to decide who gets an in-person interview must also collect and report candidates’ race and ethnicity data to the state for bias-auditing purposes.

A recent amendment to the Illinois Human Rights Act prohibits employers from using AI that unlawfully discriminates against candidates or employees based on a protected class, including by using zip codes as a proxy for a protected class. Employers must also notify candidates and employees when they use AI for employment decisions. The amendment takes effect on January 1, 2026.

Similar laws are being considered in other US states, including California, Georgia, Hawaii, and Washington – as well as in the UK.

This landscape is rapidly evolving, so staying current with any legal developments in this space that affect your business is essential. Subscribe to employment law newsletters, monitor state government websites, and speak to an employment attorney.

2. Take a considerate approach to AI

When using AI in your organization, it’s crucial to recognize its limitations. AI can reinforce biases and can produce plausible-sounding but false information (a phenomenon called “hallucination”). AI also lacks essential human traits for managing employees, such as empathy, creativity, and nuanced judgment.

So AI isn’t always the right solution. As discussed, AI screening in the early stages of hiring can lead to discrimination (and to missing out on top candidates), so these decisions may be better left to humans or to tools designed to reduce hiring bias, like skills tests.

There are also several safeguards you can use alongside AI to protect your employees’ rights. For instance, use human oversight and input in any AI-driven decisions. And when it comes to recruitment, use skills-based hiring.

Rather than relying solely on algorithms that look for educational background and past job titles to find the right candidates, skills-based hiring assesses each candidate’s specific skills and competencies needed for the job. Focusing on these objective criteria helps counteract biases in AI tools and supports fair hiring.

3. Develop clear AI policies

Policies provide your organization with a blueprint for using AI ethically. A good starting point is developing an internal AI bill of rights that sets out guiding principles for using AI in your organization.

From this, you can create AI-related policies or adapt your existing policies to address the use of AI. Policies you may need to review include:

AI governance, including guidelines and principles for adopting and managing AI in your organization

Ethical use of AI, including topics like transparency, accountability, and fairness

Privacy and data security, such as how employee data collected by AI will be used, stored, and shared

Dispute resolution processes that outline how employees can contest the use of AI or AI-driven decisions

Regularly review your AI-related policies and update them as needed.

Here are several valuable resources you can use to develop your AI bill of rights and policies:

EEOC’s technical assistance guide for assessing the adverse impact of AI in employment selection procedures

US Federal Government’s Blueprint for an AI Bill of Rights, which sets out key principles to consider when creating or using AI

US Department of Labor’s key principles for using AI in the workplace

4. Be transparent about your use of AI

Some jurisdictions already have laws that require employers to notify candidates or employees of their use of AI (New York City and Illinois, for example). However, even without a legal obligation, you should be transparent with your employees about how you use AI and how it affects them.

Failing to do so can undermine employee trust, which directly affects workplace performance. For instance, a study of the relationship between employee trust in management and workplace performance analyzed data from a UK survey of 2,680 workplaces. It found that higher trust is linked with better financial performance, labor productivity, and product or service quality.

One way to support transparency is to tell candidates that you use AI during hiring, so they can make an informed decision about whether to continue with the process. You can also make your AI policies available to all employees by including them in your onboarding materials and on your intranet.

We spoke to Rafi Friedman, who explained how his company, Coastal Luxury Outdoors, approaches transparent AI use:

We make sure our employees all know when, how, and why we use AI throughout our operations, including in HR. We use it right now to track things like time on task metrics, and when we have conversations with employees about their focus and productivity, we always lay all our cards on the table and give them a chance to explain any issues we've found.

5. Appoint a team or person to oversee AI

By having a dedicated person or team to oversee AI, you can reduce the risk of it violating your employees’ rights.

They can:

Consider when and how AI should be used in your organization.

Assess the potential impact of AI on employees’ rights and come up with measures to safeguard them – for example, by requiring human oversight where it’s used.

Monitor and review the implementation and use of AI in your organization. Their findings and reports could be made available to employees to help maintain transparency and trust.
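The human-oversight safeguard in the list above can also be built into the software itself. The minimal Python sketch below assumes a hypothetical hiring workflow in which the AI tool only produces recommendations; `AIRecommendation`, `FinalDecision`, and `finalize` are illustrative names, not part of any real HR platform. The design choice it shows is simple: no decision becomes final without a named human reviewer, and the AI’s original suggestion is stored next to the human’s decision so later audits can see where people overrode the tool.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Outcome(Enum):
    ADVANCE = "advance"
    REJECT = "reject"


@dataclass
class AIRecommendation:
    """What a (hypothetical) AI screening tool suggests; never final on its own."""
    candidate_id: str
    outcome: Outcome
    rationale: str


@dataclass
class FinalDecision:
    """A decision a named person signed off on, with the AI's suggestion kept for audits."""
    candidate_id: str
    outcome: Outcome
    decided_by: str
    ai_recommendation: Outcome


def finalize(
    rec: AIRecommendation,
    reviewer: Optional[str],
    reviewer_outcome: Optional[Outcome],
) -> FinalDecision:
    """Refuse to finalize unless a human reviewer has confirmed or overridden the AI."""
    if reviewer is None or reviewer_outcome is None:
        raise PermissionError(
            f"Decision for {rec.candidate_id} needs human review before it is final."
        )
    return FinalDecision(
        candidate_id=rec.candidate_id,
        outcome=reviewer_outcome,       # the human's call is what counts
        decided_by=reviewer,
        ai_recommendation=rec.outcome,  # recorded so audits can track overrides
    )


# Hypothetical example: the reviewer overrides an AI rejection.
rec = AIRecommendation("cand-001", Outcome.REJECT, "Low skills-test score")
decision = finalize(rec, reviewer="hiring.manager@example.com", reviewer_outcome=Outcome.ADVANCE)
print(decision.outcome.value)  # advance
```

Calling `finalize(rec, reviewer=None, reviewer_outcome=None)` raises a `PermissionError`, so an AI recommendation can’t silently become a decision. In practice, reviewers also need the time, information, and authority to disagree with the tool; a rubber-stamp review isn’t real oversight.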

6. Train your employees

According to SHRM, almost 30% of organizations that use AI provide their employees with AI-related training and upskilling. That means roughly 70% don’t, and are missing out on the benefits.

The purpose of delivering AI training to your employees is twofold. First, it helps them understand how the technology works, how to maximize its use, and how it can benefit them and the organization – encouraging uptake. Explaining the benefits and limitations of AI also helps reassure employees of their job security. For instance, training can show how AI can enhance workers’ capabilities and help their roles evolve rather than take their jobs.

Beyond this, training can also help employees learn how to use AI in the workplace ethically. Ernst & Young’s data reveals that around two-thirds of workers are anxious about their lack of knowledge about how to use AI ethically, with 80% saying more workplace training would reassure them about using AI.

7. Track and measure your use of AI

Employers who use AI in the workplace should conduct regular audits to review the impact of the technology. These audits should review HR data – including promotion rates, performance evaluations, hiring patterns, and diversity metrics – to identify instances of bias or discrimination.
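To make the audit step more concrete, one common check on hiring patterns compares selection rates across groups. The EEOC’s technical assistance on AI in selection procedures discusses the “four-fifths rule” as a general rule of thumb: if one group’s selection rate is less than 80% of the rate for the most-selected group, that may indicate adverse impact. The EEOC also cautions that the rule isn’t always an appropriate or sufficient test. The Python sketch below assumes you already have applicant and selection counts per group; the function names and figures are hypothetical, and it’s no substitute for a professional or legally required bias audit.

```python
from dataclasses import dataclass


@dataclass
class GroupOutcome:
    """Hiring results for one demographic group (hypothetical audit record)."""
    group: str
    applicants: int
    selected: int

    @property
    def selection_rate(self) -> float:
        # Share of this group's applicants who were selected (0.0 if no applicants).
        return self.selected / self.applicants if self.applicants else 0.0


def impact_ratios(outcomes: list[GroupOutcome]) -> dict[str, float]:
    """Divide each group's selection rate by the highest group's selection rate."""
    top_rate = max(o.selection_rate for o in outcomes)
    if top_rate == 0:
        raise ValueError("No group had any selections, so impact ratios are undefined.")
    return {o.group: o.selection_rate / top_rate for o in outcomes}


def flag_adverse_impact(outcomes: list[GroupOutcome], threshold: float = 0.8) -> list[str]:
    """Return groups whose impact ratio falls below the four-fifths (80%) rule of thumb."""
    return sorted(g for g, ratio in impact_ratios(outcomes).items() if ratio < threshold)


# Hypothetical counts for illustration only, not real audit data.
example = [
    GroupOutcome("Group A", applicants=200, selected=60),  # 30% selected
    GroupOutcome("Group B", applicants=150, selected=30),  # 20% selected
    GroupOutcome("Group C", applicants=100, selected=27),  # 27% selected
]

print(flag_adverse_impact(example))  # ['Group B']
```

Here Group B’s impact ratio is 0.20 / 0.30 ≈ 0.67, below 0.8, so it’s flagged for closer review. A flag is a prompt for investigation, not proof of discrimination; small groups in particular can produce misleading ratios.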

Shahrukh Zahir, Founder and CEO of recruiting platform Right Fit Advisors, spoke to Forbes about the value of involving employees in the audit process. He says, “Their insights on ethical considerations and potential pitfalls can be invaluable.”

While internal audits with team involvement can be valuable, third-party AI bias audits are also important for staying objective and meeting legal standards. Such audits may be legally required in some places, now or in the future. For instance, New York City employers that use automated employment decision tools must have those tools independently audited for bias.

Picking the right auditor is essential. Sam Shaddox, vice president of legal at talent intelligence platform SeekOut, says, “A third-party AI bias assessment has to be conducted by a neutral third party that has expertise in AI, data analysis, relevant regulations and industry frameworks.”

Why you should balance AI and employee rights

Caring about your employees doesn’t just help them – there’s a strong business case for considering the potential impacts of AI on your workers.

1. Reduces your legal risk

Although AI-specific laws are just now gaining traction, we’re already seeing examples of how existing regulations – like anti-discrimination laws – provide employees with a potential basis for discrimination claims regarding their employers’ use of AI.

For instance, iTutorGroup, an online tutoring company, was the subject of an EEOC lawsuit alleging that its software discriminated by age, automatically rejecting female applicants aged 55 or older and male applicants aged 60 or older. The resolution? A $365,000 settlement.

Understanding how AI impacts your employees’ rights can help you decrease your legal risk.

2. Improves ROI on AI tools

Incorporating AI into your HR practices takes time and money. You must invest in technology, employee training, and ongoing monitoring and maintenance.

From a business perspective, your AI must deliver a solid return on investment, and using it ethically is one way to achieve this.

Here’s why: When your employees are comfortable using the technology, it's more likely to achieve its intended goals. According to Ernst & Young data, 77% of employees would feel more comfortable about AI in the workplace if senior management promoted its ethical and responsible use.

The authors of a study on the impact of AI on the workplace and employees’ digital wellbeing explain, “Fostering trust in AI as a collaborator [is] crucial for a smooth transition and optimal performance in the AI-powered workplace.”

3. Supports workplace diversity

Without finding the right balance between your use of AI and your workers’ rights, you risk limiting the diversity of your workforce through AI-perpetuated discrimination. This is a big misstep given the benefits diversity offers businesses, including:

Greater creativity and innovation

More engaged employees who are less likely to leave

Striking a balance between AI and employee rights

AI presents exciting opportunities for employers. But it also comes with risks, especially regarding your employees' rights.

To use AI effectively while safeguarding your employees’ rights, consider adopting an AI bill of rights, being transparent about its use, and appointing a dedicated team to oversee its application. Also, remember that AI isn’t always the best solution in the workplace. Other tools and practices, like skills-based hiring, can prevent or counteract AI issues.

The main message? Make the most of AI by using it ethically, supporting your employees’ rights, and maintaining their trust.

Disclaimer: The information in this article is a general summary for informational purposes and is not intended to be legal advice. Laws are subject to constant change, and their applications vary based on your individual circumstances. You should always seek legal advice from a qualified attorney about your legal obligations as an employer. While this summary is intended to be informative, we cannot guarantee its accuracy or applicability to your situation.

[1] McKinsey, The state of AI in early 2024 (2024), https://www.mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai
