As diversity, inclusion, and equity take center stage in social justice conversations, hiring managers are becoming increasingly concerned about bias in the recruitment process.

We already know that the traditional unstructured interview is a nightmare when it comes to unconscious bias (and isn't a reliable predictor of workplace success).

Many recruiters have turned to skills-based testing to reduce the likelihood of such biases hurting hiring outcomes, while also benefiting from the efficiencies this approach to hiring brings.

But critics of skills-based hiring have claimed that these tests may not be bias-free.

Sure, an anonymous test can't suffer from accent bias, and it can be free of the unconscious bias that leads assessors to judge applicants differently based on their gender or on a cultural background that differs from the assessor's own.

But could there be ways an anonymous test could still produce biased results?

Researchers work hard to eliminate bias from the experiments they design, which shows that bias can creep into even carefully built instruments. It stands to reason that the same could happen in the world of pre-employment testing, right?

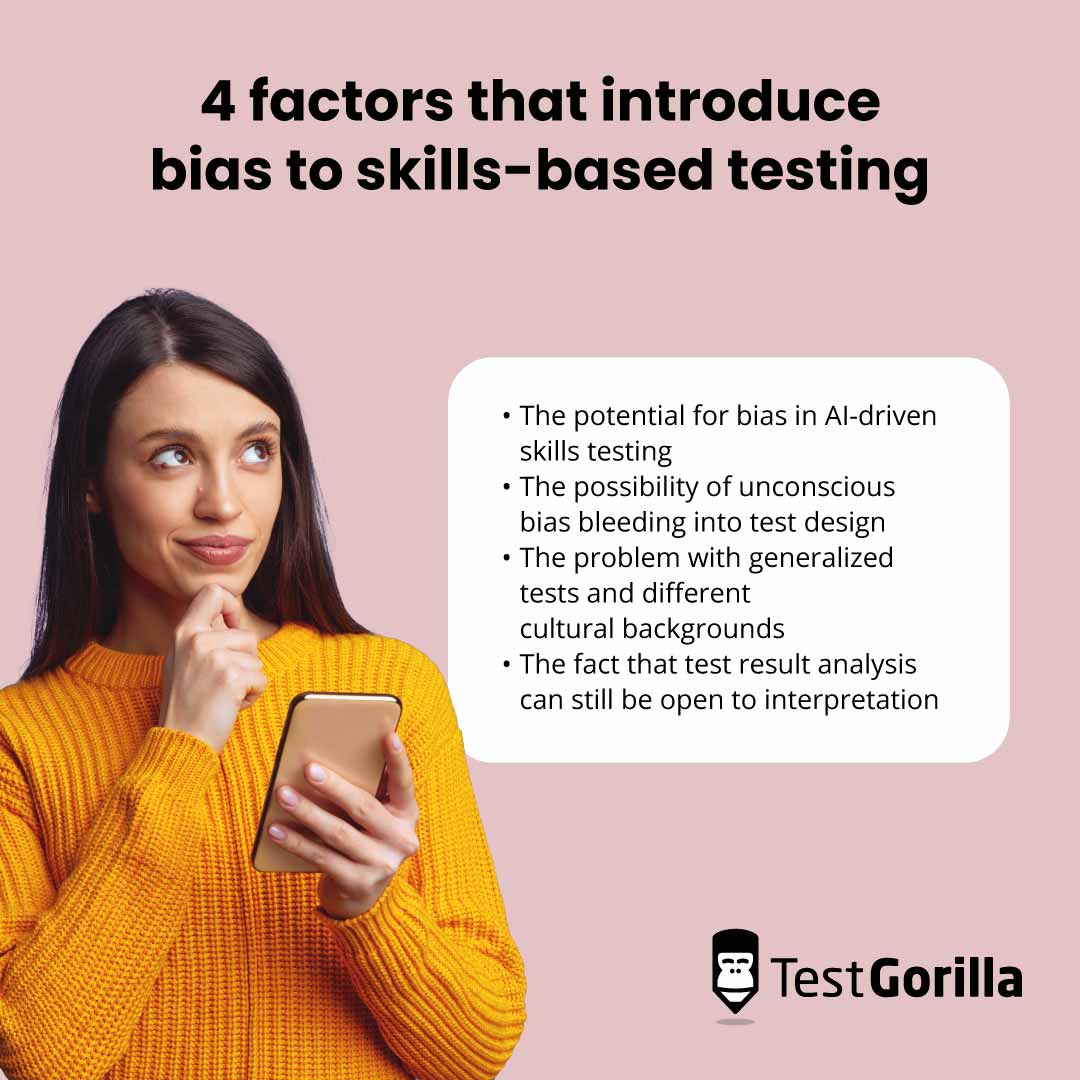

In this article, we’ll explore that claim across four dimensions:

The potential for bias in AI-driven skills testing

The possibility of unconscious bias bleeding into test design

The problem with generalized tests and different cultural backgrounds

The fact that test result analysis can still be open to interpretation

A quick note, though:

You may have noticed that we are a company that offers online pre-employment testing. We're proponents of the method and have skin in the game.

Our goal in this article is not to dismiss valid criticism but to explore the legitimacy of these concerns and provide guidance on the limitations of skills testing in specific circumstances.

With this in mind, let’s explore the question together:

Can skills-based tests still be biased?

Let’s get some definitions out of the way

Before we look at the potential for bias to exist within the realm of skills-based testing, we must all understand what we mean when we say “skills testing” and “bias.”

Skills testing: What does it mean?

Skills testing is the use of structured, objective assessments to measure the specific skills and abilities relevant to the requirements of a job.

Let’s say, for instance, you’re hiring a developer.

If the successful applicant needs to be able to work with CSS, you would use an online CSS test to gauge their abilities against predefined criteria.

For a deeper dive into the subject, check out our guide: Your hiring team’s guide to pre-employment skills testing.

Bias: What does it mean?

In the context of testing, bias exists when an assessment produces results that are systematically unfair to a particular group.

Bias in testing doesn't depend on intent. That is, a test is biased if it produces unfair results, even if the test creator had no intention of doing so when designing it.

However, not every test that produces different results between particular groups is biased.

For example, a test designed to measure grip strength would show a large effect of gender on maximal handgrip effort, but this doesn’t necessarily mean the test is biased against women.

By contrast, if a test designed to assess an applicant's CSS skills produced systematically different results for male and female test takers, that would point to some form of bias in how the test was constructed.

Okay, with those definitions out of the way, let’s look at how skills-based tests could be subject to bias.

The potential for bias in AI-driven skills testing

Organizations are rapidly integrating artificial intelligence into many aspects of the workplace, and the world of hiring and recruiting is no exception.

On the surface, this looks like a good thing.

AI is free from the kinds of biases that plague humans, right?

As it turns out, no.

In 2018, Amazon scrapped an AI-powered recruiting tool after it discovered that the tool's algorithm had a significant bias against women.

The company had trained the tool on patterns in the resumes it had received and judged over the previous 10 years. Because the business already had a history of favoring male applicants, the AI absorbed that bias into its decision-making.[1]

This story reveals a major issue with using AI in the hiring process: If you train the AI on data sets that already exhibit some form of bias, it integrates that bias into its algorithm.

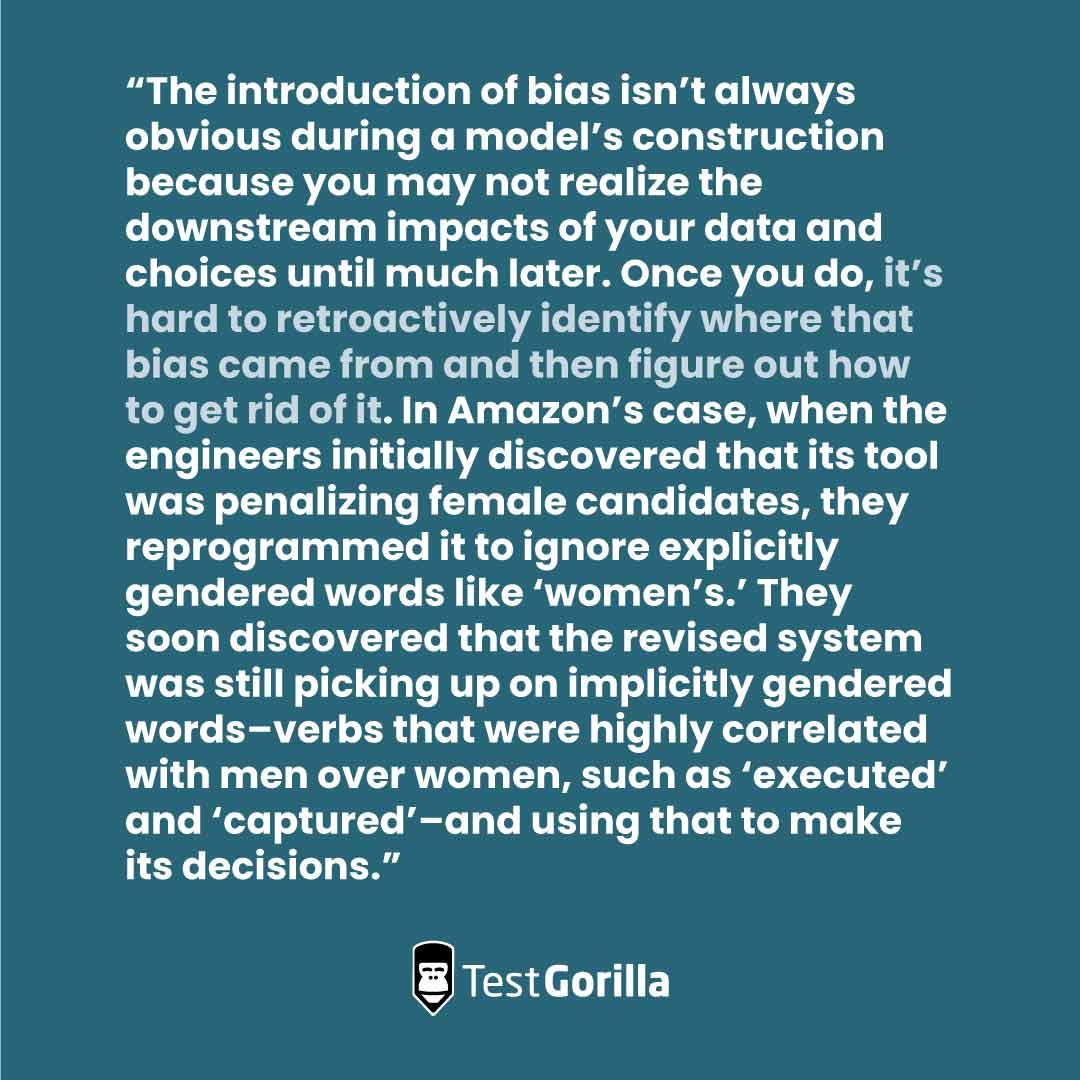

Researchers from MIT point out that AI doesn't necessarily eliminate bias and that, once bias is built into an algorithm, it can be difficult to get rid of.

That’s why, at TestGorilla, we’ve decided to go down a non-AI route when designing our pre-employment tests.

Subject matter experts with extensive backgrounds, experience, and education in the relevant field design our online assessments.

For example, our Financial Due Diligence test was created by a chartered accountant with more than 15 years of experience, an MBA, and a diverse background, including university lecturing (learn more about the science behind our skills-based tests here).

Of course, this doesn’t mean that AI has no place in hiring. It can be useful for scoring pre-employment tests or sending automated updates.

Discover how to use AI and automation to the best effect in our guide: With great power comes great responsibility: How to automate recruitment wisely.

Human unconscious bias can bleed into test design

So much for unbiased tests from our machine friends. What about human-made assessments? If humans can make biased decisions unconsciously, can we unknowingly create biased tests?

The simple answer is yes; human unconscious biases can carry over into test design.

If the assessment creators aren't experts in the field or don't consider cultural and linguistic norms and differences, their biases can affect the test.

Take, for example, the idiom “comparing apples with oranges.” The phrase is commonly used among native English speakers but could be a bewildering statement to non-native speakers, even if their English is otherwise proficient.

Even small details like this can have a biasing effect.

In this instance, a non-native applicant who had trouble understanding the phrase could fare worse on a test than someone familiar with the idiom.

Testing knowledge of such a phrase would be legitimate in a colloquial English proficiency test, but in the context of a coding test, it would be a form of bias.

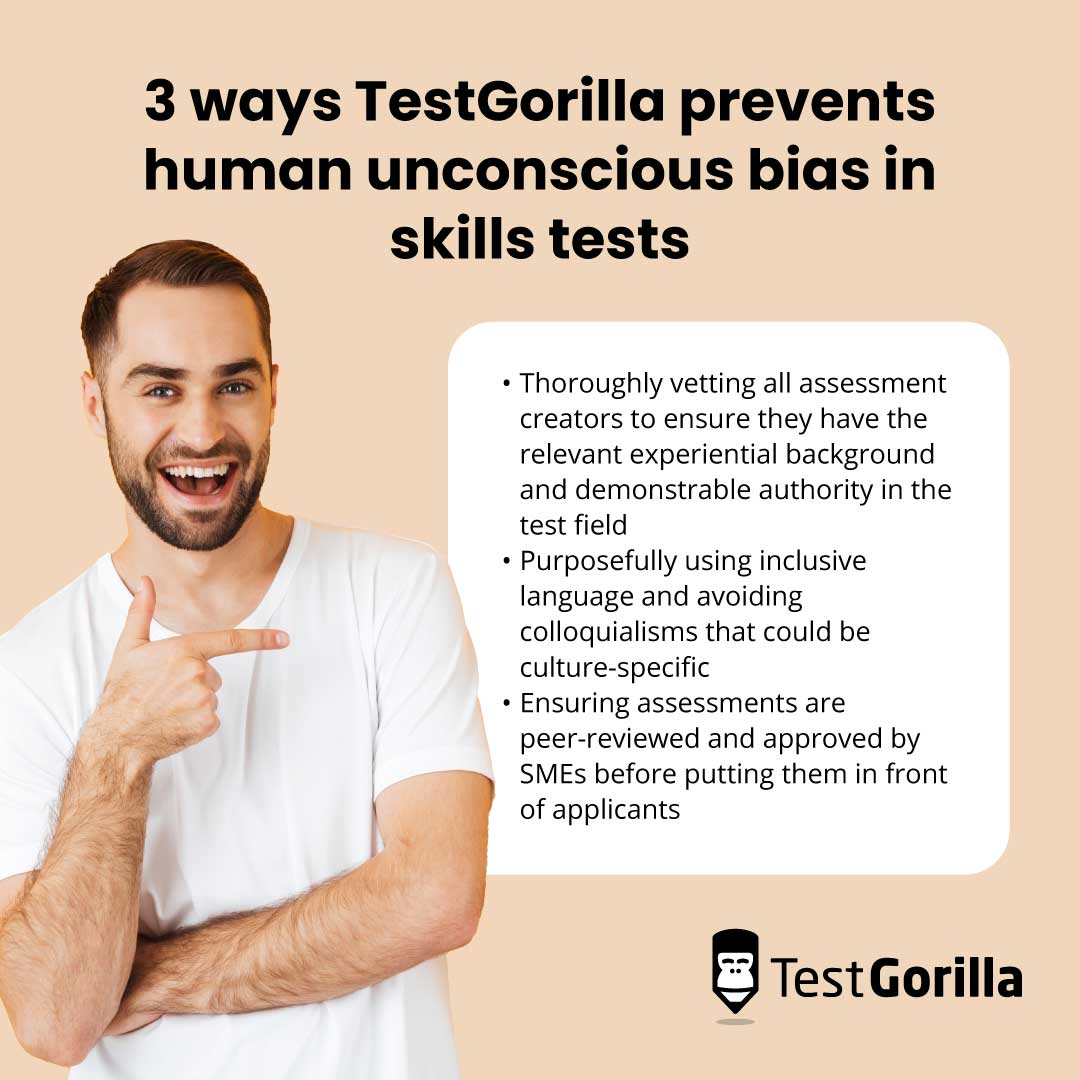

At TestGorilla, we prevent such biases from arising by:

Thoroughly vetting all assessment creators to ensure they have the relevant experiential background and demonstrable authority in the test field

Purposefully using inclusive language and avoiding colloquialisms that could be culture-specific

Ensuring assessments are peer-reviewed and approved by subject matter experts (SMEs) before putting them in front of applicants

Generalized tests may not be valid for everyone

Recruiters widely use generalized psychological and cognitive ability tests, such as general mental ability (GMA) assessments.

However, as SHRM notes, some hiring managers take issue with such tests, claiming they can produce adverse effects for certain groups of candidates.[2]

This area is the subject of ongoing debate.

The main criticism against GMA and other generalized cognitive tests is that they have been developed primarily within the context of WEIRD (Western, educated, industrialized, rich, democratic) societies and may not universally apply to other cultures.

Some researchers note that “research shows that the tools we have to detect bias in hiring assessments may not be sufficiently sensitive.”[2]

However, many studies have attempted to confirm the criticism but have found insufficient evidence. For instance, a 2014 study by Roth et al. suggests “the concept of differential validity may be largely artifactual and current data are not definitive enough to suggest such effects exist.”[3]

As the jury is still out on this subject, the best approach is to consider all the points of view.

Use generalized cognitive ability tests when they're relevant to your hiring process, but make sure you choose an assessment provider that uses only the most predictively valid, research-backed test designs.

You should also use such tests alongside more direct, hard-skills tests to gain a more holistic and well-rounded understanding of an applicant’s suitability for a given role.

Test result analysis is still subject to interpretation

Lastly, we must consider that tests themselves do not exist in a vacuum.

A human interprets the results of any test, meaning unconscious bias on the part of that individual could still find its way into this rather delicate portion of the testing process.

Consider the well-known field experiment by economists from the University of Chicago and MIT, which found that fictitious applicants with traditionally White-sounding names (like Greg and Emily) were systematically favored over applicants with traditionally Black-sounding names (such as Lakisha and Jamal), all other factors being equal.

Whether or not these employers were intentionally weeding out Black applicants (we’ll err on the side of caution and chalk this up to an unconscious bias, something all of us are prone to), this is a stark finding.

It shows that even after a carefully designed pre-employment screening and assessment process that uses culturally sensitive test design to eliminate bias, systemic bias can still creep into the final selection of candidates.

To overcome such unconscious biases, we recommend keeping the hiring process as anonymous as possible until the final moment a candidate is selected.

That means removing names and other personal identifiers during selection and making decisions purely on the applicant’s test results and relevant academic and professional background.
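As a minimal sketch of what that could look like when candidate data lives in a simple export (for example, a spreadsheet or ATS download), the Python below strips identifying fields, replaces each candidate with an opaque ID, and keeps the mapping back to the full record in a separate key that reviewers only consult once a decision has been made. The field names (`name`, `email`, `css_score`, `years_experience`, and so on) and the `anonymize` and `shortlist` helpers are hypothetical illustrations, not part of TestGorilla or any particular ATS.

```python
# Hypothetical field names: adjust to whatever your own candidate export uses.
PERSONAL_FIELDS = {"name", "email", "phone", "photo_url", "date_of_birth", "gender", "address"}


def anonymize(candidates):
    """Strip personal identifiers and assign opaque IDs.

    Returns the blind records reviewers see, plus a separate key that maps
    each opaque ID back to the original record. The key should stay away
    from reviewers until a candidate has been selected.
    """
    blind_records = []
    key = {}
    for index, candidate in enumerate(candidates, start=1):
        blind_id = f"C{index:03d}"
        key[blind_id] = candidate
        # Keep only job-relevant fields such as test scores and experience.
        redacted = {field: value for field, value in candidate.items()
                    if field not in PERSONAL_FIELDS}
        blind_records.append({"id": blind_id, **redacted})
    return blind_records, key


def shortlist(blind_records, score_field, top_n):
    """Rank blind records by a test score and return the top_n of them."""
    return sorted(blind_records, key=lambda r: r[score_field], reverse=True)[:top_n]


if __name__ == "__main__":
    candidates = [
        {"name": "Alex", "email": "alex@example.com", "css_score": 82, "years_experience": 4},
        {"name": "Sam", "email": "sam@example.com", "css_score": 91, "years_experience": 5},
        {"name": "Riley", "email": "riley@example.com", "css_score": 77, "years_experience": 6},
    ]
    blind, key = anonymize(candidates)
    top = shortlist(blind, "css_score", top_n=2)
    print([r["id"] for r in top])  # ['C002', 'C001']
    # Identity is looked up only after the final decision is made.
    selected_id = top[0]["id"]
    print(key[selected_id]["name"])  # Sam
```

Keep in mind that free-text fields such as a resume or cover letter can still reveal identity, so in practice they would need their own redaction step before reaching reviewers.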

Dive deeper into hiring anonymization with our guide: How do anonymous applications fit into skills-based hiring?

Overcoming bias in the hiring process

So, can skills-based tests still be biased?

Yes, they can, but that doesn’t mean you should throw the proverbial baby out with the bath water.

It simply means that when designing tests, we must pay careful attention to how unconscious biases can creep into assessments so that we aren’t simply replicating the same issues that crop up in more subjective assessment formats like interviews.

Do that, and you’ve got a powerful tool for driving a more efficient and fairer hiring process.

Myth busted.

Speaking of myths, get the lowdown on other recruitment myths here: 5 myths about skills-based hiring busted.

If skills-based tests have piqued your curiosity, you can use our extensive test library to get a better feel for them.

Sources

[1] Dastin, Jeffrey. (October 10, 2018). "Amazon scraps secret AI recruiting tool that showed bias against women." Reuters. Retrieved May 28, 2023.

[2] Minton-Eversole, Theresa. (December 1, 2010). "Avoiding Bias in Pre-Employment Testing." SHRM. Retrieved May 28, 2023.

[3] Roth, Philip L. et al. (September 30, 2013). "Differential validity for cognitive ability tests in employment and educational settings: not much more than range restriction?" National Library of Medicine. Retrieved May 29, 2023.
