Understanding construct validity: Examples and FAQ

Use skills tests with excellent construct validity

Skills tests are an excellent way to measure candidates’ skills and traits before hiring them. However, you need to ensure that the tools you use are accurate. Construct validity is one type of validity that helps you determine if your tests are measuring what you intend them to measure.

In this article, we examine the construct validity definition, explore construct validity examples, discuss how TestGorilla evaluates the construct validity of our tests, and explain the importance of using valid tests.

Table of contents

- What is a construct?

- What is construct validity?

- Evidence of construct validity: Convergent, discriminant, and factorial validity

- Threats to construct validity

- How to measure construct validity

- At TestGorilla, we evaluate the construct validity of our tests

- Avoid bad hires with valid skills tests

- Construct validity FAQs

What is a construct?

A construct is an abstract concept or quality a specific test aims to measure, such as cognitive ability or leadership ability. It represents complex characteristics you can’t directly observe but can assess through well-designed tests.

For example, think of a theoretical construct as being like brand reputation. You can’t see reputation directly, but you can measure it through customer reviews, social media interactions, and feedback surveys.

Similarly, you can’t directly observe leadership ability but can evaluate it through specific questions and scenarios in an assessment.

What is construct validity?

Construct validity is the extent to which a test accurately measures the construct it aims to evaluate. This psychometric property ensures that the test results reflect the assessed construct, making the test meaningful for hiring decisions.

Just like a scale should accurately measure your weight, tests should accurately evaluate the specific concept they’re designed to, like leadership ability.

Considering that 82% of companies use some form of pre-employment testing in their hiring process, these assessments must be accurate.

Construct validity is one of the four types of validity that TestGorilla considers when developing our tests, alongside face validity, criterion validity, and content validity.


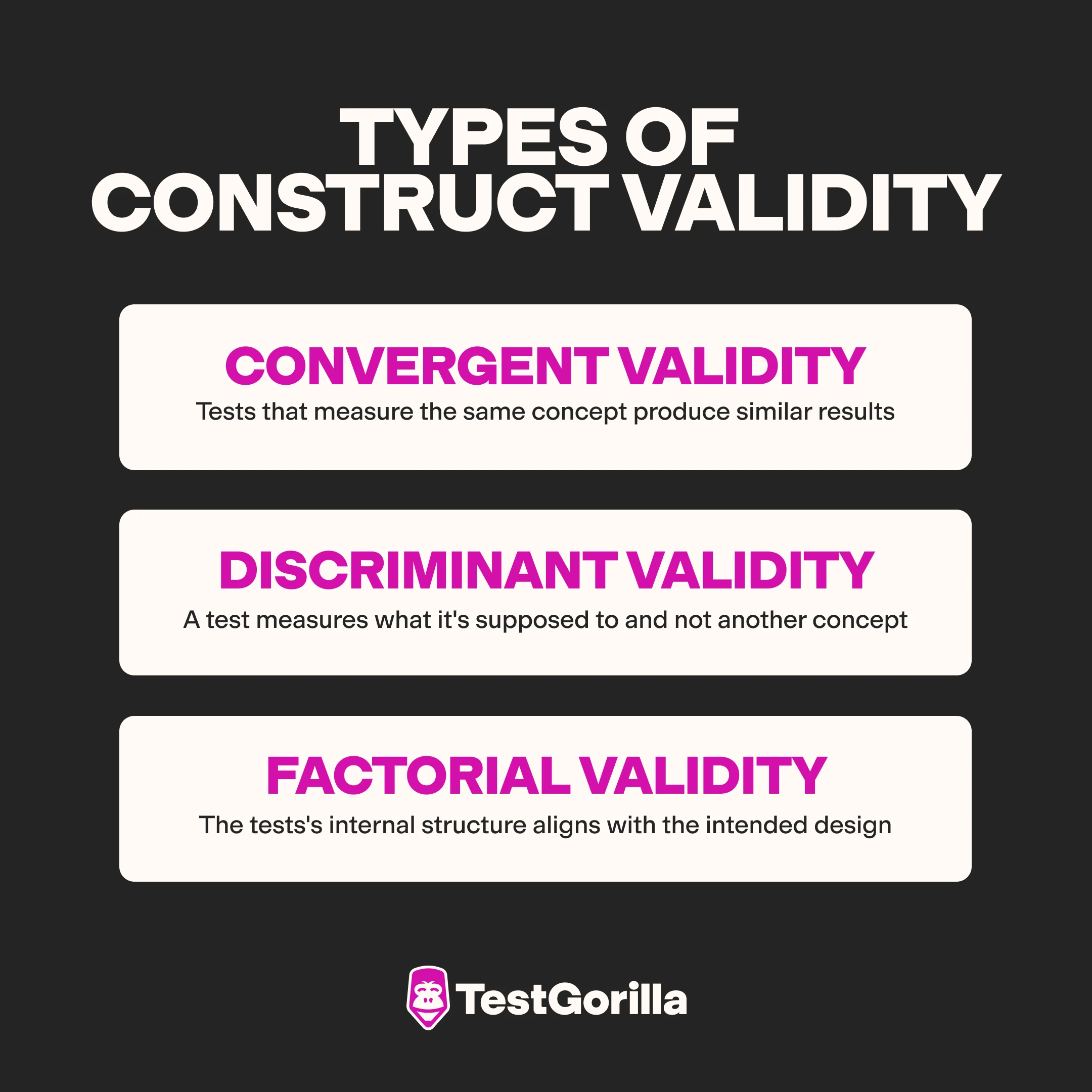

Evidence of construct validity: Convergent, discriminant, and factorial validity

While there are many ways to assess construct validity, the most relevant for skills tests are convergent validity, discriminant validity, and factorial validity. If established, these provide evidence that your test demonstrates construct validity. Understanding these types is crucial for designing, selecting, or evaluating talent assessments.

Let’s discuss these three facets of construct validity further.

1. Convergent validity

Convergent validity refers to the degree to which a test correlates with other tests of the same construct. In other words, if different tests designed to measure the same concept produce similar results, they have good convergent validity.

Think of it as weighing yourself on two different scales. If they're working correctly, they should show you the same weight.

Convergent validity is important because it ensures that your assessment is consistent with other proven measures, which is crucial for making sound hiring decisions.

2. Discriminant validity

Discriminant validity refers to the degree to which a test doesn’t correlate with measures of different, unrelated constructs. Essentially, it shows that your test evaluates what it should and not something else.

For example, a leadership assessment should measure leadership abilities rather than something unrelated, like self-esteem.

Having a test with good discriminant validity prevents overlap with unrelated qualities and improves the precision of your hiring decisions.

Keep in mind that some tests can have good convergent validity but poor discriminant validity, and vice versa.
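To make convergent and discriminant validity concrete, here is a minimal Python sketch. It is an illustration only: the scores for eight candidates, the three measures, and all variable names are invented for this example. A high Pearson correlation between the new test and an established test of the same construct would point toward convergent validity, while a correlation near zero with an unrelated measure would point toward discriminant validity.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

# Hypothetical scores for the same eight candidates (invented for illustration).
new_leadership_test = [62, 71, 55, 88, 93, 47, 76, 81]
established_leadership_test = [60, 75, 52, 85, 90, 50, 70, 84]
self_esteem_scale = [70, 52, 66, 61, 68, 63, 72, 55]

# Same construct: we hope for a strong positive correlation.
convergent_r = pearson_r(new_leadership_test, established_leadership_test)
# Unrelated construct: we hope for a correlation close to zero.
discriminant_r = pearson_r(new_leadership_test, self_esteem_scale)

print(f"convergent r = {convergent_r:.2f}")
print(f"discriminant r = {discriminant_r:.2f}")
```

With real data you would need a much larger sample than eight people, and what counts as "high" or "low" depends on the constructs being compared.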

3. Factorial validity

Factorial validity refers to the degree to which the structure of a test matches the intended structure. Essentially, it ensures that the test items group together as intended, reflecting the underlying construct accurately.

For example, if you have a leadership test measuring the different aspects of communication, decision-making, and motivation, assessing factorial validity involves checking that the questions related to those topics all align correctly within their respective categories.

Ensuring factorial validity means you can confidently evaluate the specific aspects of your candidate’s abilities as intended.
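Factorial validity is usually examined with factor analysis, which needs specialist tooling and large samples. As a rough stand-in, the sketch below checks a simpler pattern: each question should correlate more strongly with the other questions in its own category than with the other categories. The ratings, item names, and the `misplaced_items` helper are all hypothetical, and this check is a simplification, not a substitute for a full factor analysis.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

# Hypothetical 1-5 ratings from eight candidates, two questions per category.
items = {
    "communication": {
        "comm_1": [1, 1, 2, 2, 4, 4, 5, 5],
        "comm_2": [2, 1, 2, 3, 3, 4, 5, 4],
    },
    "decision_making": {
        "dec_1": [1, 5, 2, 4, 1, 5, 2, 4],
        "dec_2": [2, 5, 2, 3, 1, 4, 3, 4],
    },
    "motivation": {
        "mot_1": [1, 2, 5, 4, 5, 4, 1, 2],
        "mot_2": [1, 3, 5, 4, 4, 4, 1, 2],
    },
}

def subscale_score(item_scores, exclude=None):
    """Sum each candidate's answers across a category, optionally skipping one item."""
    kept = [scores for name, scores in item_scores.items() if name != exclude]
    return [sum(values) for values in zip(*kept)]

def misplaced_items(items):
    """Return items that do not correlate most strongly with their own category."""
    flagged = []
    for subscale, item_scores in items.items():
        for name, scores in item_scores.items():
            # Correlation with the rest of the item's own category.
            own_r = pearson_r(scores, subscale_score(item_scores, exclude=name))
            # Absolute correlations with every other category's total.
            other_rs = [
                abs(pearson_r(scores, subscale_score(other_items)))
                for other, other_items in items.items()
                if other != subscale
            ]
            if own_r <= max(other_rs):
                flagged.append(name)
    return flagged

print(misplaced_items(items))
```

An empty list means every question lines up with its intended category in this invented data set. A flagged question would be a candidate for review, not automatic removal.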

Threats to construct validity

According to research, validity is the most important consideration when developing or evaluating test methodologies.

Certain threats to construct validity can undermine a test’s effectiveness, leading to unreliable results. That’s why it’s important to understand these threats and how they affect talent assessments.

| Threat | Explanation | Example |
| --- | --- | --- |
| Construct underrepresentation | Occurs when the test doesn’t fully capture all aspects of the intended construct, leading to incomplete assessment | A leadership test that only measures decision-making skills but ignores other important abilities like effective communication |
| Construct-irrelevant variance | Happens when separate but related constructs influence the test results, causing inaccurate measurement of the intended construct | A math test influenced by the candidate’s reading ability or test anxiety |
| Confounding variables | Variables that unintentionally but systematically affect the results, making it difficult to determine if the construct is measured correctly | Taking a time management test in a noisy test environment, which influences the outcome |
| Test bias | When the test unfairly gives an advantage or disadvantage to certain groups, leading to results that don’t reflect the construct | A cognitive ability test that is biased against non-native English speakers, resulting in lower test scores |
| Response bias | Occurs when respondents answer in a way they think is expected or socially desirable rather than truthfully | A personality test where candidates pick the answer more likely to get them the job, like claiming to enjoy micromanaging |


How to measure construct validity

If you want to ensure your talent assessments accurately measure your construct of interest, you need to measure their construct validity. Here are a few ways to measure the construct validity of your skills tests.

1. Define the term you aim to measure

Different people can interpret the construct you want to evaluate in various ways. Therefore, it’s crucial to define a new measure or construct clearly to confirm that you and the test provider share the same understanding.

A precise construct definition helps avoid ambiguity and ensures the assessment focuses on the specific traits or skills you want to evaluate.

Here’s how to define the concept you want to measure:

Consult subject matter experts: Gather insights from experts to ensure your definition is comprehensive and accurate.

Review existing research: Look at validated assessments and research to understand how similar constructs are defined and measured.

Identify specific traits: List the specific traits, behaviors, or skills that make up the construct.

Avoid vague terms: Ensure the definition is clear and easy to understand for everyone.

Align with objectives: Confirm the construct aligns with the specific needs and objectives of your organization.

Test your definition: Use a small group to check for understanding and refine it based on feedback.

2. Provide evidence that your test evaluates what you intend it to

The next step is to provide evidence of why your questions relate to the concept you defined and discuss why you believe the test takers’ answers represent their capabilities in a specific area.

For example, if you want to evaluate a candidate’s communication skills, you should clearly define what communication skills are and explain how the test’s questions and potential answers are designed to measure them.

Without this evidence, your assessment’s results could be inaccurate and ineffective for making informed decisions.

Here are a few actionable tips on how to provide evidence that your test evaluates what you intend it to:

Link questions to constructs: Ensure each question is explicitly linked to a specific aspect of the construct you aim to measure.

Conduct construct validation studies: Compare the test’s scores with evaluations by subject-matter experts to verify accuracy. For example, you can compare scores on a communication skills test with subject-matter experts’ ratings of someone’s communication skills.

Perform pilot studies: Use a small group of participants to gather initial data, which can help you refine the questions.

Refine questions with data: Analyze pilot study data to help uncover errors, poor items, or other issues that may have been overlooked in the initial development phase.

Involve subject-matter experts: Have experts review the questions and provide feedback on their relevance.

3. Provide evidence that your test relates to other similar tests

To ensure the validity of your skills test, you must demonstrate its effectiveness by comparing it with other established tests. This step is crucial for confirming its wider applicability and effectiveness.

For example, if you are assessing communication abilities, you might define these skills as how well a person uses verbal and written communication to support productivity and effectiveness in the workplace.

Here’s how to provide evidence that your test relates to other similar tests:

Identify similar tests: Find established tests that evaluate similar constructs, such as verbal and written communication skills in the workplace.

Compare results: Analyze the trends in your data and check for similarities with the other tests. Look for high positive correlations in the results to establish convergent validity.

Conduct additional validation studies: Administer your test and an established test to the same group of participants. Consistent results across both tests provide additional evidence of construct validity.

4. Deploy and refine your test

Regular testing and refinement help maintain the quality of your tests, ensuring they provide sound results over time. This ongoing process helps you quickly identify and address any issues that may affect your tests’ validity.

Here are some ways to refine your tests:

Gather feedback: Ask subject-matter experts to provide feedback to understand where the test falls short.

Use statistical methods: Evaluate the test’s validity through statistical analysis.

Refine test items: Based on data analysis and feedback, refine the test items to improve clarity, relevance, and design.

Remove or revise questions: Eliminate or revise any questions that don’t contribute to measuring the construct.

Repeat the process: Continuously test and refine the assessment.

Document changes: Keep detailed records of all changes made during the refinement process to inform future adjustments.
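One statistical check that can support the refinement steps above is the corrected item-total correlation: each question is correlated with the total of all the other questions, and questions with a low value are reviewed. The Python sketch below shows the idea. The responses are invented, the question names are hypothetical, and the 0.3 cutoff is only an assumed rule of thumb, not a fixed standard.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

# Hypothetical 1-5 scores from eight candidates on a four-question test.
responses = {
    "q1": [1, 1, 2, 2, 4, 4, 5, 5],
    "q2": [2, 1, 2, 3, 3, 4, 5, 4],
    "q3": [1, 2, 2, 1, 4, 5, 4, 5],
    "q4": [1, 5, 2, 4, 1, 5, 2, 4],
}

def corrected_item_total(responses):
    """Correlate each question with the total of all *other* questions."""
    result = {}
    for name, scores in responses.items():
        others = (s for n, s in responses.items() if n != name)
        rest_total = [sum(values) for values in zip(*others)]
        result[name] = pearson_r(scores, rest_total)
    return result

REVIEW_CUTOFF = 0.3  # assumed rule of thumb; choose a cutoff that suits your test

item_total = corrected_item_total(responses)
flagged = [name for name, r in item_total.items() if r < REVIEW_CUTOFF]

for name, r in item_total.items():
    print(f"{name}: r = {r:.2f}")
print("review:", flagged)
```

In this invented data set only `q4` falls below the cutoff, which makes it a candidate for the "remove or revise" step. The final decision should still involve subject-matter experts, because a low correlation can also mean the question covers a part of the construct that the other questions miss.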

At TestGorilla, we evaluate the construct validity of our tests

Valid talent assessments help you identify the best candidates based on their skills and potential rather than relying solely on resumes. This approach benefits you and the candidate, leading to better-quality hires and higher performance.

In fact, 94% of employers using talent assessments and skills-based hiring believe this approach is more effective at identifying talent than using resumes.

Why is that?

Skills-based hiring offers several advantages over traditional resume screening:

Objective evaluation: Talent assessments provide objective data on candidates’ skills, which reduces biases that can occur during resume reviews

Better person-organization fit: By focusing on actual skills and traits, you confirm candidates are well-suited for the job, which leads to higher satisfaction and reduced turnover

Improved efficiency: Automated assessments streamline the hiring process, saving time and resources and securing high-quality hires

Take a look at Click&Boat, for example. This boat-renting platform turned to TestGorilla to hire higher-quality customer support agents more quickly. The role requires a unique mix of cognitive thinking, technical abilities, and soft skills.

Thanks to our talent assessments with high construct validity, the company easily recruited top-quality candidates. To make things even better, employees hired with our skills tests are 30% more productive than the rest of the team.

How do we ensure construct validity at TestGorilla?

But how do we ensure our tests are valid? Each test in our library is assessed through a multistep process that includes rigorous evaluations by subject-matter experts and evidence from test data.

We keep construct validity in mind from the beginning of our test development process, when our Assessment team works collaboratively with experienced subject matter experts to clearly define the skills, knowledge, or competencies that a test measures. Then, these tests are peer-reviewed before publication.

Later on, we collect data from a diverse sample of individuals who take the test, and our team of organizational psychologists, psychometricians, and data scientists conduct statistical analyses to examine the quality of our tests.

From this data, we establish both convergent and discriminant validity by examining the patterns of correlations between our tests. Correlations range from -1 to 1: values closer to 1 indicate a strong positive correlation, values closer to -1 indicate a strong negative correlation, and values closer to 0 indicate a weak correlation.

Let’s take a look at our Communication test, which demonstrates strong correlations with related tests like English B1 (r = .66), Understanding Instructions (r = .47), and Verbal Reasoning (r = .47), indicating convergent validity. In contrast, the Communication test shows weaker correlations with unrelated skills like HTML5 (r = .24), Basic Double-Digit Math (r = .23), and Business Judgment (r = .22), demonstrating discriminant validity.

TestGorilla’s team of Intellectual Property Development Specialists, psychometricians, and data scientists meticulously evaluate and report this validity evidence in test fact sheets.
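The pattern in the Communication test correlations reported above can be summarized in a few lines of Python. This is only an illustrative sketch of how a reader might compare the two groups of correlations, not a description of TestGorilla's analysis. The correlation values come from this article; the grouping variables and the summary statistics are our own assumptions. Squaring a correlation gives the proportion of variance the two tests share.

```python
# Correlations with the Communication test, as reported in this article.
related = {
    "English B1": 0.66,
    "Understanding Instructions": 0.47,
    "Verbal Reasoning": 0.47,
}
unrelated = {
    "HTML5": 0.24,
    "Basic Double-Digit Math": 0.23,
    "Business Judgment": 0.22,
}

def mean(values):
    """Arithmetic mean of an iterable of numbers."""
    values = list(values)
    return sum(values) / len(values)

mean_related = mean(related.values())
mean_unrelated = mean(unrelated.values())

# The expected pattern: every related test correlates more strongly
# than every unrelated test.
pattern_holds = min(related.values()) > max(unrelated.values())

# r squared is the share of variance two tests have in common.
shared_variance = {name: round(r ** 2, 2) for name, r in {**related, **unrelated}.items()}

print(f"mean related r = {mean_related:.2f}")
print(f"mean unrelated r = {mean_unrelated:.2f}")
print("pattern holds:", pattern_holds)
print(shared_variance)
```

Here the weakest related correlation (.47) is still well above the strongest unrelated one (.24), which is the separation you would hope to see between convergent and discriminant evidence.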


Avoid bad hires with valid skills tests

Establishing the construct validity and reliability of your skills tests can help you avoid bad hires and have less bias in your recruitment process.

The best approach is to search for credible talent discovery platforms like TestGorilla, which offers hundreds of tests with high validity. We do all the heavy lifting of designing, testing, refining, and optimizing tests.

All you have to do is sign up for a free forever plan and start using our talent assessments to hire better candidates.

You can also take a look at our product tour to discover how you can reinvent your recruitment process, or book a live demo to learn more about our talent assessments and how you can personalize them to best fit your hiring needs.

Construct validity FAQs

In this section, you can find answers to the most common questions about construct validity.

How do you determine if a test has strong construct validity?

Construct validity is the extent to which a test measures the concept it intends to assess. Determining it involves gathering evidence that the test aligns with theoretical expectations and correlates with other measures of the same construct. Key approaches to establishing construct validity include expert evaluations, factor analysis, structural equation modeling, and correlational studies with established tests. Consistent evidence across these methods supports strong construct validity.

What is face validity vs. construct validity?

Face validity and construct validity are two different methods for evaluating a test. Face validity assesses the extent to which a test appears reasonable and appropriate to those who take it, essentially determining if the test seems to measure what it claims at a surface level. It’s like judging a book by its cover.

Construct validity, on the other hand, involves a more in-depth look to see if the test truly measures what it claims to. It’s like reading the book and verifying that the content aligns with the title.

What is construct validity vs. content validity?

Construct validity measures how well a test reflects the theoretical concept it aims to assess, while content validity evaluates whether the test comprehensively covers the entire concept. Content validity focuses on the extent to which test items represent the construct in all its dimensions. You might wonder whether content validity or construct validity is more important, but both are essential for creating effective assessments.

What is an example of good construct validity?

A good example of construct validity is a test that accurately measures mathematical reasoning skills, correlates highly with other established math tests, and predicts performance in math-related tasks. For example, if students who score well on this test also excel in math courses and standardized math exams, it shows strong construct validity. Additionally, if the test items group together as intended and do not correlate with unrelated constructs, such as verbal reasoning skills, it further indicates that the test accurately measures mathematical reasoning.

Science series materials are brought to you by TestGorilla’s team of assessment experts: a group of IO psychologists, data scientists, psychometricians, and IP development specialists with a deep understanding of the science behind skills-based hiring.
