Understanding gender Differential Item Functioning (DIF) in test analysis

Gender bias is everywhere. At TestGorilla, we recognize that removing barriers for women within the labor market is vital if we want to create a fairer world. But what does that mean in the context of pre-employment testing, and what are we doing to ensure our tests don’t discriminate on the basis of gender?

This blog post explores the concept of Differential Item Functioning (DIF) and its role in ensuring fairness in testing. We will also discuss the results of DIF analysis of TestGorilla tests and how they can help in creating more equitable assessment methods.

Table of contents

- Gender bias persists across the labor market

- … And fair pre-employment tests are critical to combatting it

- What is Differential Item Functioning (DIF)?

- An example of an item with DIF

- What are the possible sources of DIF?

- How do we approach gender DIF at TestGorilla?

- The results of our most recent gender DIF analysis

- Workplace equality starts with fair hiring practices

Gender bias persists across the labor market

Gender bias remains pervasive in various domains, including employment and testing. Despite recent progress, such as a narrowing gender wage gap and an increase in the share of women on boards of the largest publicly listed companies, significant disparities persist, with women facing barriers in the labor market.

The comprehensive study "Women in the Workplace" by McKinsey & Company and LeanIn.org shows that over the past nine years, women – especially women of color – have remained underrepresented across the corporate pipeline.

… And fair pre-employment tests are critical to combatting it

These disparities are both social and economic concerns. According to the World Bank, GDP per capita would be almost 20% higher if all gender employment gaps were closed.

Addressing these issues is crucial for social justice and economic growth. One critical step in combating gender bias in employment is through fair and unbiased pre-employment assessments.

Even though we cannot entirely eliminate the disparities in test-taking populations rooted in long-standing systemic inequality (Bohren, Hull, & Imas, 2022), we have the ability and responsibility to use statistical and psychometric techniques to identify and remove problematic test items. One effective tool for this purpose is Differential Item Functioning (DIF) analysis.

What is Differential Item Functioning (DIF)?

Fairness in testing is a complex concept, encompassing both social and technical dimensions. While fairness is a broad social concept, bias is a specific technical issue in psychometric testing. For a test to be fair, it must be free from bias, meaning it should not systematically disadvantage any particular group.

To achieve such fairness in testing, it is crucial to ensure that every test item accurately and equitably measures the intended construct across all groups of test-takers. This is where Differential Item Functioning (DIF) analysis, a statistical technique, becomes essential. It is used to evaluate whether individual test items function differently for distinct groups, even when those groups possess equivalent levels of the ability being measured.

To better understand DIF, consider the following analogy. Imagine two individuals, a man and a woman, who are exactly the same height. If a ruler is used to measure their heights, the expectation is that the ruler will yield the same measurement for both. However, if the ruler indicates that the man is taller than the woman, despite their identical height, this would suggest a bias in the measurement tool. Similarly, in testing, DIF analysis examines whether a particular question (analogous to the ruler) unfairly favors one group over another, even when both groups exhibit the same ability level.

DIF analysis is a sophisticated and reliable method for identifying biased test items. Rather than simply removing questions that show large differences in average scores between groups, DIF analysis compares how different groups (such as male and female test-takers) perform on each test item. It does this while taking overall test performance into account, so that any observed differences reflect bias in the item and not just varying skill levels. DIF analysis has been effectively used to detect biased items and is recommended for high-stakes assessments (Martinková et al., 2017).
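To make the idea of comparing groups at the same overall performance more concrete, here is a minimal sketch of one widely used DIF statistic, the Mantel-Haenszel procedure. It is an illustration only: this post does not state which DIF method TestGorilla uses, and the function names, the example data, and the simplified size categories are assumptions. The categories follow the magnitude thresholds of the ETS delta scale and leave out the significance tests that the full rules include.

```python
import math
from collections import defaultdict


def mantel_haenszel_dif(responses):
    """Estimate DIF for one item with the Mantel-Haenszel common odds ratio.

    responses: iterable of (group, total_score, correct) tuples, where group
    is "ref" or "focal" and correct is 1 or 0 for the studied item.
    Returns (alpha, delta): the common odds ratio and its ETS delta rescaling.
    """
    # One 2x2 table per total-score stratum:
    # [ref correct, ref incorrect, focal correct, focal incorrect]
    strata = defaultdict(lambda: [0, 0, 0, 0])
    for group, score, correct in responses:
        idx = (0 if group == "ref" else 2) + (0 if correct else 1)
        strata[score][idx] += 1

    num = den = 0.0
    for a, b, c, d in strata.values():
        n = a + b + c + d
        num += a * d / n  # reference correct x focal incorrect
        den += b * c / n  # reference incorrect x focal correct
    if num == 0 or den == 0:
        raise ValueError("not enough mixed responses to estimate the odds ratio")

    alpha = num / den                # 1.0 means no DIF
    delta = -2.35 * math.log(alpha)  # negative values favor the reference group
    return alpha, delta


def ets_category(delta):
    """Simplified size label (assumption: magnitude only, no significance test)."""
    size = abs(delta)
    if size < 1.0:
        return "A"  # negligible
    if size < 1.5:
        return "B"  # moderate
    return "C"      # large


def make_rows(group, score, n_right, n_wrong):
    """Hypothetical helper that expands counts into individual responses."""
    return [(group, score, 1)] * n_right + [(group, score, 0)] * n_wrong


# Made-up data: two total-score strata, with groups matched within each stratum.
biased = (make_rows("ref", 1, 30, 10) + make_rows("focal", 1, 20, 20)
          + make_rows("ref", 2, 40, 10) + make_rows("focal", 2, 30, 20))
fair = (make_rows("ref", 1, 30, 10) + make_rows("focal", 1, 30, 10)
        + make_rows("ref", 2, 40, 10) + make_rows("focal", 2, 40, 10))

for name, data in [("biased item", biased), ("fair item", fair)]:
    alpha, delta = mantel_haenszel_dif(data)
    print(name, round(alpha, 2), round(delta, 2), ets_category(delta))
```

In the made-up "biased" data, the reference group answers correctly more often than the focal group within every score stratum, so the item lands in the large category. In the "fair" data, the two groups perform identically once matched on total score, so the odds ratio is 1 and the item is labeled negligible.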

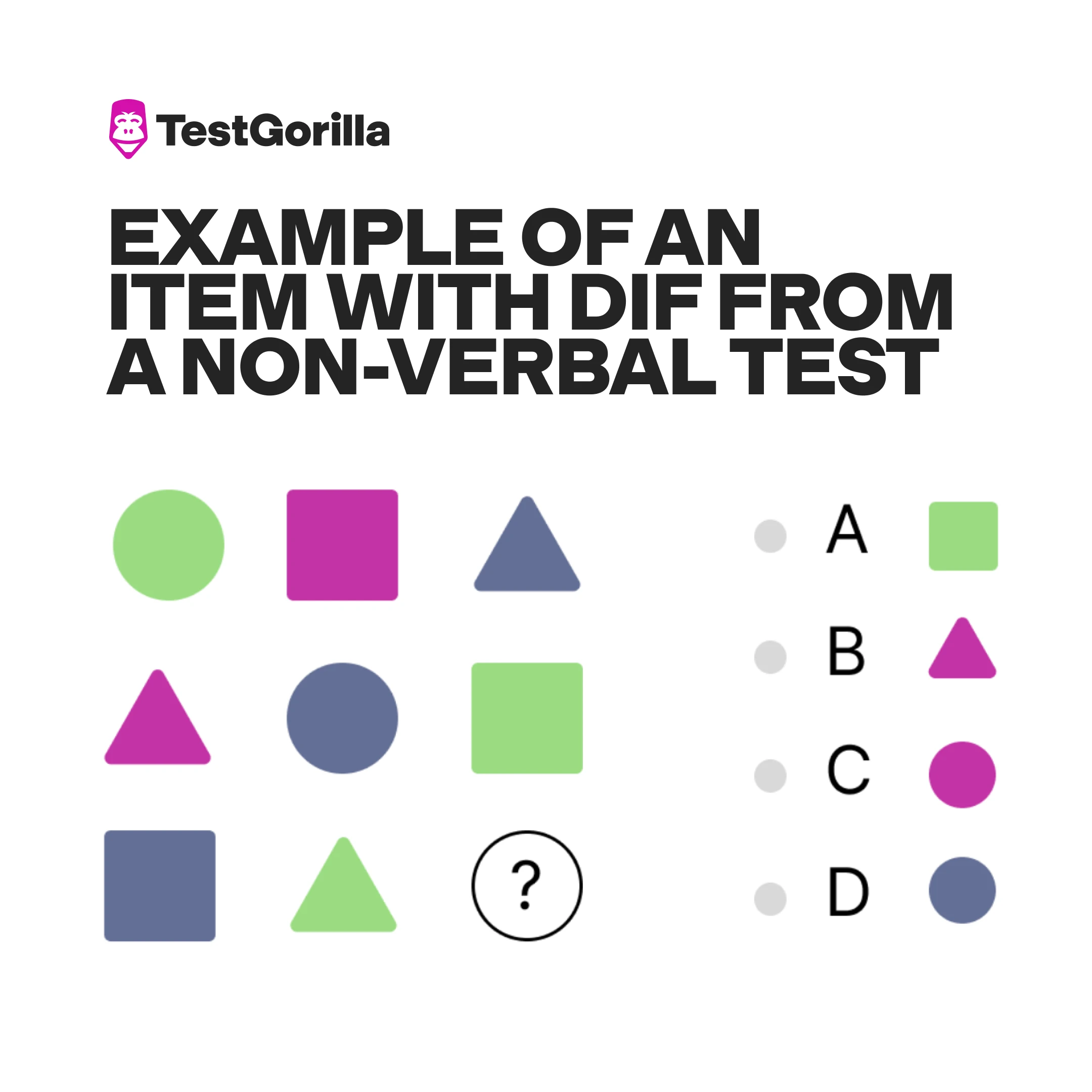

An example of an item with DIF

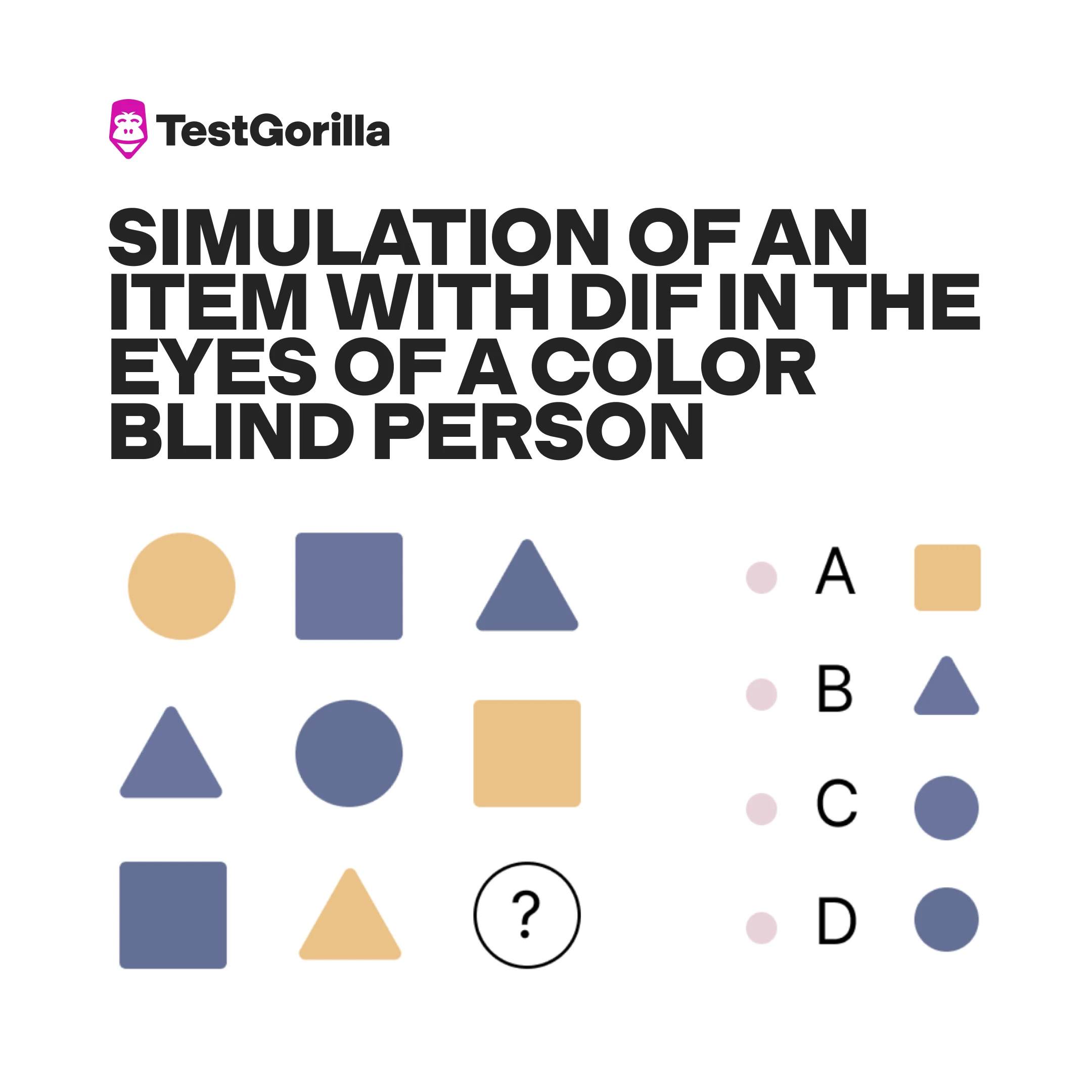

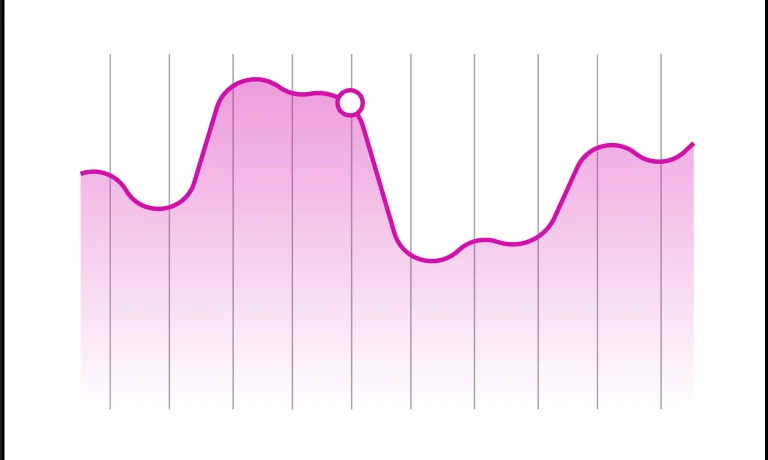

Below is a simple example of an item from a non-verbal test. The participant needs to find a sequence and choose the correct figure (pink circle). However, this item could be a potential source of gender DIF.

This item might be unfair to males because color blindness is far more common among them: approximately 1 in 12 males (roughly 8% of Caucasian males) is colorblind, compared with only 1 in 200 females. One common type of color blindness makes it difficult to distinguish between certain shades of red and green. Below is a simulation of how a colorblind person might see this item, showing how hard it can be to tell the pink and blue figures apart.

What are the possible sources of DIF?

Identifying the sources of gender-based Differential Item Functioning (DIF) is crucial for developing fair and unbiased tests. Various factors can contribute to DIF, including:

Semantic Nuances: Differences in how words or phrases are understood across genders.

Linguistic Precision: Variations in language comprehension and usage between genders.

Cognitive Load: The mental effort required to process information, which may differ by gender.

Emotional Coloring: The emotional response elicited by certain test items, which can vary between genders.

Visual Representation: Differences in how visual elements are perceived and interpreted.

Minuscule Details: Any small, seemingly insignificant details that might affect performance differently across genders.

How do we approach gender DIF at TestGorilla?

Differential Item Functioning (DIF) analysis is an indispensable tool for developing and maintaining fair assessments. It allows us to detect and rectify biases, ensuring that our tests are valid and equitable. At TestGorilla, we recognize that while DIF analysis is crucial, it is only one part of a comprehensive strategy to minimize demographic differences in assessments. We implement a multifaceted approach to create assessments that provide accurate and fair evaluations for all candidates, regardless of their background.

Here’s what we’re doing at TestGorilla to ensure fairness:

Prevent bias during test development

To prevent building biased tests, we do the following things during the test creation process:

Expert Assessment Creators: Select test creators with relevant experience and expertise.

Comprehensive Training: Train test writers thoroughly to ensure they create inclusive questions and avoid biased language.

Inclusive Language: Use inclusive language and avoid culturally specific terms. Employ a name bank with multicultural, gender-neutral names during test creation.

Peer Review: Have test development experts from TestGorilla and Subject Matter Experts review all content for inclusivity and potential bias before assessments are used.

Conduct ongoing assessment and review of tests

After our tests are published and are being used, we continuously collect and analyze data to review them for potential bias.

DIF Analysis: Conduct Differential Item Functioning (DIF) analysis during the development phase and regularly after the test is released to candidates.

Continuous Monitoring: Regularly monitor and update tests to maintain fairness and relevance.

Upcoming Initiatives: We are planning to conduct DIF analyses for other protected groups.

By combining statistical analysis with expert review, TestGorilla ensures that our assessments are robust, equitable, and capable of providing fair evaluations for all candidates. This multifaceted approach not only meets industry standards but sets a benchmark for fairness and inclusivity in testing.

The results of our most recent gender DIF analysis

And with that, it feels right to share some data from our most recent DIF analysis.

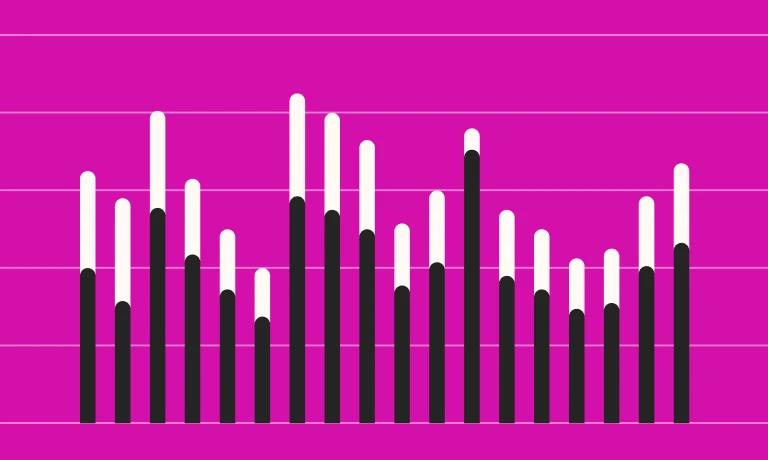

Our team conducted a thorough DIF analysis on 6,886 items from over 100 skill-based tests, focusing on gender differences (self-identified as male vs. female). Our study revealed that only about 0.6% of the items (42 of 6,886) were flagged with DIF (i.e., they had a large effect size), leading to their exclusion from our item bank. Among the flagged items, 54% exhibited a positive DIF (advantaging males), while 46% favored females.

Here are the key statistics from our analysis:

Number of analyzed tests: 119

Number of items analyzed for DIF: 6,886

Items flagged with DIF: 42 (about 0.6% of analyzed items)
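As a quick check on the headline figure, the short snippet below works through the arithmetic using the two counts reported here: 42 flagged items out of 6,886 analyzed. The variable names are illustrative only.

```python
# Counts taken from the key statistics reported in this post.
items_analyzed = 6886
items_flagged = 42

# Share of analyzed items that were flagged, as a proportion and as a percentage.
proportion = items_flagged / items_analyzed
print(f"proportion flagged: {proportion:.4f}")
print(f"percentage flagged: {proportion:.2%}")
```

The proportion is roughly 0.006, which corresponds to about 0.6% when written as a percentage.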

What comes next if an item is flagged?

All flagged items are examined by Subject Matter Experts and test development experts from TestGorilla to identify any patterns and prevent them from occurring again in future assessments.

Workplace equality starts with fair hiring practices

If you’ve made it this far, hopefully you have a better understanding of DIF and how we use it in our test analysis at TestGorilla. For more reading on similar topics, visit our science series or check out these blogs:

How skills-based hiring can help close the gender application gap

Pink collar jobs: How to break down gender barriers and attract diverse candidates

Science series materials are brought to you by TestGorilla’s team of assessment experts: a group of IO psychologists, data scientists, psychometricians, and IP development specialists with a deep understanding of the science behind skills-based hiring.

Sources

Martinková, P., Drabinová, A., Liaw, Y.-L., Sanders, E. A., McFarland, J. L., and Price, R. M. (2017). Checking Equity: Why Differential Item Functioning Analysis Should Be a Routine Part of Developing Conceptual Assessments. CBE—Life Sciences Education, 16(2), rm2.

Bohren, J. A., Hull, P., & Imas, A. (2022). Systemic discrimination: Theory and measurement (No. w29820). National Bureau of Economic Research.
