How to interpret test fact sheets, Part 1: Reliability

Establishing the reliability and validity of assessments is a complex process, involving the meticulous and ongoing examination of various pieces of evidence. Several psychometric measures contribute to this evidence, including reliability, content validity, construct validity, and criterion validity.

Since our goal is to provide you with reliable and valid assessments, we've developed Test Fact Sheets (find examples here of Test Fact Sheets for our Communication test, English B1 test, and Attention to Detail test) that offer a brief overview of the key psychometric properties of individual tests and assessments in our extensive library.

We understand that interpreting test fact sheets can sometimes be a bit daunting, especially if you're not a psychometric expert. That's why we've put together this easy-to-understand guide to help you navigate the valuable information presented in our test fact sheets. In part 1 of this blog series, we’ll focus on how to interpret test reliability.

Interested in understanding test validity a little better? Head to part 2.

Understanding reliability

A typical test fact sheet provides essential information about a test's reliability and validity. The first metric listed on our test fact sheets is reliability. But what is reliability, and how is it measured? And how do you interpret reliability coefficients? Read on to find out.

What is reliability?

Reliability refers to the consistency and stability of test scores. In simple terms, it tells you whether the test produces consistent results across repeated administrations to the same individual, over time, or across parallel versions of the test. Think of it this way: if you took a personality assessment today and then took the same assessment a month later, and your scores were very similar, this would indicate that the test is reliable for measuring your personality characteristics.

We do not expect tests to be perfectly reliable. Measuring human behavior is a complicated thing. Many factors can impact the reliability of test scores, for example:

The test taker’s psychological state at the time of taking the assessment (e.g., taking an assessment when you're feeling very stressed versus when you're relaxed)

The test taker’s physical state at the time of taking the assessment (e.g., taking a cognitive ability test when you're well-rested versus when you're fatigued)

Environmental factors (e.g., being in a noisy space while taking a test, or a toddler who distracts you and needs your attention)

This list is not exhaustive, and such factors introduce sources of chance or random measurement error into the assessment process.

If there were no random errors of measurement, the individual would get the same test score – the individual's "true" score – each time (you can learn more about true scores in our brief introduction to classical test theory). The degree to which test scores are unaffected by random measurement error is an indication of the reliability of the test.
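To make the idea of true scores and random error more concrete, here is a minimal simulation sketch. It assumes the classical test theory model (observed score = true score + random error) and uses made-up values for the spread of true scores and errors; the function names are hypothetical and chosen for illustration only.

```python
import random
import statistics


def pearson(x, y):
    """Pearson correlation between two equally long lists of numbers."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def simulate_retest(n_people=5000, true_sd=10.0, error_sd=5.0, seed=42):
    """Simulate two sittings of the same test: observed = true + random error."""
    rng = random.Random(seed)
    true_scores = [rng.gauss(50.0, true_sd) for _ in range(n_people)]
    first = [t + rng.gauss(0.0, error_sd) for t in true_scores]
    second = [t + rng.gauss(0.0, error_sd) for t in true_scores]
    return pearson(first, second)


# Assumed values: true-score SD of 10 and error SD of 5 (illustrative only).
# Reliability = true variance / (true variance + error variance) = 100 / 125.
theoretical = 10.0**2 / (10.0**2 + 5.0**2)
observed = simulate_retest()
print(f"theoretical reliability: {theoretical:.2f}")
print(f"simulated test-retest correlation: {observed:.2f}")
```

With a large simulated sample, the correlation between the two sittings should land close to the theoretical value of 0.80. Increasing `error_sd` pulls both numbers down, which is what "more random measurement error means lower reliability" looks like in practice.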

How do we measure reliability?

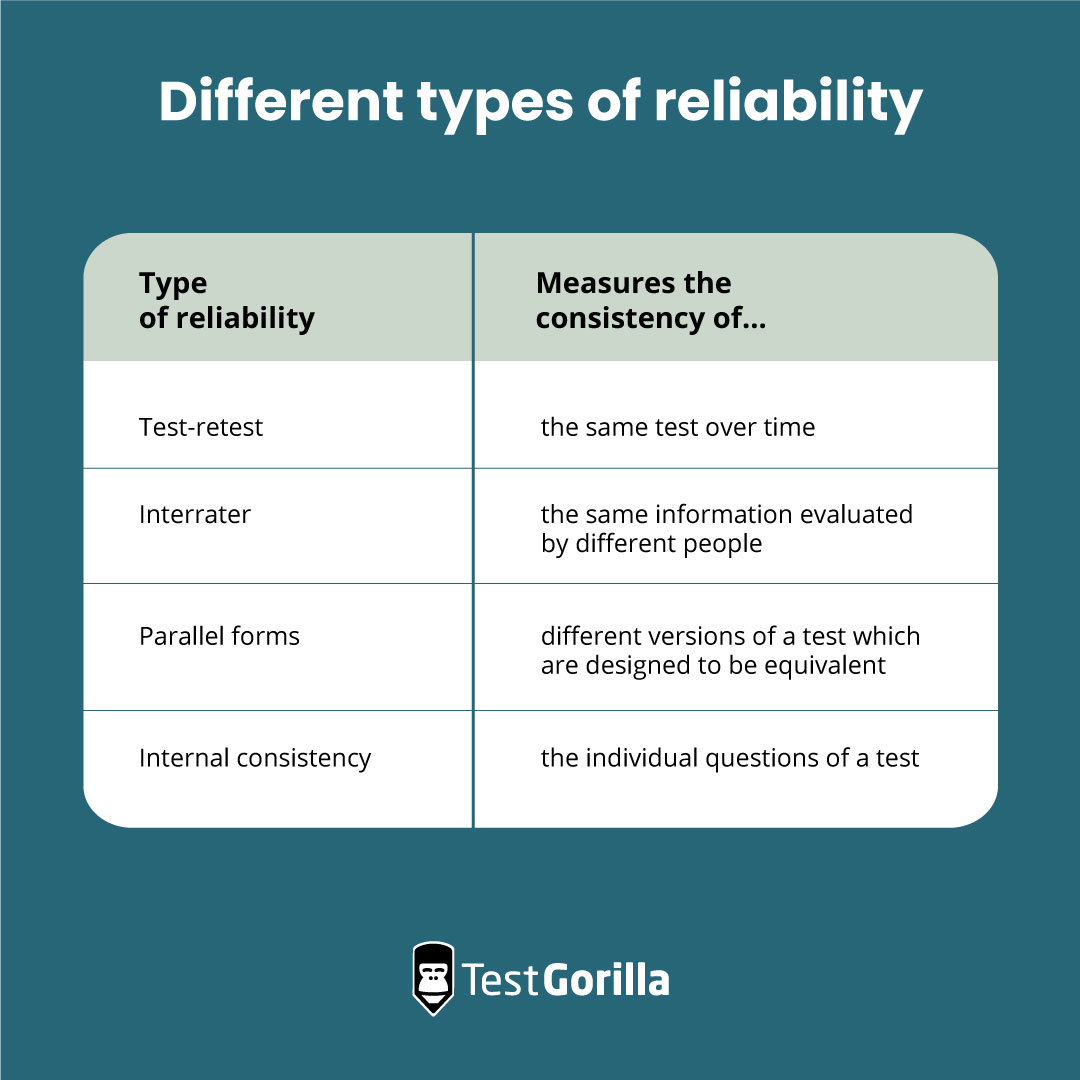

There are several different types of reliability, each assessing a specific aspect of consistency to determine how reliable a test is. These include test-retest reliability, parallel form reliability, interrater reliability, and internal consistency.

| Type of reliability | Measures the consistency of… |
| --- | --- |
| Test-retest | the same test over time. |
| Interrater | the same information evaluated by different people. |
| Parallel forms | different versions of a test which are designed to be equivalent. |
| Internal consistency | the individual questions of a test. |

As per industry standards, we typically report the internal consistency, which is indicated by Cronbach's Alpha (also called coefficient alpha or α).

Cronbach’s Alpha measures how consistent measurement is across the questions in a test. Each question is essentially a “mini-test,” and people’s responses should be relatively consistent across the questions in the test. We therefore expect that questions will correlate relatively highly with each other, leading to a high(er) reliability coefficient.
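For readers who like to see the arithmetic, here is a small sketch of the standard Cronbach's alpha formula: alpha = k / (k − 1) × (1 − sum of question variances / variance of total scores), where k is the number of questions. The function name and the response data are made up for illustration and do not come from any real test.

```python
import statistics


def cronbach_alpha(responses):
    """Cronbach's alpha for a table of scores.

    `responses` has one row per test taker and one column per question.
    """
    k = len(responses[0])
    # Variance of each question across test takers (sample variance).
    item_variances = [statistics.variance(col) for col in zip(*responses)]
    # Variance of each test taker's total score.
    total_variance = statistics.variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Made-up ratings (1-5) from five test takers on four questions.
responses = [
    [4, 5, 4, 4],
    [2, 2, 3, 2],
    [5, 4, 5, 5],
    [3, 3, 3, 4],
    [1, 2, 1, 2],
]
print(f"alpha = {cronbach_alpha(responses):.2f}")  # alpha = 0.96
```

In this toy data set, people who score high on one question tend to score high on the others, so the questions "agree" with each other and alpha comes out high.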

A practical example

Now, let’s go through a practical example. Imagine you have a test designed to measure the construct "Team Leadership Skills." The test consists of four questions in which test-takers need to rate the extent to which they agree with a statement on a 5-point scale ranging from strongly disagree to strongly agree. The four statements are:

"I am effective at delegating tasks and responsibilities to team members."

"I can inspire and motivate my team to achieve their goals."

"I am organized and can plan and coordinate team activities efficiently."

"I enjoy participating in team-building activities outside of work."

In this example, all four questions are intended to measure the same construct: "Team Leadership Skills." However, the first three questions are closely related as they directly address leadership behaviors, such as delegation, motivation, and organization, which are essential for effective team leadership. On the other hand, the fourth question, about enjoying team-building activities, is less closely related. While it measures an aspect of teamwork, it is not as tightly linked to the core leadership behaviors assessed by the other questions.

When calculating Cronbach's Alpha for this test, the first three questions would likely contribute more to the internal consistency of the test, because they are more closely related to the primary construct of "Team Leadership Skills." The fourth question, though related, would not contribute as strongly to the internal consistency of the test due to its lower direct relevance to leadership behaviors.
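One common way to see how much each question contributes is to recompute alpha with each question left out in turn. The sketch below does this for the four-statement example using made-up ratings in which the fourth statement is deliberately unrelated to the other three (an exaggeration, for clarity). The helper names are hypothetical.

```python
import statistics


def cronbach_alpha(responses):
    """Cronbach's alpha; rows are test takers, columns are questions."""
    k = len(responses[0])
    item_variances = [statistics.variance(col) for col in zip(*responses)]
    total_variance = statistics.variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


def alpha_if_deleted(responses):
    """Alpha recomputed with each question left out in turn."""
    k = len(responses[0])
    results = []
    for drop in range(k):
        reduced = [[v for i, v in enumerate(row) if i != drop] for row in responses]
        results.append(cronbach_alpha(reduced))
    return results


# Made-up ratings (1-5) from five test takers on the four statements.
# Column 4 (team-building) is deliberately unrelated to columns 1-3.
ratings = [
    [4, 5, 4, 3],
    [2, 2, 3, 4],
    [5, 4, 5, 2],
    [3, 3, 3, 5],
    [1, 2, 1, 3],
]

alpha_all = cronbach_alpha(ratings)
print(f"alpha, all four questions: {alpha_all:.2f}")
for number, value in enumerate(alpha_if_deleted(ratings), start=1):
    print(f"alpha without question {number}: {value:.2f}")
```

With these illustrative numbers, alpha is about 0.63 for all four questions and rises to about 0.95 when the fourth question is left out, while leaving out any of the first three lowers it. Real data will rarely be this clear-cut, and a lower alpha alone is not a reason to drop a question that covers relevant content.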

How do you interpret reliability coefficients?

The reliability of a test is indicated by a reliability coefficient. It is denoted by the letter "r," and is expressed as a number ranging between 0 and 1.00, with r = 0 indicating no reliability, and r = 1.00 indicating perfect reliability.

The higher the value, the more stable, consistent, and free from random measurement error the test scores are. That said, extremely high alpha values are not desirable either, as they may indicate that too many questions measure the same thing (i.e., some questions add no unique value to the test).

While there are some general guidelines for interpreting reliability coefficients, these should not be rigidly followed and should never be considered in isolation when evaluating the quality of a test. An acceptable reliability coefficient will vary depending on the context, type of test, the construct measured, and the reliability estimate used.

Interpreting Cronbach’s alpha

When it comes to interpreting Cronbach’s alpha, it is important to realize that this coefficient is sensitive to a number of factors, including the number of questions in a test and how strongly the questions in a test are related to each other. This has a couple of important implications when we interpret this reliability coefficient.

First, it means that a high coefficient alpha isn't in and of itself the mark of a "good" or reliable set of questions. You can often increase the coefficient simply by increasing the number of questions in the test. A short test with “good” questions could have a lower Cronbach’s alpha than a longer test with “less good” questions.

Second, it means that a test that measures a broader domain can be expected to have a lower Cronbach’s alpha than a test that measures a much narrower domain.

Consider, for example, a personality assessment that measures extraversion, which is defined as being outgoing, social, and energized by interactions with others. Say that we decide to measure extraversion with an assessment that consists of four questions in which test-takers need to rate the extent to which they agree with a statement on a 5-point scale ranging from strongly disagree to strongly agree. The four statements are:

"I am the life of the party."

"I get energized when attending parties."

"I prefer to stay home instead of going to parties."

"I mingle with a variety of people at parties."

This test would be considered highly "reliable" (i.e., having high internal consistency) due to its very narrow content domain about extraversion – all of these questions relate to how you behave at parties. These four questions would be expected to be highly correlated, and as a result the assessment would have a high Cronbach’s alpha coefficient.

Now, consider a test like our Software Engineer test which evaluates test-takers’ knowledge of basic principles and topics of software engineering, including linear data structures, non-linear data structures, algorithm analysis, and computer science fundamentals.

The content domain of the Software Engineer test is much broader than that of the aforementioned extraversion test, which focuses on behavior at parties. Because of this broader domain, the questions in the Software Engineer test are likely to be less correlated with one another, and the test is therefore likely to have a lower "reliability" (i.e., internal consistency) than the extraversion test.

In short, tests that have more questions and/or cover very narrow skill areas are more likely to deliver a higher alpha value than shorter tests and/or tests measuring broader skill or knowledge domains.
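Both effects can be seen in the standardized form of Cronbach's alpha, which depends only on the number of questions k and the average correlation between questions r: alpha = k × r / (1 + (k − 1) × r). The sketch below plugs in a few hypothetical tests; the question counts and correlations are assumptions chosen for illustration, not values from any TestGorilla test.

```python
def standardized_alpha(n_questions, mean_correlation):
    """Standardized alpha from test length and average inter-question correlation."""
    k, r = n_questions, mean_correlation
    return (k * r) / (1 + (k - 1) * r)


# Hypothetical tests; the correlations are illustrative, not measured values.
scenarios = [
    ("short test, strongly related questions", 10, 0.30),
    ("long test, weakly related questions", 30, 0.15),
    ("narrow domain, 4 questions", 4, 0.60),
    ("broad domain, 4 questions", 4, 0.20),
]
for label, k, r in scenarios:
    print(f"{label}: alpha = {standardized_alpha(k, r):.2f}")
```

Under these assumed values, the 30-question test with weakly related questions reaches a higher alpha (about 0.84) than the 10-question test with more strongly related questions (about 0.81). At the same length of four questions, the narrow test (about 0.86) clearly outscores the broad one (0.50).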

A balancing act

TestGorilla’s test library consists of approximately 400 tests which are designed to be efficient, reliable and valid assessments covering a wide range of skills and knowledge domains.

Some of our tests cover broader content domains (like the Business judgment test or the Customer Service test) than others (such as the Email Skills: Microsoft Outlook test, or the Hubspot CRM test), making them inherently more diverse and thus potentially resulting in slightly lower Cronbach's Alpha values. At the same time, the breadth of our tests allows us to provide you with valuable insights into various aspects of candidates' job-related skills and knowledge.

To learn more about job relatedness, check out: What is predictor-criterion congruence? and Legal risk and skills-based hiring.

Our team of assessment development specialists and psychometricians collaborate closely to ensure the skills and knowledge necessary for a particular topic are well-represented by the test and the test questions, that these questions are reliable and valid, and that an adequate balance is maintained between the breadth of the test content (i.e., how closely related questions are) and the psychometric soundness thereof.

This is where content and construct validity come into play (see Part 2 of this blog series for more information about validity) and why it’s important not to judge the quality of a test on its reliability coefficient alone.

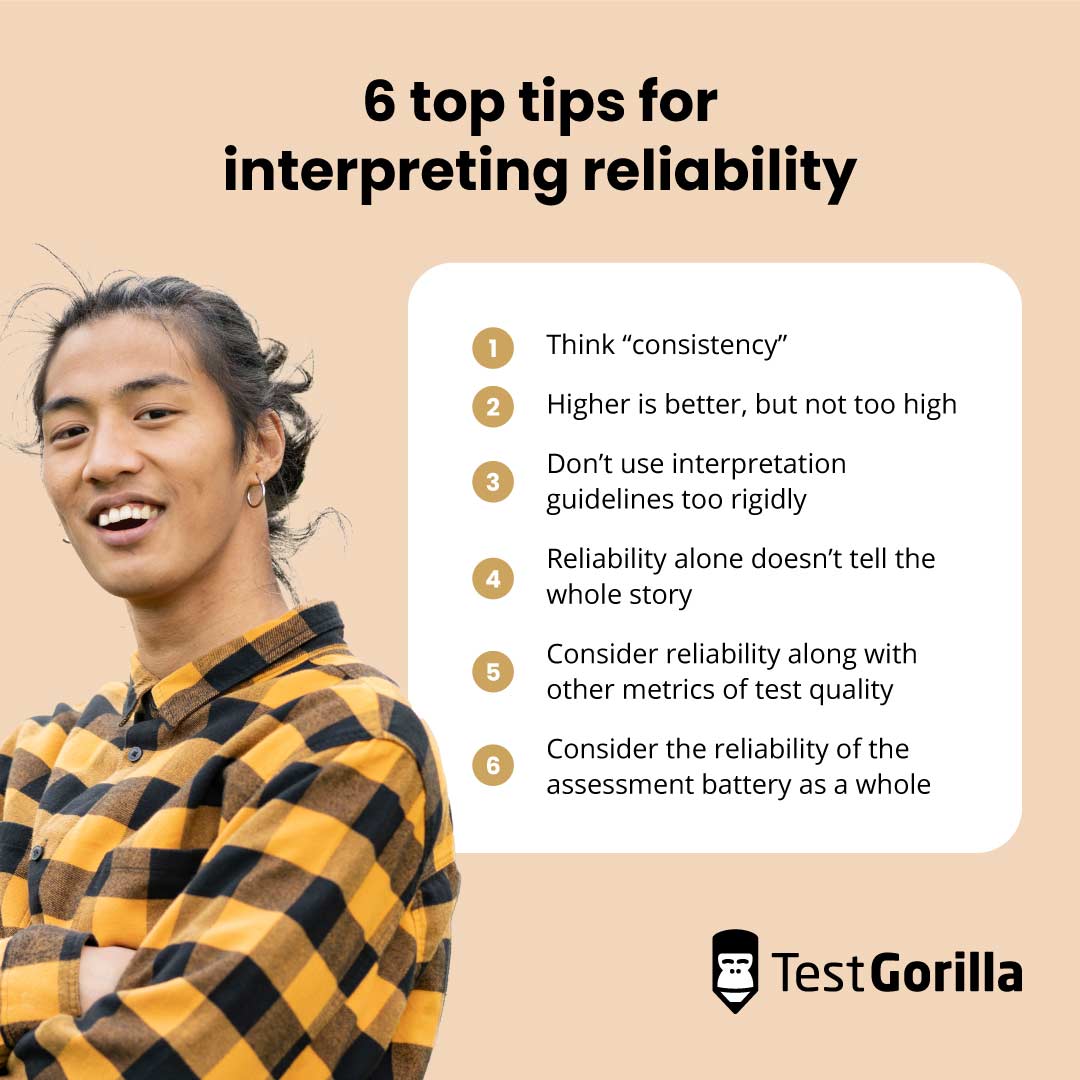

6 top tips for interpreting reliability

To help you interpret the reliability metrics on TestGorilla’s Test Fact Sheets, we’ve put together six top tips.

1. Think “consistency”

Think of reliability as a measure of consistency. If a test has a high reliability coefficient, it suggests that when you repeat the test, you're likely to get similar results.

2. Higher is better, but not too high

In general, a higher reliability coefficient is a good sign. It usually means the test is more consistent and stable. However, extremely high values can sometimes indicate redundancy among test questions, so there's a balance to strike.

3. Don’t use interpretation guidelines too rigidly

It’s useful to consider general guidelines for interpreting reliability coefficients, but one also needs to consider the context in which the test is used, the type of test, the construct measured, and the reliability estimate used.

4. Reliability alone doesn’t tell the whole story

One needs more than a reliability coefficient to fully assess how "good" a test is at measuring something. A test may have lower reliability if it measures a broad range of skills or knowledge, or if it aims to be an economical (i.e., short) measure of a particular skill or characteristic. In such cases, evidence of validity becomes even more critical. Therefore, assessments should not be selected or rejected solely based on the magnitude of their reliability coefficients.

5. Consider reliability along with other metrics of test quality

Reliability is just one piece of the psychometric puzzle. Other factors like content validity, construct validity, and criterion-related validity should also be examined to get the complete picture of a test's quality. We’ll dive deeper into these in parts 2 and 3 of ‘How to interpret test fact sheets.’

6. Consider the reliability of the assessment battery as a whole

Most decision-makers use a combination of tests to evaluate candidates’ suitability for a role and often look at the overall assessment score to make the hiring decision (i.e., a total, average, or weighted score that is calculated based on the scores of individual tests). In this case, the reliability of the overall assessment score is more important and a more representative metric of the hiring process’ reliability than any single test score’s reliability. A further advantage is that the reliability of the overall assessment score is typically higher than the reliability of the scores from the individual tests.
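As a rough illustration of why combining tests helps, the sketch below applies a well-known formula for the reliability of an unweighted sum of test scores (often attributed to Mosier): 1 − (sum of each test's error variance) / (variance of the summed score). The three tests, their reliabilities, and their intercorrelations are hypothetical values; this is not a description of how TestGorilla computes overall scores.

```python
def composite_reliability(variances, reliabilities, correlations):
    """Reliability of an unweighted sum of test scores.

    variances:     score variance of each test
    reliabilities: reliability coefficient of each test
    correlations:  matrix of correlations between the tests
    """
    n = len(variances)
    sds = [v ** 0.5 for v in variances]
    # Variance of the sum = sum of variances + all pairwise covariances.
    composite_variance = sum(variances)
    for i in range(n):
        for j in range(n):
            if i != j:
                composite_variance += correlations[i][j] * sds[i] * sds[j]
    # Error variance of each test = variance * (1 - reliability).
    error_variance = sum(v * (1 - r) for v, r in zip(variances, reliabilities))
    return 1 - error_variance / composite_variance


# Hypothetical battery: three standardized tests, each with reliability 0.70,
# correlating 0.30 with one another.
variances = [1.0, 1.0, 1.0]
reliabilities = [0.70, 0.70, 0.70]
correlations = [
    [1.0, 0.3, 0.3],
    [0.3, 1.0, 0.3],
    [0.3, 0.3, 1.0],
]
overall = composite_reliability(variances, reliabilities, correlations)
print(f"overall score reliability: {overall:.2f}")  # 0.81
```

In this example, the overall score is more reliable (about 0.81) than any single test (0.70). The size of the gain depends on how positively the tests correlate with one another.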

Next up: How to interpret the validity metrics on our test fact sheets

Understanding the reliability of pre-employment assessments is a crucial step in making informed hiring decisions – but, while reliability coefficients offer valuable insights, it's essential not to rely on them alone.

Read Part 2 of our "How to interpret Test Fact Sheets" series, where we dive into how you can interpret the validity metrics reported in our Test Fact Sheets to get a comprehensive understanding of our assessments.

Science series materials are brought to you by TestGorilla’s team of assessment experts: a group of IO psychologists, data scientists, psychometricians, and IP development specialists with a deep understanding of the science behind skills-based hiring.
