How to interpret test fact sheets, Part 2: Validity

At TestGorilla, we are committed to providing assessments that are not only reliable but also valid, ensuring that they are practical, relevant, and impactful tools for talent assessment.

In the first installment of our series on how to interpret test fact sheets, we delved into the complexities of reliability, shedding light on how it underscores the consistency and dependability of our assessments. Building on that foundation, Part 2 of this series ventures into another cornerstone of psychometric robustness: Validity.

Understanding validity is crucial to using skills-based tests in hiring, as it determines how well a test measures what it is intended to measure, making it a vital component in the effectiveness of any assessment tool. Validity consists of multiple aspects, each offering unique insights into the efficacy of our assessments.

Part 2 of our guide is designed to demystify the concept of validity, making it digestible and understandable to non-psychometric experts. We’ll look at different aspects of validity, including content validity, construct validity, and criterion-related validity. Join us as we delve deeper into the world of validity to empower you to make informed decisions when utilizing our assessments for your organizational needs.

Understanding validity

What is validity?

Validity is all about the accuracy of measurement. We want test scores to be accurate because we draw conclusions and make decisions based on test scores. In the selection context, we typically draw two types of conclusions from test scores:

Conclusions about the candidate’s skills, abilities, knowledge, or other attributes being measured

Conclusions about how the candidate will perform in the job based on their test scores.

Here’s an example. Imagine that you administer the Communication test to candidates applying for an Executive Assistant role. You want the test scores to provide an indication of how well a candidate communicates in business settings compared to others. You also want to know that the test measures what it is supposed to measure (i.e., the attribute). The test would be valid when people who communicate well in business settings receive consistently higher scores on the Communication test than people who are less adept communicators in a business setting.

On the other hand, you also want to use the Communication test scores to make an employment decision: to decide whether a person should get a job or not. You conclude that people with higher scores on the Communication test have a higher probability of succeeding in the job as Executive Assistant. The test can be said to be valid when it is useful for making accurate decisions about an individual's future success in a role.

Is validity different from reliability?

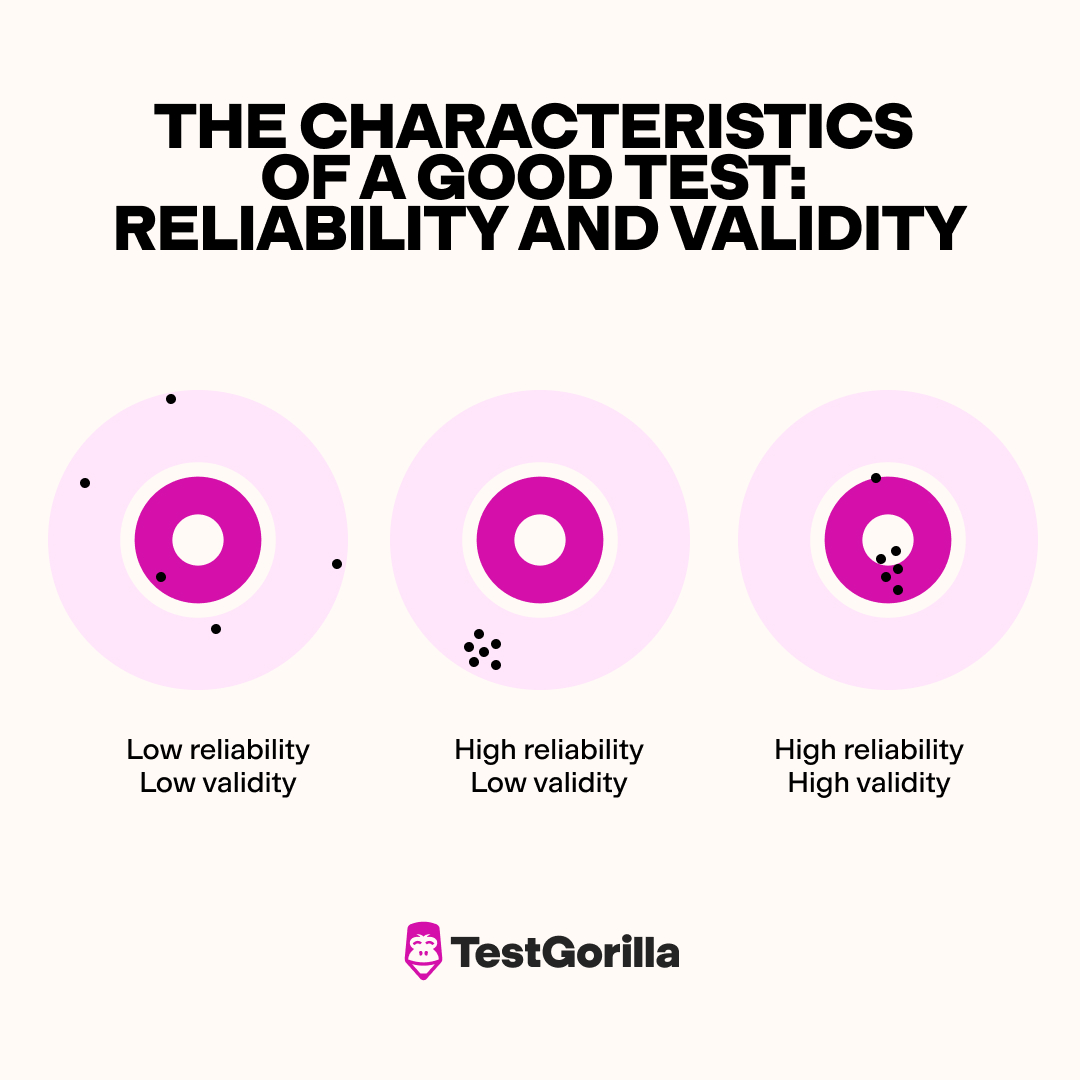

Validity is related to but distinct from reliability. While reliability refers to the consistency or stability of test scores, validity relates to the accuracy of measurement.

The picture below shows three targets illustrating different combinations of reliability and validity, with the most effective tests and assessments being those that achieve high reliability, represented by tight groupings of marks, and high validity, indicated by those groupings being centered on the bullseye.

Image 1. The characteristics of a good test: reliability and validity

Evaluating validity

How is validity assessed?

There is no gold standard for establishing the validity of a test. Judgments about validity are always subjective, but they must rest solidly on multiple lines of accumulated empirical evidence.

At TestGorilla, the validity of each test in our test library is assessed through a multistep process that encompasses both rigorous evaluation and empirical data-based checks. We collect evidence from a variety of sources and we use multiple strategies to establish the validity of our tests.

The validity of test scores is meticulously evaluated by TestGorilla’s team of assessment development specialists, psychometricians, and data scientists, and reported in a test fact sheet. In our test fact sheets, we report on various aspects of validity, including content validity, face validity, construct validity, and criterion-related validity.


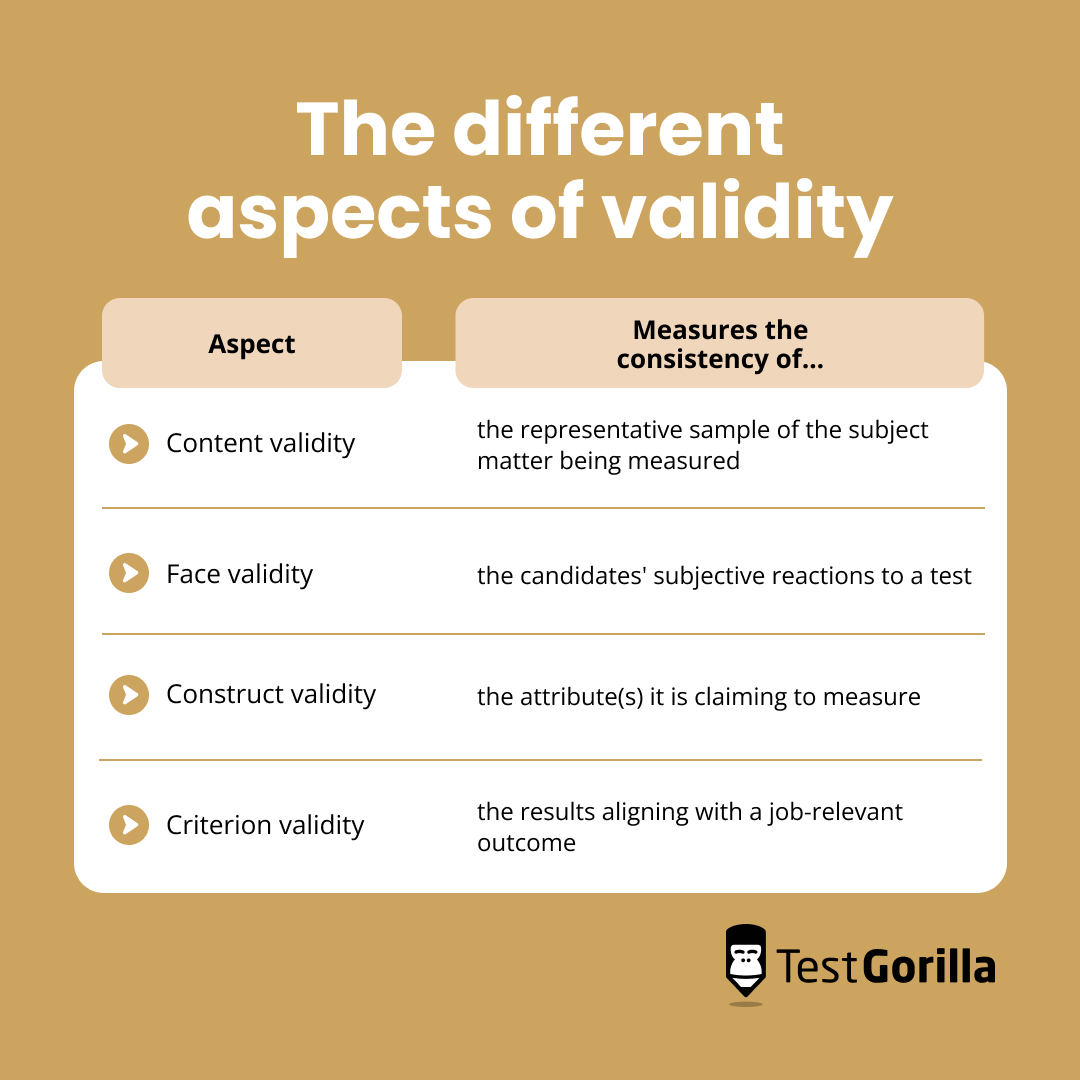

The different aspects of validity

Content validity

As the name suggests, content validity is all about the content covered in a test. It is defined as the extent to which a test covers a representative sample of the subject matter (e.g., skill, ability, personality trait, work preference) being measured.

Sometimes the subject matter being measured can be quite narrow (with, for example, a Basic triple-digit math test or Typing Speed (Lowercase Only)), while other times it can be quite broad (as with a Software engineer test or an HR Management test). But irrespective of the breadth of the content domain, a test is said to have high content validity when it is judged by subject matter experts to contain an adequate sample of the particular skill, ability, or characteristic being measured.

At TestGorilla, we ensure the content validity of our tests by adopting a rigorous multistage test development and review process, utilizing multiple subject matter experts (SMEs) to design the test and write its items. Here’s a brief overview of the process:

TestGorilla’s assessment development specialists work with SMEs to create and review a validated test structure and test questions.

After the test content has been developed, the new test undergoes an additional review by an independent SME for accuracy and technical correctness. Together with SMEs, our assessment development specialists work to ensure the test aligns with industry best practices and is valid, reliable, fair, and inclusive.

After publication, all our tests undergo a continuous monitoring and evaluation process. We closely watch our tests to ensure their content stays relevant as the subject matter evolves and changes.

Using this multistage process, we’re certain that every test that goes live in our test library covers the content necessary to evaluate people of varying proficiency in the test’s subject matter.

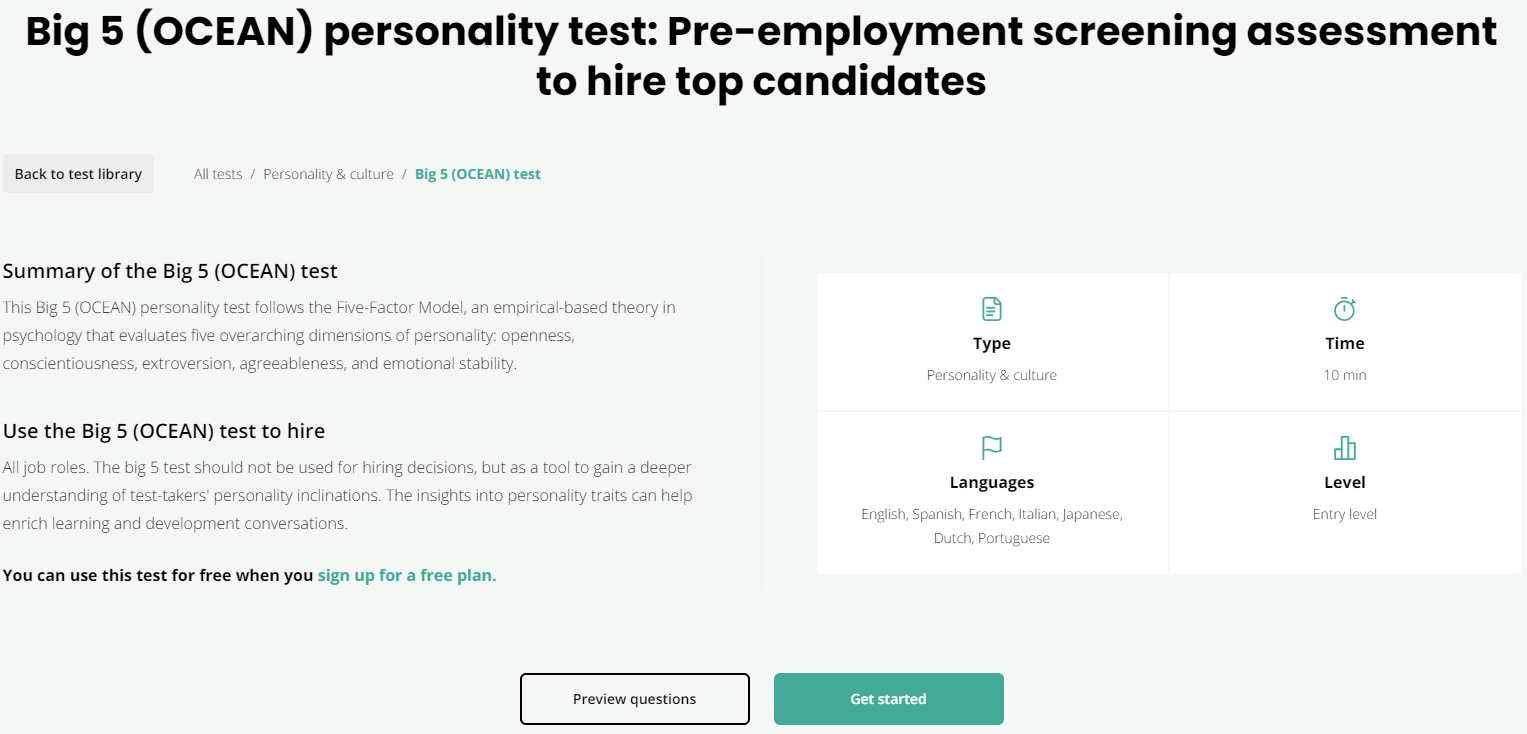

The test descriptions provided in our test library aim to give you insight into the content of a specific test and help you determine its suitability for a particular role. We always provide you with a summary of the test, the skills covered in the test, and the typical roles for which the test may be useful in the hiring process. In addition, we provide a couple of preview questions to show you how questions are presented to candidates and how their responses would be evaluated.

Image 2. Our test descriptions provide insight into the subject matter covered in each of our tests.

Face validity

Face validity refers to the extent to which a test appears reasonable to those who take it. Put differently, it reflects candidates' subjective reactions to a test. A fair, meaningful, and engaging hiring process is one that is also trusted and respected by the candidates taking part in it.

At TestGorilla, we assess face validity after each test and again at the end of the assessment. At both stages, candidates are invited to provide real-time quantitative and qualitative feedback on their test experience and are surveyed about the perceived validity and relevance of the test items they have just completed, as well as their overall experience and satisfaction in using our tests.

The image below shows an example of a face validity question that is presented to candidates after completing the Talent Acquisition test:

Image 3. After completing a test, we ask candidates to evaluate the face validity of the test.

In our test fact sheets, face validity is reported as an average score on a scale of 1 to 5. An average score above 3 indicates that candidates mostly find the test relevant and accurate in measuring their skills. Using tests with high face validity, like our Attention to detail (visual) test, could lead to increased candidate satisfaction, as candidates may perceive such tests to be an accurate evaluation of job-relevant skills, thereby resulting in greater satisfaction with the overall hiring process.
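To make the reported number concrete, here is a minimal sketch of how an average face validity score on a 1-to-5 scale could be computed and compared with the midpoint of 3. The function name and the ratings are hypothetical and invented for illustration; this is not a description of TestGorilla's actual scoring pipeline.

```python
from statistics import mean


def face_validity_score(ratings):
    """Return the average of candidate ratings given on a 1-to-5 scale."""
    if not ratings:
        raise ValueError("at least one rating is required")
    if any(r < 1 or r > 5 for r in ratings):
        raise ValueError("ratings must be between 1 and 5")
    return mean(ratings)


# Hypothetical candidate ratings, made up for this example.
example_ratings = [4, 5, 3, 4, 2, 5, 4]

score = face_validity_score(example_ratings)
print(f"Average face validity: {score:.2f}")

# Following the rule of thumb in the text: above 3 means candidates
# mostly find the test relevant and accurate.
if score > 3:
    print("Candidates mostly find the test relevant.")
else:
    print("Candidates are less convinced of the test's relevance.")
```

An average hides the spread of opinions, so it can be worth looking at the distribution of ratings as well when that information is available.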

While face validity plays a crucial role in ensuring a fair and engaging hiring process, it's important to recognize that certain predictive constructs could be assessed in a more abstract or nuanced way (for example, personality traits like conscientiousness or cognitive abilities like critical thinking), potentially leading to lower face validity ratings.

These constructs, although perhaps not immediately apparent in their relevance to candidates, often demonstrate good criterion validity, meaning they are effective in predicting job performance. Therefore, while a high face validity score is desirable, it's essential to balance this with the predictive accuracy of the test, especially when measuring complex or less tangible skills that are critical to job success.

Construct validity

Construct validity refers to the extent to which the test actually measures the attribute(s) it is claiming to measure. TestGorilla has a wide library of skills tests and, depending on the specific test and test type, we gather statistical evidence to support the construct validity of our tests with evidence of convergent validity, discriminant validity, and/or factorial validity.

Convergent validity and discriminant validity are two facets of construct validity. Convergent validity refers to the extent to which a test correlates with tests that measure similar/related constructs, while discriminant validity refers to the extent to which a test does not correlate, or correlates only weakly, with tests that measure dissimilar/unrelated constructs.

These two aspects of construct validity are established by contrasting patterns of correlations between different pairs of test scores. In practice, this means we’re looking at the patterns of relationships between our tests to better understand them.

For instance, our Communication test demonstrates strong correlations with related tests like English B1 (r = .66), Understanding instructions (r = .47), and Verbal reasoning (r = .47), indicating convergent validity. In contrast, it shows weaker correlations with unrelated skills, such as HTML5 (r = .24), Basic double-digit math (r = .23), and Business judgment (r = .22), demonstrating discriminant validity.
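The pattern described above can be illustrated with a small sketch that computes Pearson correlations between pairs of test scores. The scores below are hypothetical and invented for this example (they are not TestGorilla data), and the variable names are only labels chosen for illustration.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of equal length with at least 2 scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("scores must vary for a correlation to be defined")
    return covariance / (spread_x * spread_y)


# Hypothetical scores for the same eight candidates on three tests.
communication = [55, 62, 70, 48, 81, 66, 74, 59]
english_b1 = [58, 60, 75, 50, 78, 70, 69, 61]  # related skill
html5 = [40, 72, 55, 63, 58, 45, 70, 52]       # unrelated skill

r_related = pearson_r(communication, english_b1)
r_unrelated = pearson_r(communication, html5)

print(f"Communication vs. English B1: r = {r_related:.2f}")
print(f"Communication vs. HTML5:      r = {r_unrelated:.2f}")

# Evidence of construct validity is the contrast between the two:
# a stronger correlation with the related test than with the unrelated one.
print("Expected pattern:", r_related > r_unrelated)
```

The point is the contrast rather than any single value: convergent evidence shows up as the higher correlation, discriminant evidence as the lower one.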

Criterion validity

Criterion validity of a test is often hailed as one of the most important types of validity. This type of validity refers to the extent to which test results align with some job-relevant outcome measure. In the hiring context, the outcome of interest is most often some aspect of job performance.

There is a vast amount of scientific literature on the criterion validity of different tests and test types in the hiring process, and we have explored recent findings in detail in our blog series on Revisiting the validity of different hiring tools: New insights into what works best (read Part 1 and Part 2 here). Since TestGorilla is committed to rigorous empirical research on the merit and quality of its tests, we both conduct independent in-house studies and partner with our customers to deliver cutting-edge insights into the performance of our tests. You can read more about the opportunity to partner with us in this endeavor here.

In our test fact sheets, criterion validity is reported as a validity coefficient based on the relationship between test scores and hiring outcomes (e.g., ratings from the hiring team, hired/not hired). A validity coefficient is a correlation coefficient, typically reported on a range from 0 to 1, that quantifies the strength of the relationship between test scores and job performance. Higher values indicate a stronger relationship between scores on pre-employment tests and measures of job performance.

Based on the recent meta-analytic research in the field (Sackett et al., 2022), values of validity coefficients below .07 are typically seen as low, values between .07 and .15 are seen as moderate, values between .16 and .29 are seen as substantial, and values above .29 are considered high (Highhouse & Brooks, 2023). We recommend using these guidelines for the interpretation of validity coefficients, although these coefficients should always be interpreted in context and in combination with other metrics (e.g., adverse impact).
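The benchmarks above can be written as a small lookup, sketched here under two assumptions that are not stated in the article: coefficients are rounded to two decimals before being compared (so a value such as .155 is read as .16), and values outside the 0 to 1 range are rejected. The function name is hypothetical.

```python
def interpret_validity(coefficient):
    """Label a validity coefficient using the benchmarks quoted in the text."""
    if not 0 <= coefficient <= 1:
        raise ValueError("expected a validity coefficient between 0 and 1")
    r = round(coefficient, 2)  # assumption: coefficients are read to two decimals
    if r < 0.07:
        return "low"
    if r <= 0.15:
        return "moderate"
    if r <= 0.29:
        return "substantial"
    return "high"


# Illustrative values only; these are not coefficients from any fact sheet.
for value in (0.05, 0.12, 0.22, 0.35):
    print(f"r = {value:.2f}: {interpret_validity(value)}")
```

A label like this is only a starting point. As noted above, the coefficient should still be read in context and alongside other metrics such as adverse impact.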

4 top tips for interpreting validity

Here are 4 top tips for interpreting the validity metrics on TestGorilla’s test fact sheets.

1. Think “big picture”

While each aspect of validity is reported separately, they’re really intended to be considered all together. A high-quality test is one that has good overall validity and reliability ratings and serves its purpose within your hiring process. It is important to always consider the full basket of evidence when evaluating the quality of a test.

2. Consider multiple aspects of validity

Ensure you consider all the aspects of validity to get a comprehensive understanding of a test's effectiveness. Some aspects of validity may be better than others or lower than what you would ideally expect. In this case, it is important to understand how these evaluations are produced and what they mean for your organization and hiring process.

While every aspect of test validity should ideally be acceptable, not all need to be stellar for a test to produce satisfying results in your hiring process. For example, a test might have low face validity, but good criterion-related validity. Similarly, a test might have poorer construct validity, but great content validity. The ultimate test of any hiring process is whether it is job-relevant (i.e., predictive of performance) and non-discriminatory (see our blog on the legal defensibility of skills-based hiring).

3. Consider test relevance

Align the test's content with the job role. A test with high content validity will cover a representative sample of the skills and abilities required for the specific job position. Look at the test description provided for each test in our test library as well as the preview items, and/or ask subject matter experts at your organization (i.e., current job incumbents, the line manager who is responsible for the role) to complete a test. You can also look at our assessment templates that are pre-filled with potentially relevant tests for many roles.

Although validity is something we are focussed on, we want to emphasize that our customers are the experts when it comes to the jobs they’re hiring for. We’re here to provide you with and help you to select the best test, but, ultimately, only the employer can truly ensure job-relatedness.

4. Contextualize validity coefficients

Interpret validity coefficients in context. Remember that higher coefficients indicate a stronger relationship between test scores and job performance, but also factor in the test's potential impact on diverse candidate groups. Meta-analytic studies and local validity studies can help you to figure out what the typical and expected validity coefficients for your particular hiring context are.

These tips should guide you in effectively interpreting the validity aspects of our test fact sheets, helping you to make informed decisions when selecting assessments for your organizational needs.

To read more about how scientists determine the validity of different hiring tools, check these blog posts in our science series:

Revisiting the validity of different hiring tools: New insights into what works best

Revisiting the validity of different hiring tools: New insights into what works best – Part 2

Science series materials are brought to you by TestGorilla’s team of assessment experts: a group of IO psychologists, data scientists, psychometricians, and IP development specialists with a deep understanding of the science behind skills-based hiring.

Sources

Sackett, P. R., Zhang, C., Berry, C. M., & Lievens, F. (2022). Revisiting meta-analytic estimates of validity in personnel selection: Addressing systematic overcorrection for restriction of range. Journal of Applied Psychology, 107(11), 2040.

Highhouse, S., & Brooks, M. E. (2023). Interpreting the magnitude of predictor effect sizes: It is time for more sensible benchmarks. Industrial and Organizational Psychology, 16, 332–335.
