66 Apache Kafka interview questions to hire top talent (+ 26 sample answers)

Hiring skilled data engineers and software developers is crucial to the success of your big data projects. If your team uses Apache Kafka, you need a reliable way to identify true Apache Kafka experts: professionals who’ll drive success and avoid costly mistakes like system bottlenecks, message loss, and inefficient data streaming. Doing so involves asking the right structured interview questions. To get you started, we’ve compiled the top 26 interview questions to ask your Apache Kafka candidates.

Top 26 Kafka interview questions to hire the best data engineers and software developers

Below, you’ll find our selection of the best 26 interview questions you can use during interviews to evaluate candidates’ technical knowledge and hands-on experience with Apache Kafka.

We’ve also included sample answers to help you assess responses, even if you’re not a Kafka expert yourself.

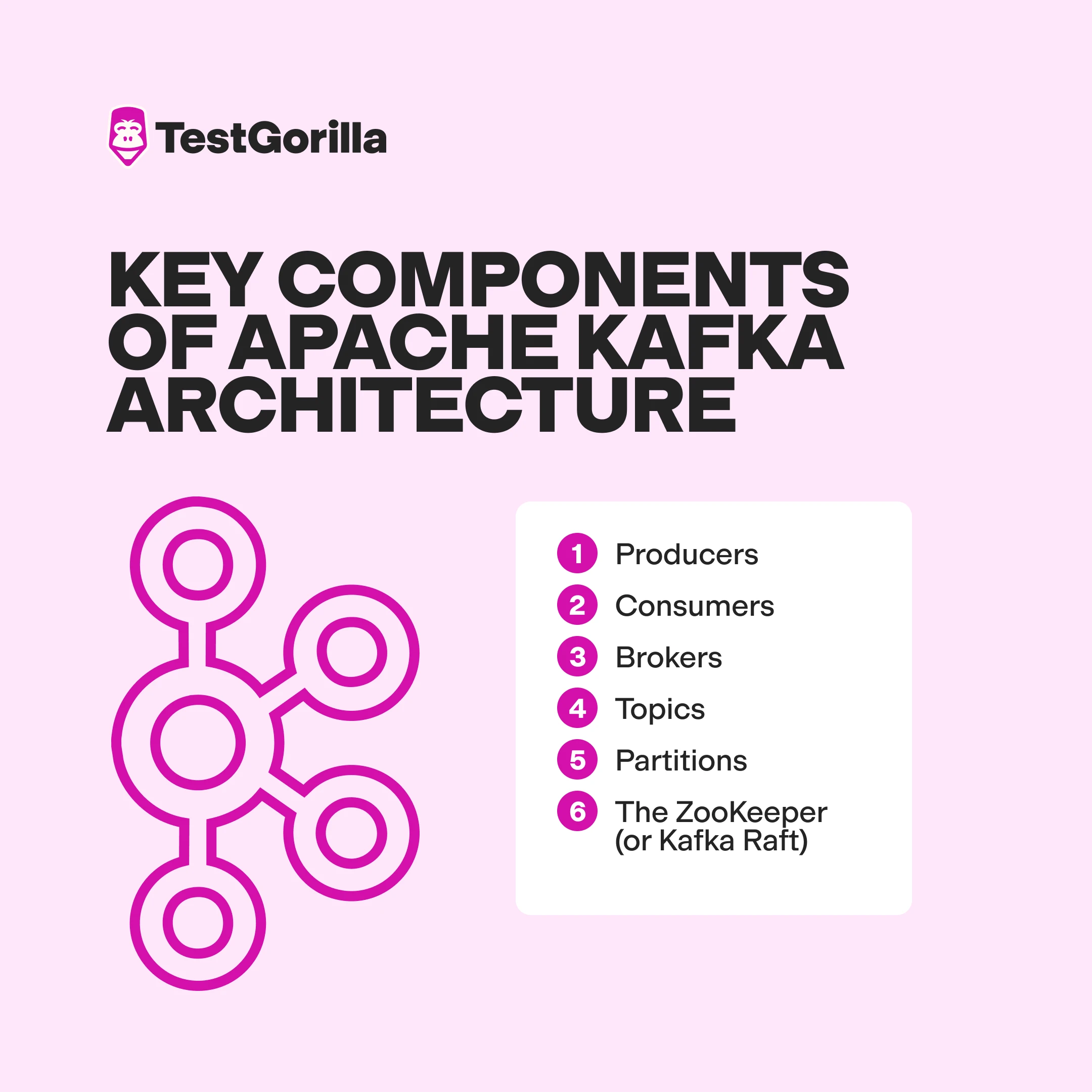

1. Can you describe the basic architecture of Kafka?

Kafka is a distributed, high-throughput, fault-tolerant stream-processing platform. It’s designed with a storage layer and a compute layer. The key components of its architecture are:

Producers – These are applications that publish data to Kafka topics. For example, producers may send website user activity information such as page views or clicks to Kafka.

Consumers – These are applications that subscribe to topics and then process the data. Consumers may work on an analytics dashboard to process user-activity data in real time.

Brokers – These are servers that store data and serve it to clients. Each broker holds a subset of the partitions for the cluster’s topics.

Topics – These are categories or feeds that are used to organize data. "user_activity" or "financial_transactions" are examples of topics.

Partitions – Each topic is divided into different partitions, which allows for parallel processing.

ZooKeeper (or KRaft, Kafka’s Raft-based replacement) – This manages cluster metadata and coordinates brokers.

Pro tip: Look for candidates who can describe how Kafka enables real-time data feeding and stream processing.

2. What is a topic in Kafka?

A topic is a category or feed name to which records are published. Topics are partitioned and log-based, which enables the distribution and parallel processing of data across multiple brokers.

For example, a social media app might publish actions such as likes, shares, and comments to separate Kafka topics, ensuring scalability and efficient processing.

3. What is a partition in a Kafka topic?

Partitions are subsections of a topic where data is stored. Partitions enable Kafka to scale horizontally and support multiple consumers by dividing the data across different brokers. This helps with fault tolerance and scalability.

For example, let’s say you have a “website traffic” topic with one million visitors a day, and you set up ten partitions across five brokers. This spreads the data so that no single broker holds all of it, and with a replication factor above one, each partition is also copied to other brokers. The consumers in a consumer group then process the partitions concurrently, while replication ensures no data is lost if a broker goes down.
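To make the ten-partitions-across-five-brokers example concrete, here is a minimal Python sketch of how a record key could be mapped to a partition. It rests on assumptions: CRC32 stands in for the hash (Kafka’s Java client uses a murmur2 hash of the key bytes, so real partition numbers will differ), and the `leader_for` helper is a hypothetical even spread, not how Kafka actually places leaders.

```python
import zlib

NUM_PARTITIONS = 10
NUM_BROKERS = 5


def choose_partition(key: str, num_partitions: int) -> int:
    """Map a record key to a partition index.

    Assumption: CRC32 is a stand-in hash; real Kafka clients use murmur2.
    """
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def leader_for(partition: int, num_brokers: int) -> int:
    """Hypothetical even spread: partition p is led by broker p mod N."""
    return partition % num_brokers


for visitor in ["visitor-1", "visitor-2", "visitor-3"]:
    p = choose_partition(visitor, NUM_PARTITIONS)
    print(f"{visitor} -> partition {p} (leader: broker {leader_for(p, NUM_BROKERS)})")
```

Because the same key always hashes to the same partition, all events for one visitor stay in order within that partition.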

4. What are producers and consumers in Kafka?

Producers – These are applications that publish data to Kafka topics. They’re responsible for serializing data into bytes (using a format such as JSON or Avro) so Kafka can store it, and for sending it to the appropriate topic. For example, a mobile app may send user location data to a Kafka topic.

Consumers – These are applications that subscribe to one or more topics and then process the data. For instance, a real-time fraud detection system might have consumers read transaction data to spot suspicious patterns.

Consumer groups – These allow multiple consumers to share a workload. Each partition is read by only one consumer in the group, so the group’s members process different partitions in parallel.
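As a rough illustration of how a consumer group might share partitions, the sketch below splits a topic’s partitions into contiguous ranges, one per consumer. It follows the general idea of a range-style assignment and is an assumption-laden simplification: the function name and consumer IDs are hypothetical, and real Kafka assignors also handle multiple topics and rebalances.

```python
from typing import Dict, List


def assign_partitions(partitions: List[int], consumers: List[str]) -> Dict[str, List[int]]:
    """Split partitions into contiguous ranges, one range per consumer."""
    consumers = sorted(consumers)
    partitions = sorted(partitions)
    per_consumer, extra = divmod(len(partitions), len(consumers))
    assignment: Dict[str, List[int]] = {}
    start = 0
    for i, consumer in enumerate(consumers):
        # The first `extra` consumers take one additional partition each.
        count = per_consumer + (1 if i < extra else 0)
        assignment[consumer] = partitions[start:start + count]
        start += count
    return assignment


print(assign_partitions(list(range(10)), ["c1", "c2", "c3"]))
# {'c1': [0, 1, 2, 3], 'c2': [4, 5, 6], 'c3': [7, 8, 9]}
```

If there are more consumers than partitions, the extra consumers receive an empty list and sit idle, which is why adding consumers beyond the partition count does not increase parallelism.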

5. What is a Kafka broker?

A broker is a server in the Kafka cluster that stores data and serves client requests.

Brokers are responsible for:

Data storage, or holding data on topic partitions

Handling requests from producers to write data and consumers to read data

Hosting the partitions across which a topic’s messages are distributed (the producer chooses the partition for each message)

Replication, which ensures fault tolerance by replicating partitions across multiple brokers.

For example, say a bank stores transaction logs across multiple brokers using a Kafka cluster. It replicates the data across multiple brokers so if one fails due to a hardware issue, the data isn’t lost.

6. What are ISRs in Kafka?

ISRs (short for in-sync replicas) are the replicas of a Kafka partition that are fully caught up with the leader. The leader itself always counts as part of the ISR set.

They’re critical for ensuring data durability and consistency. If a leader fails, one of the ISRs can become the new leader.

For example, a stock trading platform might keep trade execution logs consistently available by maintaining at least three in-sync replicas per partition.
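The following Python sketch models the idea in a simplified way: a follower stays in the ISR set only while it has caught up with the leader recently enough, and a new leader is picked from the surviving in-sync replicas. The `Replica` class, both function names, and the 30-second limit are illustrative assumptions; on a real broker the limit is governed by the replica.lag.time.max.ms setting.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Replica:
    broker_id: int
    ms_since_caught_up: int  # time since this follower last matched the leader's log end


def in_sync_replicas(leader_id: int, followers: List[Replica], max_lag_ms: int) -> List[int]:
    """Return broker IDs in the ISR: the leader plus followers within the lag limit."""
    isr = [leader_id]
    isr += [r.broker_id for r in followers if r.ms_since_caught_up <= max_lag_ms]
    return isr


def elect_leader(isr: List[int], failed_broker: int) -> Optional[int]:
    """Pick the first surviving in-sync replica, or None if none remain."""
    survivors = [b for b in isr if b != failed_broker]
    return survivors[0] if survivors else None


followers = [Replica(broker_id=2, ms_since_caught_up=500),
             Replica(broker_id=3, ms_since_caught_up=45_000)]
isr = in_sync_replicas(leader_id=1, followers=followers, max_lag_ms=30_000)
print(isr)                                   # [1, 2]
print(elect_leader(isr, failed_broker=1))    # 2
```

Broker 3 has fallen too far behind, so it is excluded from the ISR and is not a candidate when broker 1 fails.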

7. How does partitioning work in Kafka?

Partitioning involves the division of topics into multiple segments across brokers to:

Enable parallel processing – Enables multiple consumers to read data at the same time

Increase throughput – Distributes the load for scalability

Enhance fault tolerance – Ensures resilience through replication

Pro tip: Strong candidates will explain how Kafka assigns partitions to consumers in a group and how partitions ensure load balancing within a cluster.

8. How does Kafka ensure message durability?

Kafka uses several tools to ensure message durability:

Replication – Partitions are replicated across multiple brokers, and copies are stored on different servers to limit lost data.

Commit log – Each partition is an append-only log stored on the broker’s disk. Messages are appended to this log as they arrive, and because flushing to disk happens asynchronously by default, Kafka relies on replication to recover messages if a broker crashes before a flush.

Retention policies – Administrators control how long data is kept (for example, log.retention.hours) and how much is kept (log.retention.bytes). Messages stay on disk until these limits are reached, whether or not they’ve been consumed.

9. Describe an instance where Kafka might lose data and how you would prevent it.

Kafka can lose data in rare situations. Here’s how it happens – and how to stop it.

Risks:

Unclean Leader Elections – A lagging replica becomes leader, missing data.

Broker Failures – Hardware dies before replication finishes.

Config Errors – Weak settings (for example, acks=0 or acks=1) or incorrect topic configurations skip safety checks.

Prevention:

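Replication factor: Set to 3+ for redundant copies.

min.insync.replicas: Require 2+ in-sync replicas before acknowledging writes.

acks=all: Have the producer wait until all in-sync replicas have the message before treating the write as successful.

unclean.leader.election.enable=false: Prevent an out-of-sync replica from becoming leader.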

Backups: Snapshot data regularly.

Monitoring: Watch for replica lag or broker downtime.
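To see how the replication factor, the minimum in-sync replicas setting, and acks=all interact, here is a small Python sketch. It is a simplified model rather than broker code, and both function names are hypothetical.

```python
def write_accepted(acks: str, isr_size: int, min_insync_replicas: int) -> bool:
    """Decide whether a produce request would be accepted in this simplified model."""
    if acks == "all":
        # With acks=all, the write fails unless enough replicas are in sync.
        return isr_size >= min_insync_replicas
    # With acks=0 or acks=1, the min.insync.replicas check is not enforced;
    # the write only needs a live leader.
    return isr_size >= 1


def tolerated_failures(replication_factor: int, min_insync_replicas: int) -> int:
    """Brokers that can fail while acks=all writes are still accepted."""
    return replication_factor - min_insync_replicas


print(write_accepted("all", isr_size=3, min_insync_replicas=2))  # True
print(write_accepted("all", isr_size=1, min_insync_replicas=2))  # False
print(write_accepted("1", isr_size=1, min_insync_replicas=2))    # True
print(tolerated_failures(replication_factor=3, min_insync_replicas=2))  # 1
```

The third call shows the risk: with acks=1, a write is acknowledged even though only one replica holds it, so losing that broker loses the message.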

10. What is the role of the server.properties file?

The server.properties file is the primary configuration file for a Kafka broker. It includes settings related to the broker, network, logs, replication, and other operational parameters.

Pro tip: Look for candidates who mention specific configurable properties that are crucial for setting up and managing Kafka brokers, including:

broker.id – Unique ID for each broker

log.dirs – Storage location

zookeeper.connect – ZooKeeper connection string, which tells the broker where cluster metadata is managed (ZooKeeper mode only)
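As an illustration of what these properties look like in practice, the Python sketch below parses a small sample file and checks that required keys are present. The sample values (paths and host names) are hypothetical, and the parser is a simplification that handles only plain key=value lines and comments, not the full Java properties format.

```python
from typing import Dict

# Hypothetical sample content for a single broker in ZooKeeper mode.
SAMPLE = """\
# Unique ID for this broker
broker.id=1
# Where partition data is stored
log.dirs=/data/kafka
# ZooKeeper ensemble to connect to
zookeeper.connect=zk1:2181,zk2:2181,zk3:2181
"""


def parse_properties(text: str) -> Dict[str, str]:
    """Parse simple key=value lines, skipping blanks and comments."""
    props: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


REQUIRED = ["broker.id", "log.dirs"]
props = parse_properties(SAMPLE)
missing = [key for key in REQUIRED if key not in props]
print(props["broker.id"], missing)  # 1 []
```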

11. How do you install Kafka on a server?

To install Kafka on a server, an engineer would need to:

Download Kafka – Download the latest Kafka release from the official website.

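Configure ZooKeeper – If you're using ZooKeeper for coordination, you must have a running ZooKeeper ensemble. Configure the zookeeper.connect property in the Kafka server configuration file (server.properties) to point to your ZooKeeper ensemble. Note that ZooKeeper mode is deprecated in recent releases and removed in Kafka 4.0; newer clusters use KRaft instead, which needs no separate ZooKeeper ensemble.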

Configure Kafka – Modify the server.properties file and adjust to your required settings.

Start the Broker – Execute the kafka-server-start.sh script (or kafka-server-start.bat on Windows) to start the Kafka broker.

For example, on a Linux server, you’d download Kafka 3.6 from the Apache site, extract the archive, and make sure ZooKeeper is running (e.g., bin/zookeeper-server-start.sh config/zookeeper.properties). Then, you’d edit config/server.properties to set broker.id=1 and log.dirs=/data/kafka, and start Kafka with bin/kafka-server-start.sh config/server.properties. If it fails, check the logs for Java heap issues.

12. How would you secure a Kafka cluster?

Top candidates would use multiple layers of security and strategies, such as:

Encryption:

SSL/TLS for encryption of data in transit

Authentication:

SASL/SCRAM for authentication

A Kerberos integration

OAuth mechanisms

Authorization:

Network policies for controlling access to the Kafka cluster

ACLs (Access Control Lists) for authorizing actions by users or groups on specific topics

For example, a financial institution might use TLS and ACLs to prevent unauthorized access to sensitive customer data.
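To show how ACL-based authorization can be reasoned about, here is a toy Python model. It assumes two rules that match Kafka’s documented behavior: a matching Deny entry overrides any Allow, and a request with no matching Allow is rejected. The `Acl` class, the principals, and the topic name are hypothetical, and wildcard or prefixed resource patterns are left out.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Acl:
    principal: str   # e.g. "User:analytics"
    operation: str   # e.g. "Read" or "Write"
    topic: str
    permission: str  # "Allow" or "Deny"


def is_authorized(acls: List[Acl], principal: str, operation: str, topic: str) -> bool:
    """Deny wins over Allow; with no matching Allow, access is refused."""
    matching = [a for a in acls
                if a.principal == principal and a.operation == operation and a.topic == topic]
    if any(a.permission == "Deny" for a in matching):
        return False
    return any(a.permission == "Allow" for a in matching)


acls = [
    Acl("User:analytics", "Read", "transactions", "Allow"),
    Acl("User:intern", "Read", "transactions", "Allow"),
    Acl("User:intern", "Read", "transactions", "Deny"),
]
print(is_authorized(acls, "User:analytics", "Read", "transactions"))   # True
print(is_authorized(acls, "User:intern", "Read", "transactions"))      # False
print(is_authorized(acls, "User:analytics", "Write", "transactions"))  # False
```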

13. How do you monitor the health of a Kafka cluster?

Here are some ways to monitor the health of clusters:

Checking broker states

Verifying consumer group status

Assessing replication status (for example, under-replicated partitions)

Checking logs for errors

Using JMX (Java Management Extensions) to monitor performance metrics

Pro tip: Top applicants will know how to use the Kafka command-line tools such as kafka-topics.sh to view topic details and kafka-consumer-groups.sh to get consumer group information.
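One number that the consumer group tooling reports per partition is lag: the gap between the latest offset in the log and the offset the group has committed. The Python sketch below computes it from hypothetical offsets; the alert threshold of 100 messages is an arbitrary assumption.

```python
from typing import Dict


def consumer_lag(log_end_offsets: Dict[int, int], committed_offsets: Dict[int, int]) -> Dict[int, int]:
    """Lag per partition = log end offset minus the group's committed offset."""
    return {p: end - committed_offsets.get(p, 0) for p, end in log_end_offsets.items()}


# Hypothetical offsets for a three-partition topic.
log_end = {0: 1500, 1: 1480, 2: 1510}
committed = {0: 1500, 1: 1200, 2: 1505}

lag = consumer_lag(log_end, committed)
print(lag)                # {0: 0, 1: 280, 2: 5}
print(sum(lag.values()))  # 285

LAG_ALERT_THRESHOLD = 100  # assumed threshold for this example
print([p for p, value in lag.items() if value > LAG_ALERT_THRESHOLD])  # [1]
```

Lag that keeps growing on one partition usually points to a slow or stuck consumer rather than a broker problem.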

14. What tools can you use for Kafka monitoring?

Skilled candidates will mention several tools, including:

Kafka's own JMX metrics for in-depth monitoring at the JVM level

Prometheus with Grafana for visualizing metrics

Elastic Stack (Elasticsearch, Logstash, Kibana) for log aggregation and analysis

Datadog, New Relic, or Dynatrace for integrated cloud monitoring; these commercial platforms are used mainly for dashboards and anomaly detection

For example, a music streaming service might use JMX to monitor broker latency, Prometheus to scrape metrics every ten seconds, and Grafana to graph “songs-played” topic throughput. If a broker slows down, Kibana’s log view (via Elastic Stack) can help pinpoint the cause, such as a full disk, so the team can be alerted.

Pro tip: Use our Elasticsearch test to evaluate candidates’ proficiency with Elasticsearch.

15. How would you upgrade a Kafka cluster with minimal downtime?

Strong candidates will describe their hands-on experience with Kafka. Here’s what their answers might include:

Perform a rolling upgrade – Stop brokers gracefully, updating them one at a time to prevent downtime.

Back up configurations and data before upgrading – Ensure all data and metadata are backed up safely.

Test the upgrade in a staging environment before deploying.

Monitor cluster performance closely during the upgrading process.

Pro tip: Look for candidates with a strong understanding of version compatibility and configuration changes (e.g., 2.8 to 3.0 shifts) between Kafka versions.

16. Explain the concept of Kafka MirrorMaker.

Kafka MirrorMaker is a tool used for cross-cluster data replication. It enables data mirroring between two Kafka clusters. The primary applications of MirrorMaker include:

Disaster recovery, where data is backed up in another cluster

Geo-replication, which ensures low-latency access for users in different locations.

MirrorMaker works by using consumer and producer configurations to pull data from a source cluster and push it to a destination cluster.

For example, a global video streaming service might use MirrorMaker to synchronize content across multiple countries and ensure a seamless user experience.
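The consume-then-produce loop described above can be sketched in plain Python, with dictionaries standing in for the two clusters. This is a toy model under stated assumptions: the `mirror` function is hypothetical, and prefixing the mirrored topic with the source cluster’s alias follows the default naming convention of MirrorMaker 2.

```python
from collections import defaultdict
from typing import Dict, List


def mirror(source: Dict[str, List[str]], target: Dict[str, List[str]],
           source_alias: str, positions: Dict[str, int]) -> int:
    """Copy records not yet mirrored from each source topic to the target cluster."""
    copied = 0
    for topic, records in source.items():
        remote_topic = f"{source_alias}.{topic}"  # e.g. "us.videos"
        start = positions.get(topic, 0)           # resume where the last run stopped
        for record in records[start:]:
            target[remote_topic].append(record)
            copied += 1
        positions[topic] = len(records)
    return copied


source = {"videos": ["v1", "v2"]}
target = defaultdict(list)
positions: Dict[str, int] = {}

print(mirror(source, target, "us", positions))  # 2
source["videos"].append("v3")
print(mirror(source, target, "us", positions))  # 1
print(dict(target))  # {'us.videos': ['v1', 'v2', 'v3']}
```

Tracking the position per topic is what keeps a second run from copying the same records again.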

17. What is exactly-once processing in Kafka?

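Exactly-once processing means that each message’s effect is applied exactly once, even if a producer retries or a broker fails. Here are the key components of this feature: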

No duplicate messages – Prevents multiple processing of the same message

No data loss, even in failure scenarios – Ensures messages are never lost

Transactional guarantees, which use Kafka’s transactional APIs for:

Idempotent Producers

Transactional Consumers (consumers that read only committed messages, using isolation.level=read_committed)

Transaction Coordinator

For example, a payments app might use exactly-once processing to prevent duplicate transactions from being logged.
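The idempotent-producer part of this can be illustrated with a short Python sketch. It assumes a simplified version of the mechanism: the broker remembers the last sequence number it accepted from each producer and ignores a retry that repeats it. The `PartitionLog` class is hypothetical, and real brokers also track sequences per partition and reject out-of-order gaps.

```python
from typing import Dict, List


class PartitionLog:
    """Toy partition that de-duplicates retried writes by sequence number."""

    def __init__(self) -> None:
        self.records: List[str] = []
        self.last_sequence: Dict[int, int] = {}  # producer_id -> last accepted sequence

    def append(self, producer_id: int, sequence: int, value: str) -> bool:
        """Append the record unless this producer already wrote this sequence."""
        if sequence <= self.last_sequence.get(producer_id, -1):
            return False  # duplicate caused by a retry: ignore it
        self.records.append(value)
        self.last_sequence[producer_id] = sequence
        return True


log = PartitionLog()
log.append(producer_id=7, sequence=0, value="charge $10")
log.append(producer_id=7, sequence=1, value="charge $25")
log.append(producer_id=7, sequence=1, value="charge $25")  # retry after a lost acknowledgment
print(log.records)  # ['charge $10', 'charge $25']
```

Without the sequence check, the retried write would log the $25 charge twice.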

18. What might cause latency issues in Kafka?

There are several potential causes of latency, such as:

High volume of network traffic or inadequate network hardware

Disk I/O bottlenecks due to high throughput or slow disks

Large batch sizes or infrequent commits causing delays

Inefficient consumer configurations or slow processing of messages

Pro tip: Look for candidates who can explain how they’d diagnose and mitigate these issues, such as by adjusting configurations and upgrading hardware.

19. How can you reduce disk usage in Kafka?

Some of the best ways to reduce disk usage are to:

Adjust log retention settings to keep messages for a shorter duration

Use log compaction to only retain the last message for each key

Configure message cleanup policies effectively

Compress messages before sending them to Kafka
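Log compaction is the least intuitive of these options, so here is a minimal Python sketch of its effect: only the newest record for each key survives, and a record with an empty value (a tombstone) removes the key. The `compact` function and the sample keys are hypothetical, and the sketch ignores details of real compaction, such as tombstones being kept for a while before removal.

```python
from typing import List, Optional, Tuple

Record = Tuple[str, Optional[str]]  # (key, value); a value of None is a tombstone


def compact(log: List[Record]) -> List[Tuple[int, str, str]]:
    """Keep the latest record per key, in offset order, dropping deleted keys."""
    latest = {}
    for offset, (key, value) in enumerate(log):
        latest[key] = (offset, value)  # later offsets overwrite earlier ones
    survivors = [(offset, key, value)
                 for key, (offset, value) in latest.items()
                 if value is not None]
    return sorted(survivors)


log = [
    ("user-1", "v1"),
    ("user-2", "v1"),
    ("user-1", "v2"),
    ("user-3", "v1"),
    ("user-2", None),  # tombstone: delete user-2
]
print(compact(log))  # [(2, 'user-1', 'v2'), (3, 'user-3', 'v1')]
```

Five records shrink to two, while the latest state of every remaining key is preserved.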

20. What are some best practices for scaling a Kafka deployment?

To scale a deployment successfully, developers should:

Size and partition topics to distribute load evenly across the cluster

Use adequate hardware to support the intended load

Monitor performance and adjust configurations as necessary

Use replication to improve availability and fault tolerance

Use Kafka Streams or Kafka Connect for integrating and processing data at scale

21. What are the implications of increasing the number of partitions in a Kafka topic?

Increasing partitions can improve concurrency and throughput but also has its downsides, as it might:

Increase overhead on the cluster due to more open file handles and additional replication traffic

Lead to possible imbalance in data distribution

Lead to longer rebalancing times and make managing consumer groups more difficult.

Pro tip: Strong candidates will know that careful planning and testing before altering partitions is key.
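One concrete reason planning matters: when messages are keyed, the partition is typically chosen from a hash of the key modulo the partition count, so changing the count sends many existing keys to a different partition. The Python sketch below counts how many keys move when a topic grows from 6 to 12 partitions. As an assumption, CRC32 stands in for the hash that real Kafka clients use, and the keys are made up.

```python
import zlib


def partition_for(key: str, num_partitions: int) -> int:
    # Assumption: CRC32 is a stand-in for the hash used by real Kafka clients.
    return zlib.crc32(key.encode("utf-8")) % num_partitions


keys = [f"user-{i}" for i in range(1000)]
moved = sum(1 for k in keys if partition_for(k, 6) != partition_for(k, 12))
print(f"{moved} of {len(keys)} keys map to a different partition after 6 -> 12")
```

Records written for a moved key before the change stay in the old partition, so ordering for that key is no longer guaranteed across the change.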

22. How do you reassign partitions in Kafka?

Reassigning partitions involves:

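Using kafka-reassign-partitions.sh with --generate to propose a reassignment plan

Executing the plan from the reassignment JSON file with --execute

Confirming with --verify that the reassignment finished and partitions are balanced across brokers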
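For reference, the reassignment JSON file lists each partition with the brokers that should hold its replicas. The Python sketch below builds such a file by hand with a simple round-robin placement. The topic name, broker IDs, and the `build_reassignment` helper are hypothetical, and in practice the tool can generate the plan for you.

```python
import json
from typing import Dict, List


def build_reassignment(topic: str, num_partitions: int,
                       brokers: List[int], replication_factor: int) -> Dict:
    """Spread replicas round-robin so each broker gets a similar share."""
    partitions = []
    for p in range(num_partitions):
        replicas = [brokers[(p + i) % len(brokers)] for i in range(replication_factor)]
        partitions.append({"topic": topic, "partition": p, "replicas": replicas})
    return {"version": 1, "partitions": partitions}


plan = build_reassignment("website-traffic", num_partitions=4,
                          brokers=[1, 2, 3], replication_factor=2)
print(json.dumps(plan, indent=2))
```

The first broker in each replicas list is the preferred leader, so rotating the starting broker also spreads leadership.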

23. What are some security risks when working with Kafka?

Some of Kafka’s security risks are:

Unauthorized data access

Data tampering

Service disruption

Pro tip: Take this question further by asking candidates how to mitigate these risks. They should be able to tell you that it’s essential to secure network access to Kafka, protect data at rest and in transit, and put in place robust authentication and authorization procedures.

24. How does Kafka support GDPR compliance?

Kafka can support GDPR compliance through its:

Data encryption in transit using SSL/TLS, combined with encryption at rest, which is typically provided by disk or filesystem encryption rather than by Kafka itself

Ability to handle data retention policies

Deletion capabilities that can be used to comply with GDPR’s right to erasure

Logging and auditing features to track data access and modifications

Pro tip: Need to evaluate candidates’ GDPR knowledge and their ability to handle sensitive data? Use our GDPR and Privacy test.

25. What authentication mechanisms can you use in Kafka?

Kafka supports:

SSL/TLS for encrypting data and optionally authenticating clients using certificates

SASL (Simple Authentication and Security Layer) which supports mechanisms like GSSAPI (Kerberos), PLAIN, and SCRAM to secure Kafka brokers against unauthorized access

Integration with enterprise authentication systems like LDAP (typically through custom callback handlers or vendor extensions rather than a built-in mechanism)

26. How can you use Kafka’s quota feature to control client traffic?

Kafka quotas can be set to limit the byte rate for producing and consuming messages, which prevents the overloading of Kafka brokers by aggressive clients.

There are three types of quotas:

Producer Quotas – Limits the rate at which a producer sends messages

Consumer Quotas – Restricts how quickly consumers can fetch data.

Replication Quotas – Controls the bandwidth used for replicating data across brokers.

Pro tip: Candidates might also discuss setting quotas at the broker level to manage bandwidth and storage and explain how this can help maintain the Kafka cluster’s stability.
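To give a feel for how a byte-rate quota turns into throttling, the Python sketch below computes how long a client would be delayed after exceeding its quota. The formula is a simplified form of the delay calculation described in Kafka’s quota design and should be treated as an assumption, as should the function name and the sample numbers.

```python
def throttle_time_ms(observed_bytes_per_sec: float,
                     quota_bytes_per_sec: float,
                     window_ms: float) -> float:
    """Delay needed to bring the client's average rate back down to the quota."""
    if observed_bytes_per_sec <= quota_bytes_per_sec:
        return 0.0
    overshoot = observed_bytes_per_sec - quota_bytes_per_sec
    return overshoot / quota_bytes_per_sec * window_ms


# A producer with a 1 MB/s quota, measured over a 10-second window.
print(throttle_time_ms(800_000, 1_000_000, 10_000))    # 0.0
print(throttle_time_ms(1_500_000, 1_000_000, 10_000))  # 5000.0
```

A client that is 50% over its quota is held back for half the measurement window, which slows it down without returning an error.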

40 more Kafka interview questions you can ask candidates

Looking for more questions you can ask candidates to evaluate their proficiency in Apache Kafka?

Below, you can find 40 more questions you can use during interviews, ranging from easy (at the top) to more challenging (toward the bottom of the list).

How do you use Kafka with Spark?

What are some common mistakes developers make with Kafka?

What are some alternatives to Kafka?

Explain the role of ZooKeeper in Kafka.

What is a Consumer Group in Kafka?

What are offsets in Kafka?

How do you configure a Kafka producer?

How do you configure a Kafka consumer?

How does Kafka Connect work?

How would you handle Kafka’s logs?

How does Kafka handle failover?

What is log compaction in Kafka?

How can you optimize Kafka throughput?

How do you handle large messages in Kafka?

How do you troubleshoot network issues in Kafka?

How would you handle an instance where consumers are slower than producers?

What steps would you take if your Kafka cluster unexpectedly goes down?

How would you retrieve old messages in Kafka?

How do you ensure message order in a Kafka topic?

How do you manage offsets for a consumer in Kafka?

How do you produce and consume messages to Kafka using the Java API?

What are some other languages that Kafka supports?

How do you use Kafka with Hadoop?

Explain how transactions are handled in Kafka.

How do you implement idempotence in Kafka?

What is the role of timestamps in Kafka messages?

How do you encrypt data in Kafka?

How do you audit data access in Kafka?

What is SASL/SCRAM in Kafka?

Explain how to configure TLS for Kafka.

How do you protect sensitive data in Kafka?

What are the best practices for Kafka data modeling?

How do you handle data retention in Kafka?

What are the limitations of Kafka Streams?

How does Kafka fit into a microservices architecture?

Explain the role of Kafka in a cloud environment.

How do you back up Kafka data?

What is a dead letter queue in Kafka?

How do you use Kafka for event sourcing?

Can you use Kafka for batch processing?

If you need more ideas, check out our Spark interview questions and our data engineer interview questions.

Use the right skills assessments to hire top Kafka talent

Assessing applicants’ Kafka skills quickly and reliably is essential for making the right hire. For this, you need to be equipped with the right skills tests and interview questions.

This way, you’re not leaving anything to chance and can be sure that the person you hire will have what it takes to contribute to your big data projects.

To chat with one of our experts and find out whether TestGorilla is the right platform for you, simply sign up for a free demo. Or, if you prefer to test our tests yourself (pun intended), check out our free forever plan.
