Revisiting the validity of different hiring tools: New insights into what works best - Part 2

Science series materials are brought to you by TestGorilla’s Assessment Team: A group of IO psychology, data science, psychometric experts and assessment development specialists with a deep understanding of the science behind skills-based hiring.

Predicting the future is a difficult task, usually done only by skilled oracles, recruiters, and hiring managers. You read that right. Employers looking to hire top candidates are aiming to predict the future success of a candidate in their role. While there are no fortune tellers to help you make the right hire, there is something even better – strong, empirical science.

In our previous blog post on the criterion-related validity of different hiring tools, we reported average validity coefficients calculated from numerous studies and discussed what the results tell us about the effectiveness of these tools and what that means for your hiring process.

In this blog post, we’ll dive deeper into the science and explain how you can use these insights to improve your hiring process.

Table of contents

- Is there more to average validity coefficients?

- How is this nuance communicated in a study?

- How can you take this nuance into account in your hiring process?

- How can you deal with this uncertainty?

- 5 ways to maximize the validity of your hiring process

- Understanding the validity of your hiring tools will help you make better hiring decisions

- Sources

Is there more to average validity coefficients?

While very useful, average validity coefficients do not capture the variability of the results found by individual studies. For instance, the mean validity of .42 found for structured interviews by Sackett et al. (2022) is the average of the validity coefficient values reported by different studies included in their meta-analysis. A meta-analysis is a super-study that summarizes the results of tens or hundreds of different studies. Some of the studies analyzed in the paper reported validity coefficients higher than the average, and some reported values lower than the average.

A credibility interval is useful in efficiently summarizing this variability between studies. The credibility interval is a range of values between an upper and lower bound that covers the majority of the results of the studies included in a meta-analysis. An 80% credibility interval captures 80% of the values around the average observed from many different studies.

An example can help show how these credibility intervals offer a more comprehensive picture. Imagine you're trying to find out how tall people are in your city. You can make some measurements and calculate that the average height is 5'7". This value would correspond to the average value reported in a meta-analytic study.

To get a more inclusive picture, you can conduct additional analyses and conclude that 80% of people are between 5'2" and 6'2" tall. This range would correspond to the 80% credibility interval reported in the meta-analytic study. By including both this interval and the average value, you’re able to get a much more precise and nuanced picture.

How is this nuance communicated in a study?

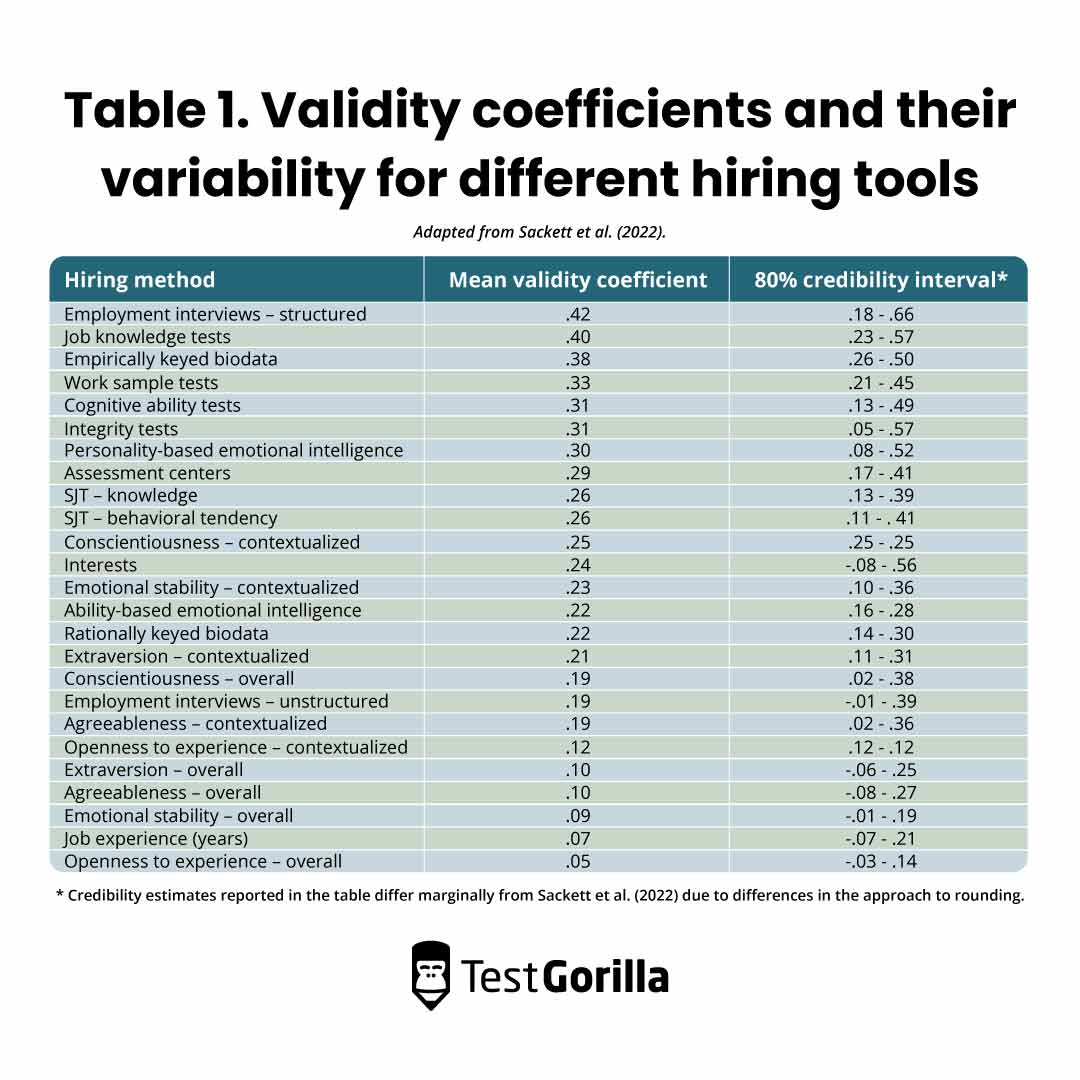

Similar to our example, Sackett et al. (2022) reported both the average values and the 80% credibility intervals to provide a nuanced picture of the validity coefficients of different hiring tools. Table 1 shows the validity coefficients found by Sackett and colleagues, along with their 80% credibility intervals.

For example, structured interviews, which are at the top of the list with a mean validity of .42, have an 80% credibility interval ranging from .18 to .66. Thus, the validity of structured interviews should really be read as “the validity coefficient is .42, plus or minus .24”. This conveys a different message than simply “the validity coefficient is .42”.
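The “plus or minus .24” arithmetic can be sketched in a few lines of Python. This is a minimal illustration, not the authors' procedure: it assumes validities are roughly normally distributed across studies, in which case an 80% interval spans about 1.28 standard deviations on either side of the mean. The function names are hypothetical, and the standard deviation is back-calculated from the reported interval rather than taken from the paper.

```python
from statistics import NormalDist


def z_for_level(level):
    """Two-sided standard-normal multiplier (about 1.28 for an 80% interval)."""
    return NormalDist().inv_cdf(0.5 + level / 2)


def implied_sd(lower, upper, level=0.80):
    """Back-calculate the between-study SD implied by an interval (assumes normality)."""
    return (upper - lower) / (2 * z_for_level(level))


def credibility_interval(mean_validity, sd_validity, level=0.80):
    """Return (lower, upper) bounds as mean +/- z * SD (assumes normality)."""
    half_width = z_for_level(level) * sd_validity
    return mean_validity - half_width, mean_validity + half_width


# Values reported in the text for structured interviews
mean_validity = 0.42
reported_lower, reported_upper = 0.18, 0.66

sd = implied_sd(reported_lower, reported_upper)
lower, upper = credibility_interval(mean_validity, sd)

print(f"half-width: {(reported_upper - reported_lower) / 2:.2f}")
print(f"implied SD: {sd:.3f}")
print(f"80% credibility interval: {lower:.2f} to {upper:.2f}")
```

Under this assumption, the reported interval implies a standard deviation of roughly .19 across studies, which is large relative to a mean of .42.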

How can you take this nuance into account in your hiring process?

Large variability around the average, indicated by a wide credibility interval, usually means uncertainty. The wider the credibility interval, the less certain we can be about the exact validity coefficient of a hiring tool in our context. The organizational context, the nature of the role, and the overall goal of the selection process can all affect the exact validity coefficient in your application of the hiring tool.

Take, for example, structured interviews. This hiring tool has an 80% credibility interval between .18 and .66, with an average estimate of .42. To better understand the variability of the estimate, it pays to consider this hiring tool closely.

A single structured interview can cover multiple skills and competencies, ranging from general ones like communication or command of English to role-specific skills like negotiation. When skills scored during the interview are similar to the skills needed for success in a role, it is more likely that this hiring tool will reach above-average validity estimates. Moreover, the validity of structured interviews is also likely to vary depending on how well the interview has been designed and implemented (how the questions are formulated, how interviews are scored, how well interviewers are trained, etc.).

Another good example of the importance of context is personality tests, specifically those that assess the Conscientiousness (C) trait from the OCEAN personality test.

Looking at Table 1, you can see that researchers report results for two different measures of this trait: overall and contextualized. An overall measure of personality assesses candidates’ personality in general, while a contextualized measure assesses candidates’ personality at work. By introducing this distinction, Sackett et al. (2022) were able to narrow the credibility intervals so much that the contextualized measure of Conscientiousness has an average validity of .25 – with both the lower and the upper bound of the 80% credibility interval sitting at .25 as well.

Other researchers have shown that the validity coefficient of Conscientiousness can change depending on the complexity of the role a person is hired for. For example, in roles with high complexity, Conscientiousness is less important than in roles with moderate or low complexity.

The take-home message is that while these mean meta-analytic validity coefficients can provide general insights into the efficacy of different hiring tools, there is rarely a one-size-fits-all approach to hiring. You should mix and match hiring tools to balance each tool's benefits for the specific role and context you are hiring for.

How can you deal with this uncertainty?

You can take a few steps to deal with this uncertainty in the hiring process.

First, when comparing different hiring methods, pay attention to both the lower end of the 80% credibility interval and the average validity coefficient. This approach can help you identify predictors that have similar validities in different contexts or those with lower downside risk.

For example, Sackett and colleagues found that structured interviews have a higher mean validity than empirically keyed biodata (.42 versus .38). However, biodata has a higher value at the lower end of its credibility interval (.26 versus .18). A risk-averse employer might prefer the predictor with less downside risk, and thus focus on the lower-end credibility value when identifying potential predictors.
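As a small sketch of this comparison, the snippet below ranks the two predictors once by mean validity and once by the lower end of the 80% credibility interval. It uses only the four values quoted above. The dictionary layout and the `rank_by` helper are illustrative assumptions, not part of any published method.

```python
# Values quoted in the text from Sackett et al. (2022)
predictors = {
    "structured interviews": {"mean": 0.42, "lower_80": 0.18},
    "empirically keyed biodata": {"mean": 0.38, "lower_80": 0.26},
}


def rank_by(key):
    """Return predictor names sorted from highest to lowest on the given key."""
    return sorted(predictors, key=lambda name: predictors[name][key], reverse=True)


by_mean = rank_by("mean")       # ranking an employer focused on average validity would use
by_lower = rank_by("lower_80")  # ranking a risk-averse employer might prefer

print("By mean validity:     ", by_mean)
print("By lower 80% bound:   ", by_lower)
```

The two rankings disagree, which is the point of the example: the preferred tool depends on whether you weigh the expected validity or the downside risk more heavily.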

Second, you can run a criterion-related validation study yourself to examine the relationship between the hiring tools you use and relevant job criteria for specific jobs or job families. Such a study can provide you with data-driven insights that are directly applicable to your specific situation. However, large sample sizes are needed to get meaningful insights from such a study (read more here). Organizations that don’t have a large number of hires in a position would be unable to do this type of study. To help all our customers, TestGorilla has a range of ongoing criterion-related validity initiatives that include small and large organizations. You can learn more about the opportunity here.
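To see why sample size matters so much for a local validation study, the sketch below computes an approximate 95% confidence interval for an observed correlation using the standard Fisher z-transformation. Note that this is a confidence interval, which reflects sampling error in a single study, and is a different quantity from the credibility intervals discussed earlier. The observed correlation of .42 and the sample sizes are hypothetical values chosen for illustration, and the function name is an assumption.

```python
import math
from statistics import NormalDist


def correlation_ci(r, n, level=0.95):
    """Approximate confidence interval for a Pearson correlation via Fisher's z."""
    if n <= 3:
        raise ValueError("Fisher's z interval needs more than 3 observations")
    z = math.atanh(r)                      # Fisher z-transform of r
    se = 1 / math.sqrt(n - 3)              # standard error on the z scale
    crit = NormalDist().inv_cdf(0.5 + level / 2)
    return math.tanh(z - crit * se), math.tanh(z + crit * se)


# Hypothetical scenario: the same observed correlation at different sample sizes
observed_r = 0.42
intervals = {n: correlation_ci(observed_r, n) for n in (30, 100, 500)}

for n, (low, high) in intervals.items():
    print(f"n = {n:>3}: 95% CI from {low:.2f} to {high:.2f} (width {high - low:.2f})")
```

With a few dozen hires, the interval is so wide that the study cannot distinguish a weak predictor from a strong one. It narrows considerably only once the sample reaches the hundreds.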

Lastly, you can look at the original meta-analytic studies included in the Sackett et al. (2022) meta-analysis and find studies that most closely describe your hiring process and situation. These studies often break down results based on job type, different types of performance criteria, and other factors that might help you make more informed decisions about the best hiring method for your situation (see, for example, this meta-analysis on the predictive validity of conscientiousness). By generalizing the validity of hiring tools to situations that are closer to yours, you can be more confident that the pre-employment tests you are using are valid for the positions you are testing for.

5 ways to maximize the validity of your hiring process

1. Invest in the design of your selection procedures

Crafting a high-quality selection procedure is no small feat, and reading this piece and other blogs in our science series is a good place to start. Purposefully building a high-quality selection process means that you carefully pick the hiring tools, plan out the different stages of the process, and develop a standardized way to evaluate and rank candidates. Once this process is implemented, it ensures that every decision in the hiring funnel is supported by a strong rationale and data. Additionally, it is likely to bring you closer to above-average validity estimates.

2. Use reliable and valid hiring tools

You cannot make a good cake with bad ingredients, nor can you craft a high-quality hiring process with poor hiring tools. Using psychometrically sound hiring tools helps to ensure that the hiring decisions you make are based on trustworthy data.

At TestGorilla, we invest a lot of time and resources to develop high-quality assessments. By applying rigorous science every step of the way, we are able to deliver high-quality, reliable, and valid hiring tests. You can read more about our process here.

Every organization and role is unique, and sometimes, you may need to develop your own custom test internally to suit your needs. When building a custom hiring tool, validity and reliability are no less important than when using an existing one. Following best practices when developing custom tools, like the ones for conducting a structured interview, can help you land in the range of above-average validity coefficients.

3. Ensure the tools you use are job-relevant

There are many ways to evaluate how good someone is at their job. By proactively thinking about what the job entails and how performance will be evaluated, you can ensure that you’re using the most relevant hiring tools in the selection process. The science is clear that the more closely a hiring tool relates to the actual job a candidate is hired to do, the better it is at differentiating between good and bad candidates. Using a job-relevant hiring tool can mean the difference between missing a good candidate and hiring them. Moreover, using job-relevant hiring tools is necessary to demonstrate the legal defensibility of your hiring process.

4. Consider the role you’re hiring for

Roles with different tasks and responsibilities can benefit from different hiring tools. Take some time to assess what everyday work will look like for the new hire. It also pays to understand what the first few days, weeks, and months will look like for these individuals. You can use job task analysis to get a comprehensive picture of the role you’re hiring for. Once you understand the requirements of the role, you can combine and select different hiring tools to maximize the validity of the process and avoid an overlap between them.

5. Consider the type of candidates you’re targeting for a role

When opening a role where you expect candidates with a lot of experience, knowledge, and relevant skills to apply, consider including role-specific job knowledge and skills tests. When opening a role where you expect applicants mainly to be fresh graduates, starters, or people making a career shift, consider assessing more universal skills such as problem solving, time management, or communication. With a test library comprising nearly 400 tests, we have you covered. Our upcoming behavioral competency framework can help you identify and assess universal competencies relevant to many different roles.

Lastly, adding a personality assessment like the OCEAN test or contextualized measures such as the Culture add or Motivation tests can help you understand the unique way in which candidates can contribute to your organization.

Understanding the validity of your hiring tools will help you make better hiring decisions

The science of hiring has evolved to provide a nuanced understanding of the effectiveness of different hiring tools. Organizational context, hiring goals, and ways in which performance is measured are all important factors that influence the validity and utility of a hiring tool. By carefully selecting hiring tools to match the specific needs of your organization and the roles you are hiring for, you can ensure you’re making sound hiring decisions.

Sources

Sackett, P. R., Zhang, C., Berry, C. M., & Lievens, F. (2022). Revisiting meta-analytic estimates of validity in personnel selection: Addressing systematic overcorrection for restriction of range. Journal of Applied Psychology, 107(11), 2040.

Sackett, P. R., Zhang, C., Berry, C. M., & Lievens, F. (2023). Revisiting the design of selection systems in light of new findings regarding the validity of widely used predictors. Industrial and Organizational Psychology, 1-18.

Wilmot, M. P., & Ones, D. S. (2019). A century of research on conscientiousness at work. Proceedings of the National Academy of Sciences, 116(46), 23004-23010.
