How to prepare for a criterion-related validation study

Science series materials are brought to you by TestGorilla’s team of assessment experts: A group of IO psychologists, data scientists, psychometricians, and IP development specialists with a deep understanding of the science behind skills-based hiring.

Criterion-related validity is essential to ensuring that pre-employment tests are predictive of an employee's job performance. Criterion-related validation studies help to scientifically evaluate and confirm what we typically assume in the selection context: That candidates who perform well on the assessment will perform well on the job if selected.

Conducting criterion-related validation studies can help to ensure that you are making quality hires, which should ultimately decrease turnover, increase individual, team, and organizational performance, and save you time and money spent on selection. Additionally, these studies provide hard evidence that your selection procedure measures job-related attributes and is defensible.

In this science series article, we’ll expand on ‘A brief introduction to: Test validation’ and take a more in-depth look at criterion-related validation studies: what they are, how to prepare for them, and how they benefit both your organization and your employees.

What are the key concepts in criterion-related validation?

Criterion-related validation

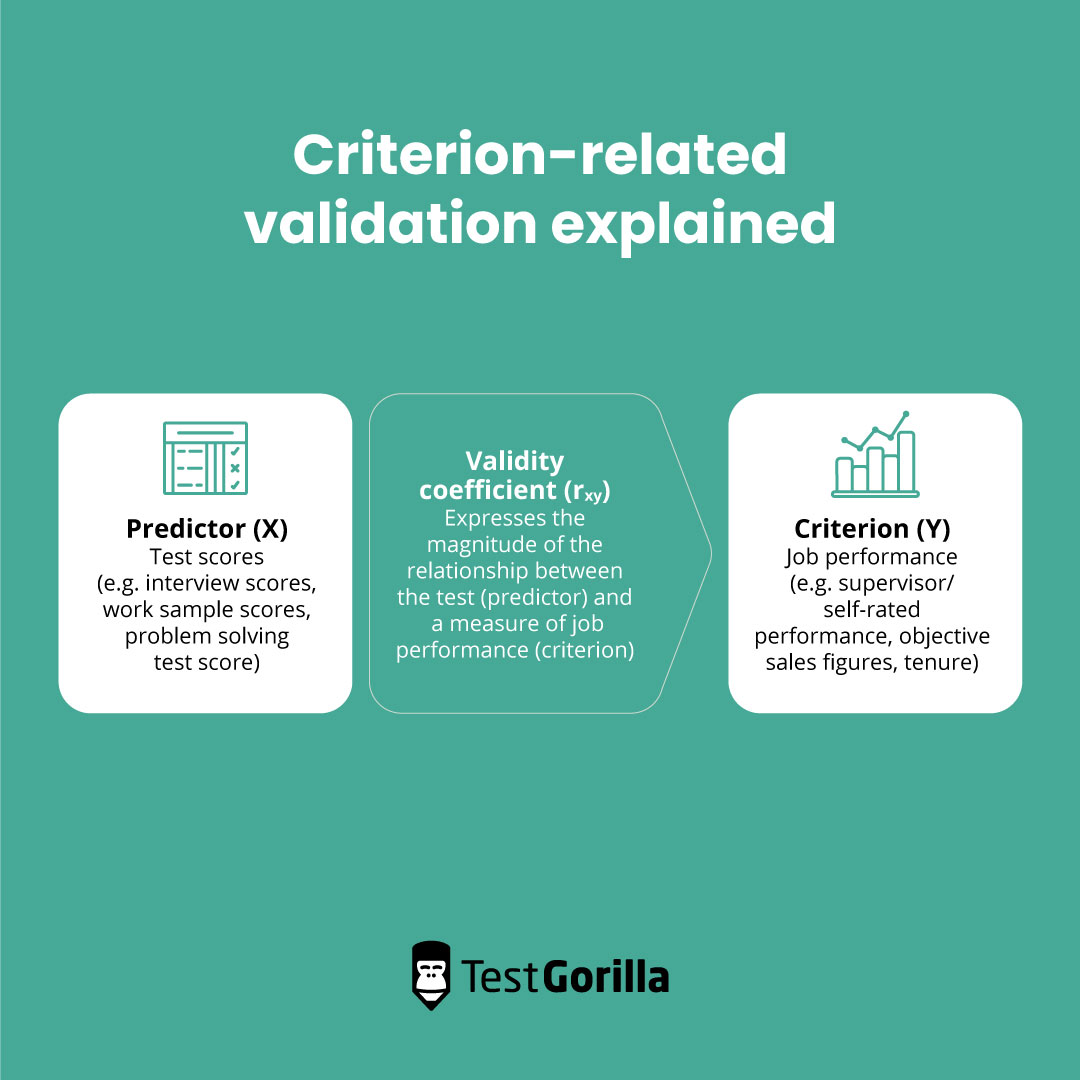

In criterion-related validation, we want to determine if we can make accurate inferences (or predictions) about candidates' expected job performance based on the scores they obtain on pre-employment tests. We evaluate this by looking at the extent to which test results (called “predictors”, e.g., interview scores, scores on a problem solving test, scores on a work sample test) align with some important work-relevant behavior and/or work outcome measures (called “criteria”, e.g., job performance ratings, tenure data, sales figures).

The extent to which the test scores are related to scores on a work-relevant outcome measure (e.g., performance ratings, turnover) is typically expressed as a correlation coefficient, called a validity coefficient.
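To make the idea of a validity coefficient concrete, here is a minimal sketch that computes a Pearson correlation between test scores and performance ratings. The function name and the six data points are hypothetical and chosen only for illustration; a real study would use a much larger sample and a dedicated statistics package.

```python
from math import sqrt

def validity_coefficient(predictor, criterion):
    """Pearson correlation between predictor scores and criterion scores."""
    if len(predictor) != len(criterion) or len(predictor) < 2:
        raise ValueError("need two equal-length lists with at least 2 values")
    n = len(predictor)
    mean_x = sum(predictor) / n
    mean_y = sum(criterion) / n
    # Sum of cross-products and sums of squares around the means
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(predictor, criterion))
    var_x = sum((x - mean_x) ** 2 for x in predictor)
    var_y = sum((y - mean_y) ** 2 for y in criterion)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a variable has no variance")
    return cov / sqrt(var_x * var_y)

# Hypothetical data: test scores (predictor) and supervisor ratings (criterion)
test_scores = [55, 62, 70, 74, 81, 90]
performance_ratings = [2.5, 3.0, 3.0, 3.5, 4.0, 4.5]

r = validity_coefficient(test_scores, performance_ratings)
print(f"validity coefficient r = {r:.2f}")
```

A coefficient close to zero would suggest that test scores tell you little about the criterion, while a larger positive value suggests that higher test scores tend to go with better outcomes.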

Predictive validity and concurrent validity

There are two types of criterion-related validation strategies: Predictive validity and concurrent validity.

Predictive validity

Predictive validity is the degree to which candidates’ test results accurately predict an important future work-relevant behavior and/or work outcome. With this strategy, you use candidates' test score data at the time they apply for the job (predictors) as well as data on their job performance (criteria) after they have been hired (i.e., there is a time lapse between the collection of predictor and criterion data).

For example, if a candidate scores highly on TestGorilla’s Sales Management test, you might predict that they will perform well in a sales manager role at your organization. If high scores on the Sales Management test correlate with being a top sales manager eight months after hiring, the test can be said to have good predictive validity.

Concurrent validity

Concurrent validity refers to the degree to which test results align with an important work-relevant behavior and/or work outcome measured at approximately the same time.

With this strategy, current employees’ test score data (predictors) and job performance data (criteria) from roughly the same time are used (i.e., both types of data are collected when employees are already in a job).

For example, you could give the Sales Management test to your existing sales managers and see how it aligns with their current sales performance. If the test scores correlate highly with the performance of your current sales managers, it suggests the Sales Management test has good concurrent validity.

What are the benefits of conducting criterion-related validation studies?

In the selection context, experts often refer to criterion-related validity as the “ultimate” form of validity. It is a powerful way to examine whether our tests are delivering value for our customers.

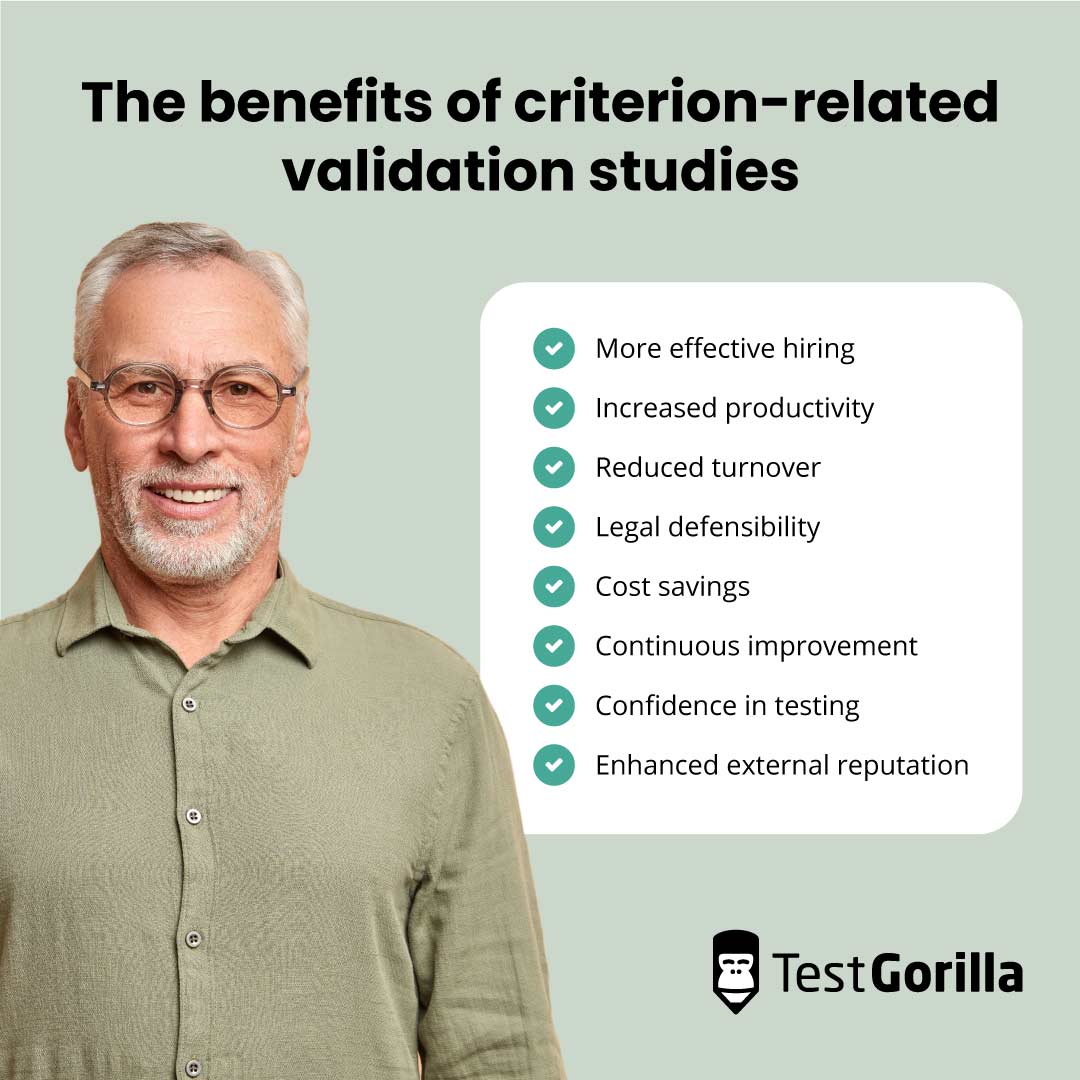

Criterion-related validation studies can provide scientific evidence that the tests and assessments you use to hire employees directly relate to job success, providing you with information to make smart, data-driven hiring decisions that reduce the time and cost-per-hire.

Other benefits include:

Increased productivity: When you are sure your tests predict job performance, you are more likely to identify candidates who will excel at their job.

Reduced turnover: Better prediction of who will succeed on the job means you are more likely to select candidates who are a good fit.

External reputation: Using validated tests for hiring signals to candidates that you are committed to fair, effective, and data-driven hiring.

Continuous improvement: Validation reports may identify areas where your testing or hiring process could be improved, providing you with actionable insights to inform changes.

Reducing legal risk: Validation study results can provide evidence that a test is covering job-related attributes or skills, which is important to support the legal defensibility of your hiring practices.

Now that we understand the key concepts in criterion-related validation and the benefits, let’s break down the steps necessary to prepare for a criterion-related validity study.

5 Steps for preparing a criterion-related validation study

Step 1: Identifying job-relevant predictors

Understanding predictors and criteria starts before the study even begins, with a careful job analysis.

Job analysis is a foundational step for many Human Resources functions as it entails a detailed look into what is done and what needs to be achieved in a particular job. A job analysis provides an understanding of the critical tasks, behaviors, equipment, and conditions for the role, which informs what knowledge, skills, and abilities an employee must have to be successful. This information should then be used to inform recruitment and selection, learning and development, performance management, and much more.

Once the required knowledge, skills, and abilities for the job are understood, they can be leveraged to select and/or design predictors (e.g., interviews, work samples, pre-employment tests).

For example, to hire a sales associate, you may create an assessment including TestGorilla’s B2B Lead Generation test, HubSpot CRM test, and Communication test. You could also include a behavioral interview to further assess their skills. During the interview, you could ask potential hires about how they handle difficult clients or maintain positive relationships. Scores on all of these measures serve as predictor data in criterion-related validation studies.

Step 2: Identifying job-relevant criteria

In the selection context, job performance is most often the criterion of interest. Job performance includes both job-relevant behaviors (how the job is done) and job-relevant outcomes (what is achieved on the job) that constitute success for an individual employee (e.g., number of sales, quality of work).

During job analysis, the specific tasks included in the job description should ideally be tied to measurable criteria that can be used for performance management, and later as criteria in criterion-related validation studies. Such criteria can be objective indicators of performance (e.g., sales volume, productivity metrics, error rates, project completion) or subjective indicators of performance (e.g., supervisor, peer, subordinate, or self-ratings of performance).

Other potentially relevant criteria could also be considered in criterion-related validity studies, such as employee tenure, training performance, job satisfaction, team performance (e.g., supervisor ratings of team performance, number of targets met), or organizational performance (e.g., profit, overall sales).

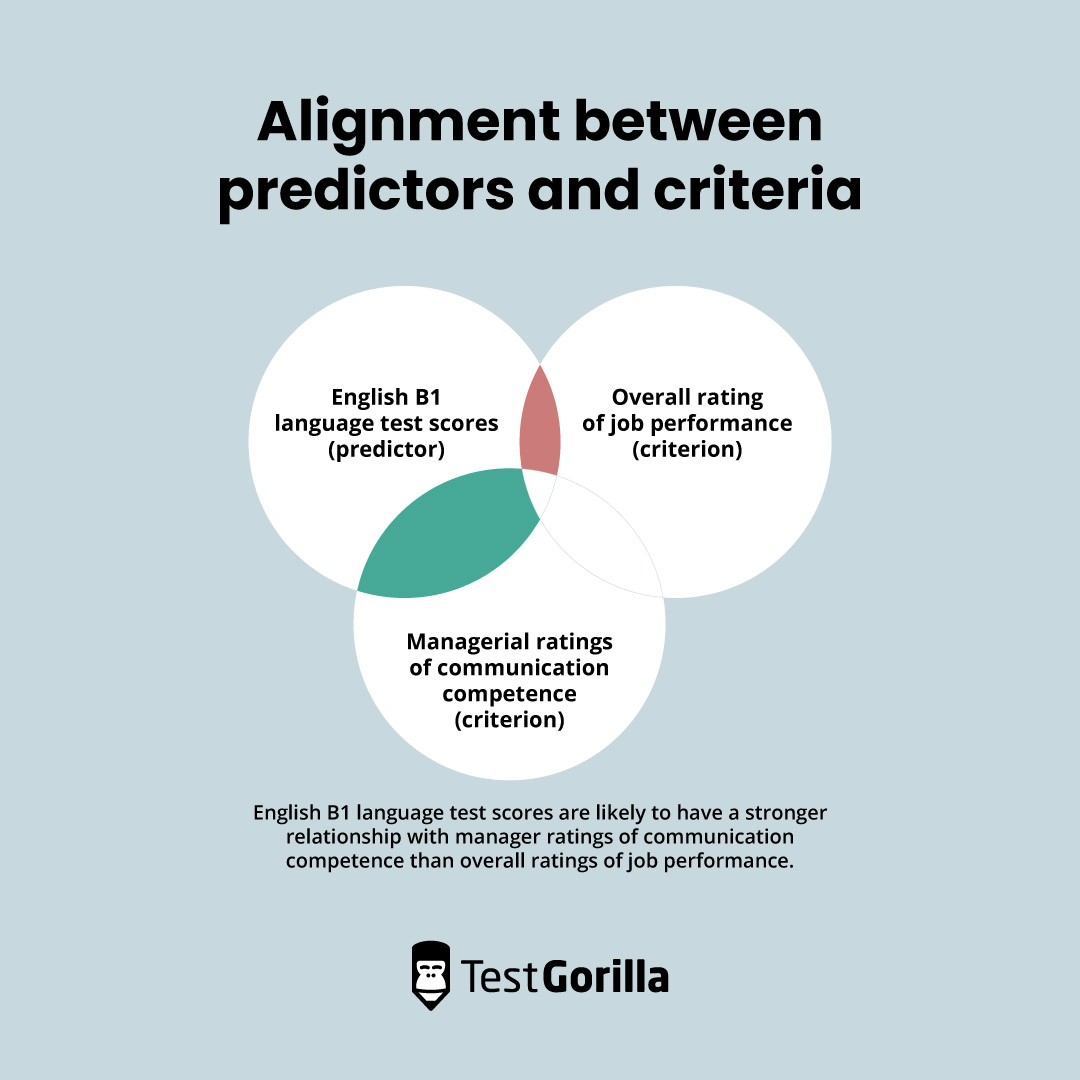

Measures of job performance can be composite (i.e., one single combined rating to account for an employee’s performance) or can constitute multiple, more specific ratings. An example of a composite, or overall, measure of job performance is a supervisor rating of an employee’s overall job performance on a scale of one to five. A more specific rating would be a supervisor rating of an employee’s communication competence.
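One way to build a composite from several specific ratings is a simple unweighted average. The sketch below assumes all ratings use the same one-to-five scale; the competency names and the equal weighting are illustrative assumptions, not a prescribed method.

```python
from statistics import mean

def composite_rating(specific_ratings):
    """Unweighted mean of specific ratings that share the same scale."""
    if not specific_ratings:
        raise ValueError("at least one rating is required")
    return mean(specific_ratings.values())

# Hypothetical supervisor ratings on a one-to-five scale
ratings = {"communication": 4, "product_knowledge": 3, "client_relationships": 5}

overall = composite_rating(ratings)
print(f"composite rating: {overall:.1f}")
```

Keeping the specific ratings alongside the composite lets you later check which predictors relate to which aspects of performance.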

It is also important to consider the alignment between predictors and criteria in criterion-related validation studies. For example, you’re more likely to detect a substantial relationship between a specific predictor (e.g., scores on an English B1 language test) and a specific, well-matched criterion (e.g., managerial rating of English communication competence) than a very broad (e.g., an overall rating of performance) or mismatched criterion (e.g., number of coding errors).

All predictors and criteria are flawed to varying degrees: none are perfectly reliable or valid. Nevertheless, when selecting criteria for a validation study, it is crucial to choose criteria that are as job-relevant, reliable, valid, fair, and unbiased as possible. Many validation studies have failed due to unreliable and invalid criteria rather than unreliable and invalid predictors.

Step 3: Planning your criterion-related validation study

In planning your criterion-related validation study, it's crucial to make several key decisions upfront.

First, you must choose between a predictive and concurrent validity study. This choice depends on whether you're more interested in forecasting future job performance based on current test scores (predictive validity) or if you want to understand how the test scores of current employees relate to their present job performance (concurrent validity). We anticipate that most of our customers will be interested in conducting predictive validity studies.

Next, you'll need to determine the necessary sample size for your study. A larger sample size allows for more sophisticated statistical techniques to be used and typically provides more reliable and generalizable results. That said, it also requires more resources. You'll need to balance these factors in your decision-making.

We generally recommend a minimum of 100 employees in a particular job role or job family who have completed TestGorilla tests and for whom you have (or can obtain) criterion scores. Nonetheless, simpler analyses with smaller samples may be possible.
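To see why sample size matters, the sketch below computes an approximate 95% confidence interval for a correlation using the Fisher z-transformation, a standard textbook approach. The observed coefficient of 0.30 and the sample sizes are hypothetical values picked for illustration.

```python
from math import atanh, sqrt, tanh

def correlation_ci(r, n, z_crit=1.96):
    """Approximate confidence interval for a correlation via Fisher's z."""
    if n <= 3:
        raise ValueError("n must be greater than 3")
    z = atanh(r)                # transform r to the z scale
    se = 1 / sqrt(n - 3)        # standard error on the z scale
    # Build the interval on the z scale, then transform back to r
    return tanh(z - z_crit * se), tanh(z + z_crit * se)

# Same hypothetical coefficient, three different sample sizes
for n in (30, 100, 300):
    low, high = correlation_ci(0.30, n)
    print(f"n={n}: r=0.30, approx. 95% CI [{low:.2f}, {high:.2f}]")
```

The interval narrows as the sample grows, which is one reason larger samples give more dependable estimates of a validity coefficient.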

You must also identify what you'll need in terms of:

Personnel (e.g., if additional data needs to be collected you may need a project manager, the involvement of human resources and the communications department, and input from managers and employees),

Data (e.g., use of existing data, collecting new data, any permissions that need to be sought),

Time to conduct the study. For instance, predictive validity studies, which require you to wait for future performance data, tend to take longer than concurrent validity studies.

Outline a timeline that accommodates your needs, your resource availability, and the type of study you've chosen. These decisions will set the stage for a successful validation study.

Step 4: Gathering predictor and criterion data

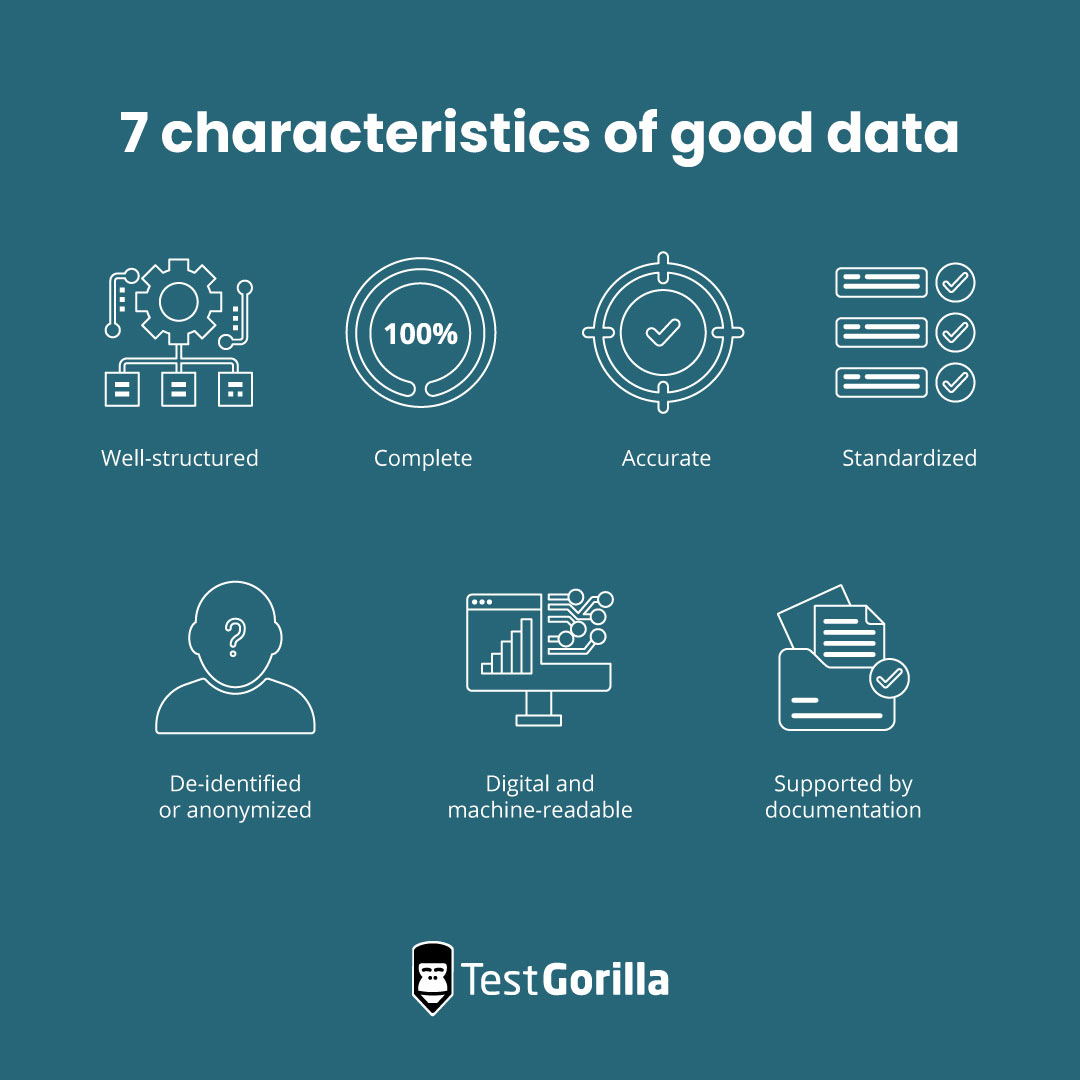

Good data is the backbone of any criterion-related validation study. You will need to gather two main types of data: predictor scores and criterion scores. The process of data collection must be organized and systematic to ensure the data is well-structured, standardized, accurate, and complete.

Predictor data can usually be downloaded easily from an online testing platform like TestGorilla or an applicant tracking system. Likewise, criterion data can typically be sourced from your performance management system or human resources information system. Both these sets of data can be exported in a machine-readable format (e.g., .csv or .xlsx files) that facilitates easy data matching, storage, sharing, and analysis.
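As a rough illustration of data matching, the sketch below joins a predictor export and a criterion export on a shared ID column and reports IDs that appear in only one file. The column names (`candidate_id`, `test_score`, `performance_rating`) and the inline data are hypothetical; real exports will use whatever fields your systems provide.

```python
import csv
import io

# Hypothetical exports; in practice these would be read from .csv files
predictor_csv = """candidate_id,test_score
A101,78
A102,64
A103,91
"""
criterion_csv = """candidate_id,performance_rating
A101,4.0
A103,4.5
A104,3.0
"""

def read_by_id(text, id_column):
    """Read CSV text into a dict of rows keyed by the ID column."""
    return {row[id_column]: row for row in csv.DictReader(io.StringIO(text))}

def match_records(predictors, criteria):
    """Return rows present in both sources, plus IDs found in only one."""
    matched = []
    for person_id, pred_row in predictors.items():
        crit_row = criteria.get(person_id)
        if crit_row is not None:
            matched.append({**pred_row, **crit_row})
    unmatched = sorted(set(predictors) ^ set(criteria))
    return matched, unmatched

predictors = read_by_id(predictor_csv, "candidate_id")
criteria = read_by_id(criterion_csv, "candidate_id")
matched, unmatched = match_records(predictors, criteria)
print(f"{len(matched)} matched records, unmatched IDs: {unmatched}")
```

Reviewing the unmatched IDs before analysis helps catch typos, duplicate records, or people who were tested but never hired.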

Ideally, clear documentation should exist that explains what each variable represents, how it was collected, and any other information necessary to understand and analyze the data. Remember, inaccurate or incomplete data can lead to misleading results and misguided decisions, which ultimately diminish the value of a criterion-related validity study.

Lastly, data privacy and protection should be front and center in any criterion-related validity study. Organizations need to adhere to applicable data privacy laws and regulations (e.g., GDPR), internal policies, contractual obligations, and best practices. To comply with these requirements, data should be de-identified or anonymized before it is shared. This means removing or replacing any information that could be used to identify an individual participant, such as names or ID numbers. Criterion-related validity studies focus on aggregated results, meaning that no individual responses will be identifiable.
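A minimal sketch of de-identification might replace each ID with a random study code and drop direct identifiers before sharing. The field names (`employee_id`, `name`, `email`) are hypothetical, and this is an illustration only: as long as the lookup key exists, the data is pseudonymized rather than fully anonymized, so the key must be stored securely or destroyed.

```python
import secrets

def deidentify(records, id_field="employee_id", drop_fields=("name", "email")):
    """Replace IDs with random study codes and drop direct identifiers."""
    key = {}        # original ID -> study code; keep separate from shared data
    used = set()
    cleaned = []
    for record in records:
        original_id = record[id_field]
        if original_id not in key:
            code = f"P{secrets.token_hex(4)}"
            while code in used:          # regenerate on the rare collision
                code = f"P{secrets.token_hex(4)}"
            used.add(code)
            key[original_id] = code
        safe = {k: v for k, v in record.items()
                if k != id_field and k not in drop_fields}
        safe["study_code"] = key[original_id]
        cleaned.append(safe)
    return cleaned, key

# Hypothetical matched records
records = [
    {"employee_id": "E1", "name": "Ana", "test_score": 78, "rating": 4.0},
    {"employee_id": "E2", "name": "Ben", "test_score": 64, "rating": 3.0},
]
shared, lookup = deidentify(records)
print(shared)
```

Only the `shared` list would leave your organization; indirect identifiers such as rare job titles may also need to be reviewed before sharing.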

By prioritizing accurate data collection and privacy, you can build a robust and ethically sound validation study.

Step 5: Participating in TestGorilla criterion-related validation initiatives

If your organization has the in-house expertise, you can certainly conduct the analyses for a criterion-related validation study internally. However, it's worth noting that as part of our ongoing efforts to improve the accuracy and fairness of our tests, we’re conducting a series of criterion-related validation studies to better understand the impact of TestGorilla’s assessments on work-related outcomes.

Our Assessment team, which consists of experts with a wealth of experience in test development, psychometrics, and validation studies, will be conducting these studies.

Whether you have a large number (more than 100) or a small number (fewer than 100) of hires, there are various types of initiatives that your organization could potentially participate in. You only need to have tested and hired candidates using TestGorilla assessments and have (or be willing to gather) performance data on these hires.

Organizations that sign up for the initiative and meet the qualifying criteria for large validation studies will receive a scientific report documenting the results of the research along with other observations and recommendations.

Participating in TestGorilla’s criterion-related validation initiatives can make the process of conducting a validation study quicker, smoother and more efficient; support our ongoing test development and refinement efforts; and provide you with valuable insights to improve your hiring process.

If you’re interested in being a part of our validation study initiative, please complete this form.

Discover TestGorilla's science-backed pre-employment tests

Our pre-employment screening tests facilitate a standardized, multi-measure, and holistic hiring process. Developed by experts and trusted by over 10,000 employers globally, TestGorilla’s test library is a tried and trusted tool for minimizing selection error and unconscious bias during hiring. These science series blogs are here to help you understand the science behind and value of our assessments, and use them correctly.
